Migrating data with Pentaho ETL (Kettle)

Pentaho Data Integration (also known as Kettle) is one of the leading open source integration solutions. With PDI/Kettle, you can take data from a multitude of sources, transform the data in a particular way, and load the data into just as many target systems.

For this tutorial, I would like to demonstrate how to convert an XML file containing category data into Category Impex file using Pentaho Data Integration.

To illustrate more capabilities and features of Pentaho, I decided to go with the comprehensive XML structure provides the data in a Entity-Attribute-Values (EAVs) model.

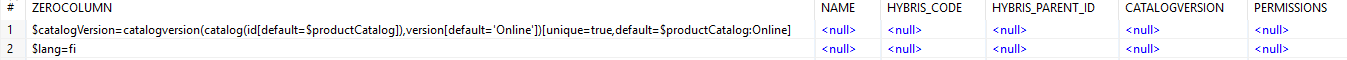

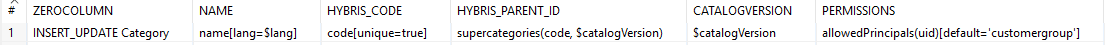

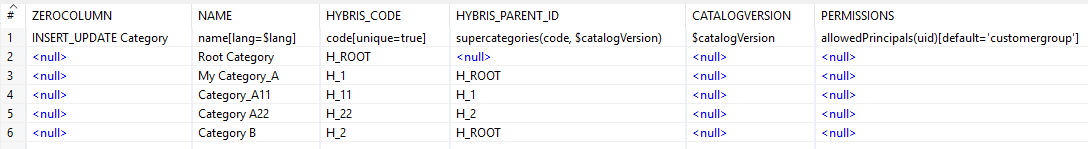

The target format is ImpEx. For the XML above, the following result is expected:

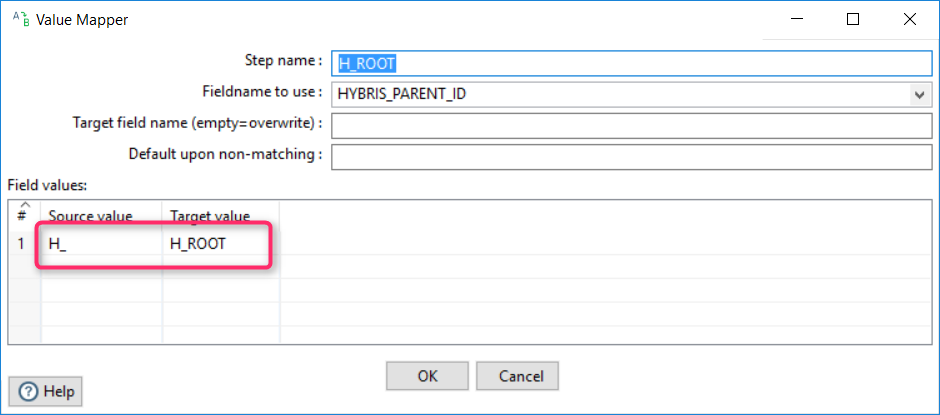

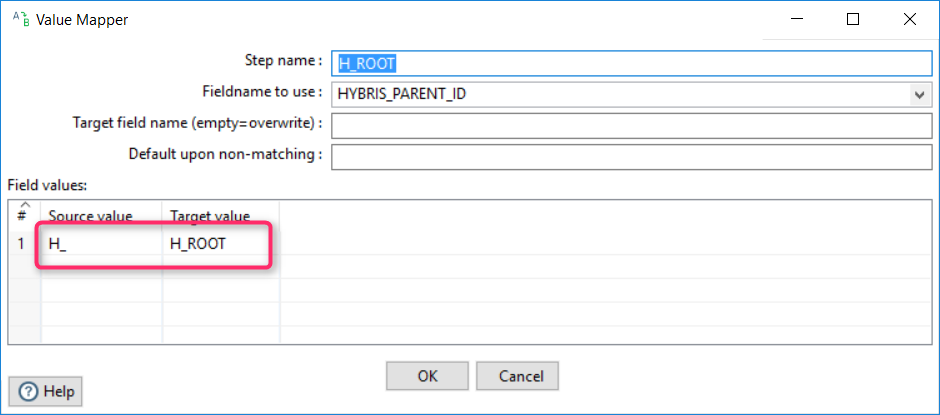

Please note that category IDs begin with “H_”. It is an optional change, just to demonstrate the Pentaho capabilities of Value Mappers. In the real projects, converting of IDs is also important, but the algorithm may be more complex.

Root nodes are represented by empty values in XML. In the target format we will need to put them under one root node (let’s call it H_ROOT).

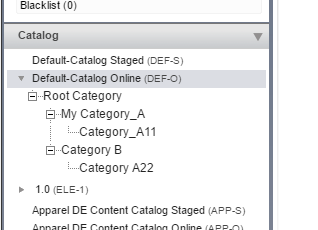

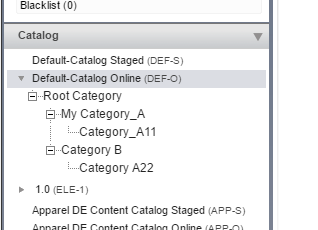

After applying this Impex, hybris creates a category tree:

So our goal is to transform original XML to this ImpEx file. In the previous article I showed how it can be implemented without using any out-of-the-shelf software. Today I am going to tell you about the alternate solution. This solution is a configuration for Pentaho, a data integration platform.

So our goal is to transform original XML to this ImpEx file. In the previous article I showed how it can be implemented without using any out-of-the-shelf software. Today I am going to tell you about the alternate solution. This solution is a configuration for Pentaho, a data integration platform.

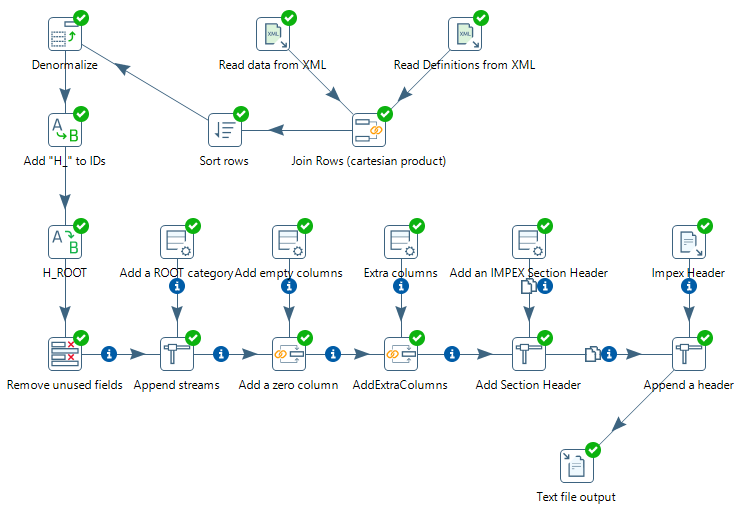

The process consists of 19 steps (after each step I put a table with the results of the step):

The process consists of 19 steps (after each step I put a table with the results of the step):

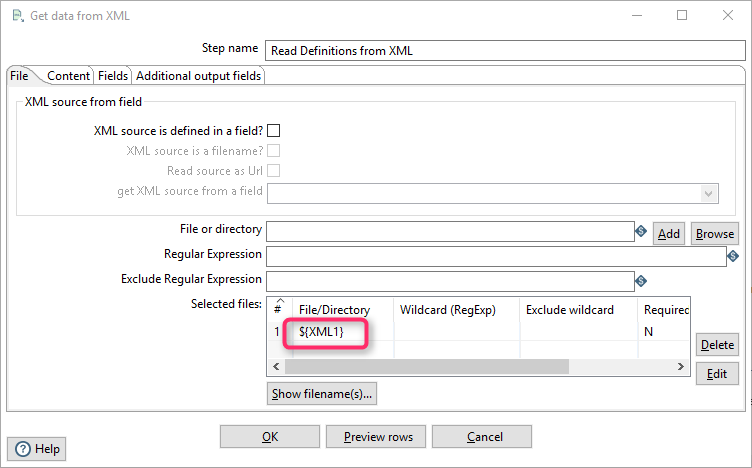

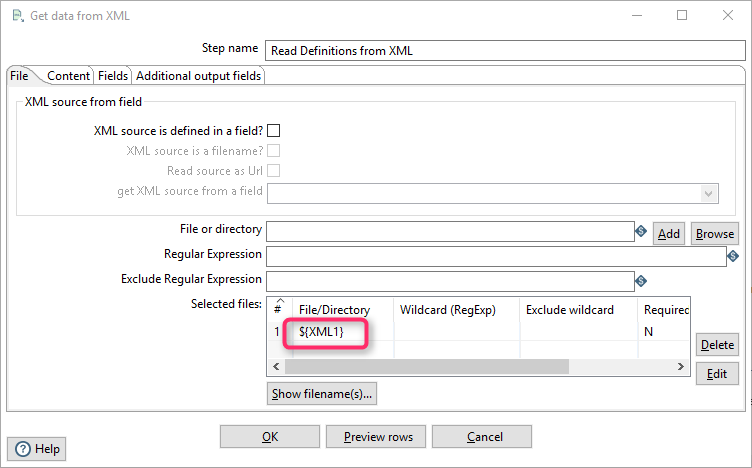

XML1 is a constant that is defined in the project configuration. You can also use a filename instead of the variable reference.

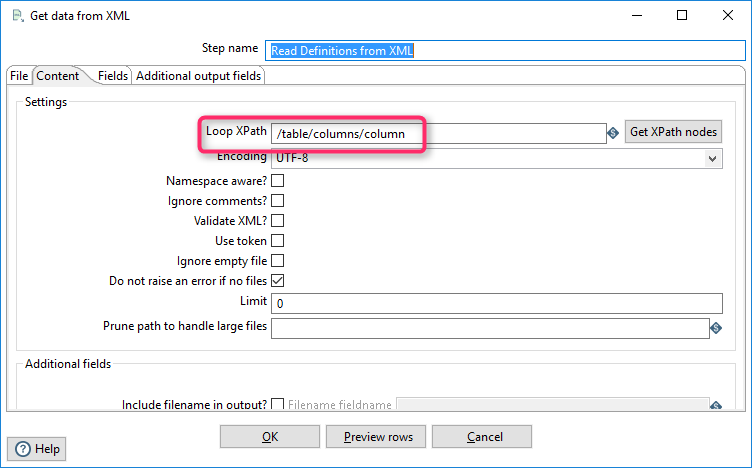

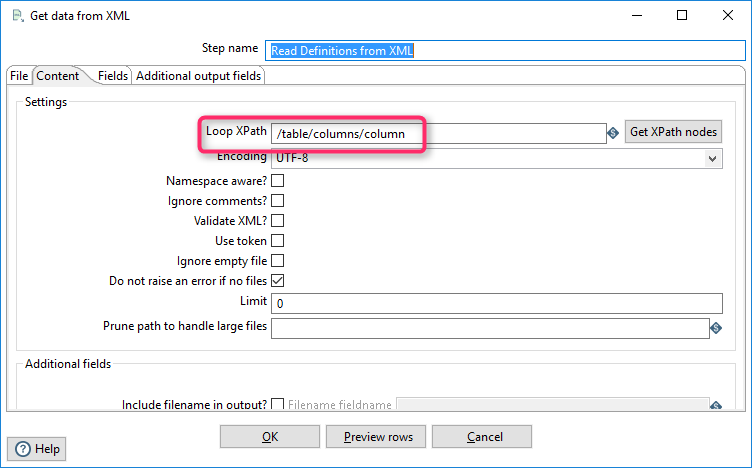

Content. The “Loop XPath” defines a root node for the processing. This job processes the headers, so I filter the header section (see XML above).

XML1 is a constant that is defined in the project configuration. You can also use a filename instead of the variable reference.

Content. The “Loop XPath” defines a root node for the processing. This job processes the headers, so I filter the header section (see XML above).

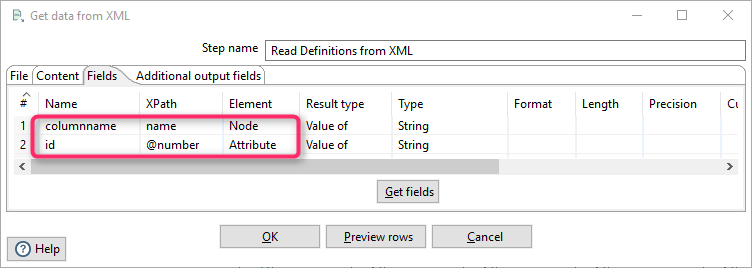

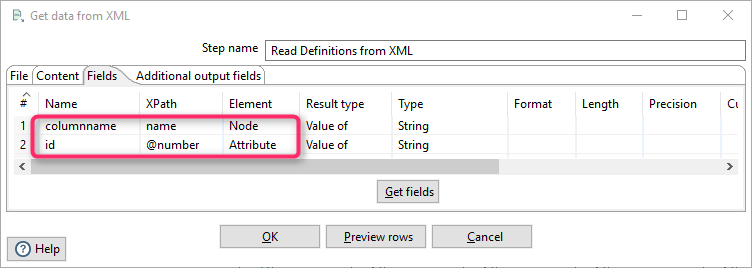

Fields. This section defines what XML tag/attribute values should be mapped with what fields. The rules below say: put a value from name tag into a first column (called “columnname”) and a value of the attribute into the variable “id”.

Fields. This section defines what XML tag/attribute values should be mapped with what fields. The rules below say: put a value from name tag into a first column (called “columnname”) and a value of the attribute into the variable “id”.

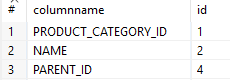

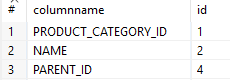

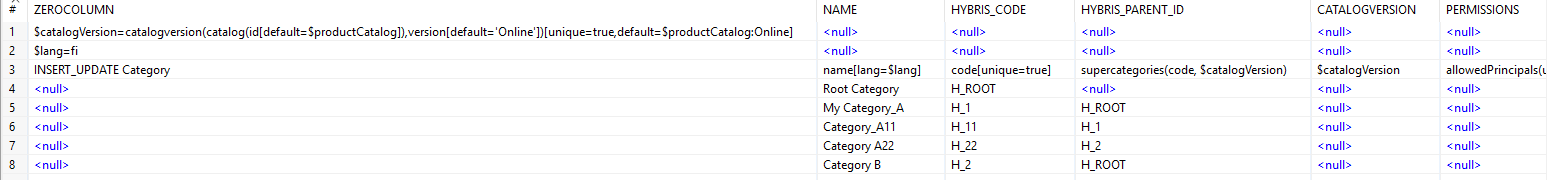

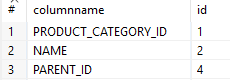

As a result, we have a list of category attributes as well as their codes:

As a result, we have a list of category attributes as well as their codes:

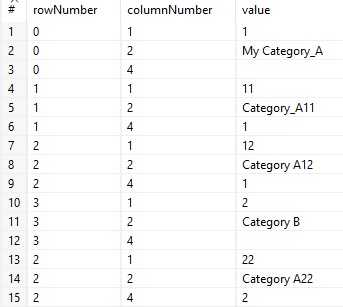

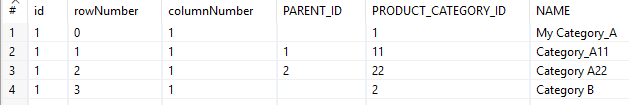

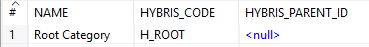

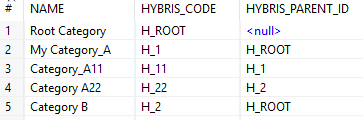

The resulting set looks like:

The resulting set looks like:

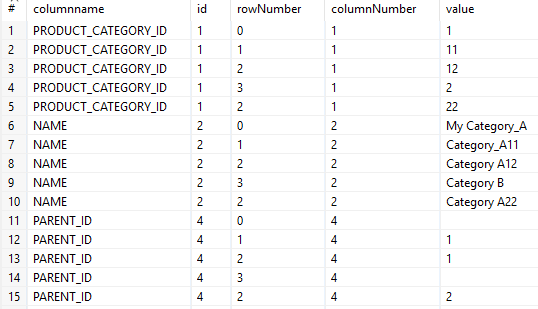

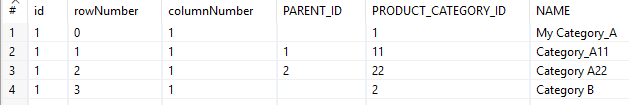

For some categories, there is no parent category, so the values of the attribute #4 (PARENT_ID) are empty for them.

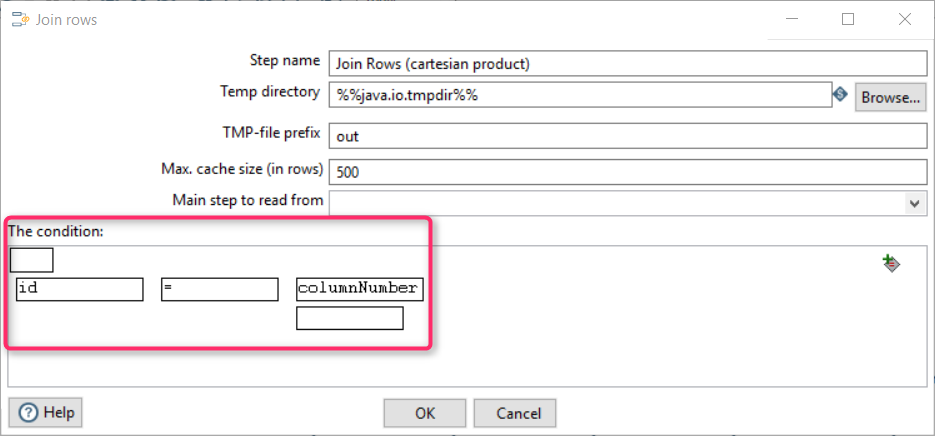

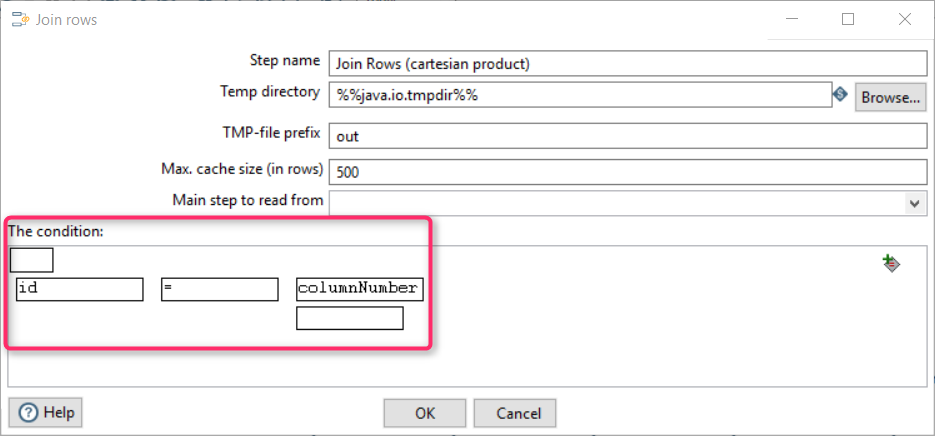

. Both fields mean the same thing, a field name id.

For some categories, there is no parent category, so the values of the attribute #4 (PARENT_ID) are empty for them.

. Both fields mean the same thing, a field name id.

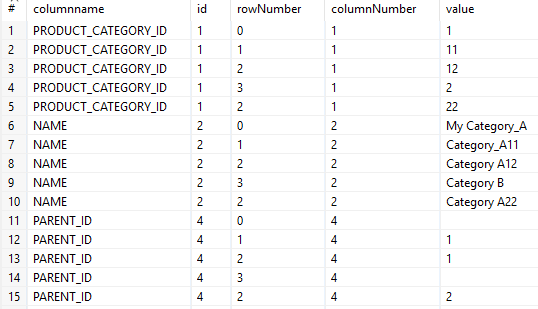

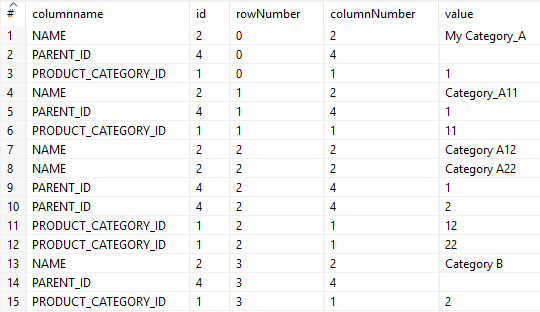

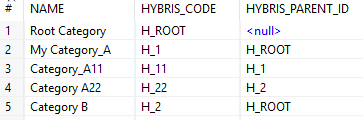

The resulting table is

The resulting table is

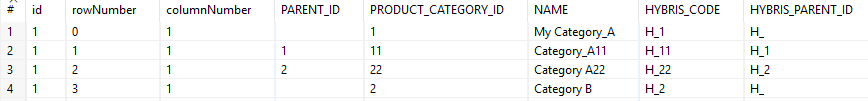

The result:

The result:

The resulting set is

The resulting set is

The result:

The result:

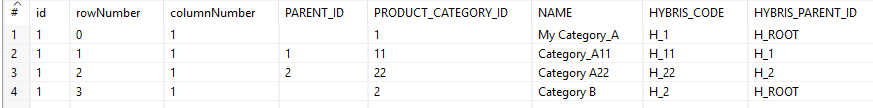

After this operation, the resulting set looks like

After this operation, the resulting set looks like

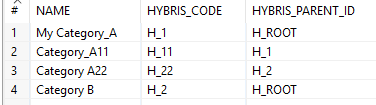

As a result, the following set is created:

As a result, the following set is created:

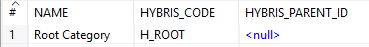

As a result, Root Category is added at the beginning of the list:

As a result, Root Category is added at the beginning of the list:

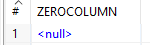

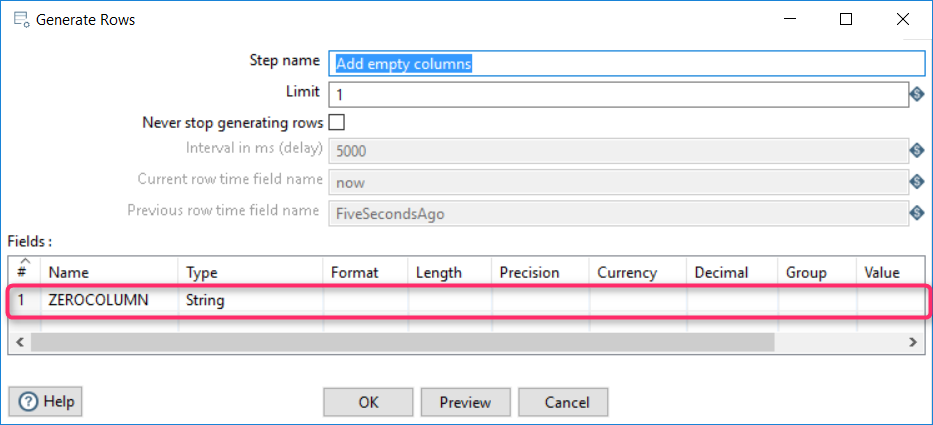

Adding a empty column

As a result, a super simple dataset is created:

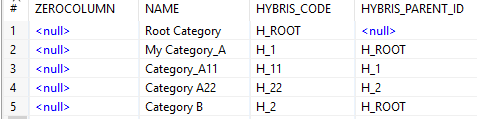

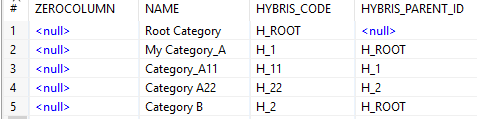

As a result, an empty column was added in the beginning:

As a result, an empty column was added in the beginning:

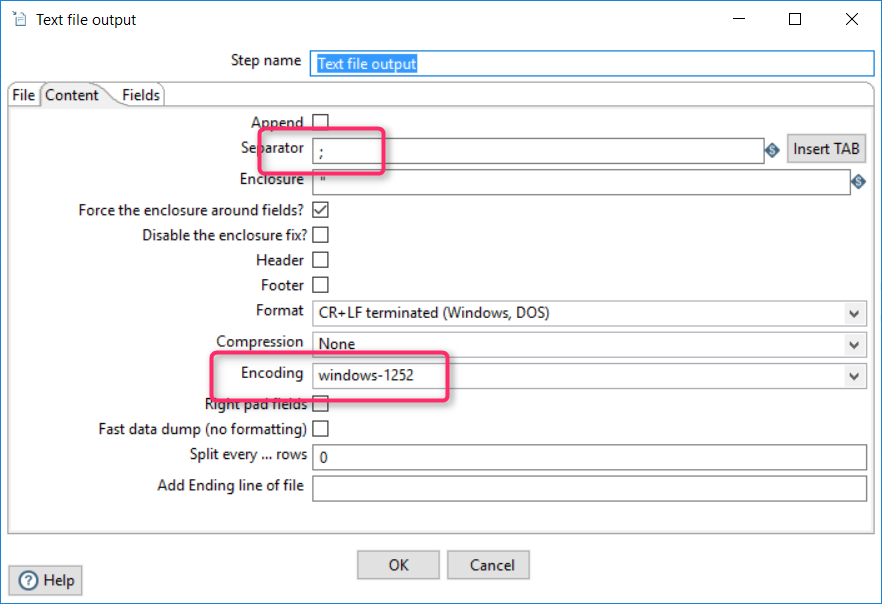

You can change the encoding and the separator according to your preferences and Impex configuration:

You can change the encoding and the separator according to your preferences and Impex configuration:

/

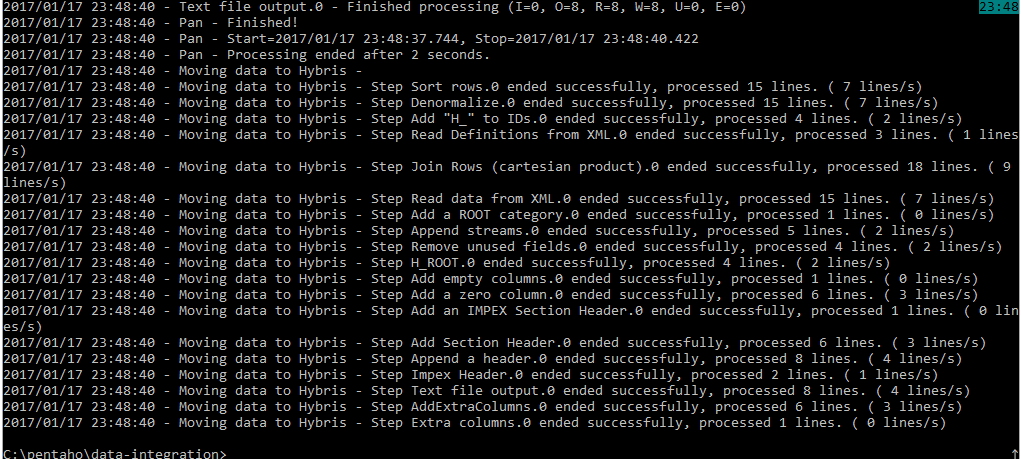

) that allows you to execute transformations from a terminal window.

To execute the tranformation process explained above, the simplest command is used:

/

) that allows you to execute transformations from a terminal window.

To execute the tranformation process explained above, the simplest command is used:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<table>

<columns>

<column number="1">

<name>PRODUCT_CATEGORY_ID</name>

</column>

<column number="2">

<name>NAME</name>

</column>

<column number="4">

<name>PARENT_ID</name>

</column>

</columns>

<rows>

<row rowNumber="0">

<value columnNumber="1">1</value>

<value columnNumber="2">My Category_A</value>

<value columnNumber="4"></value>

</row>

<row rowNumber="1">

<value columnNumber="1">11</value>

<value columnNumber="2">Category_A11</value>

<value columnNumber="4">1</value>

</row>

<row rowNumber="2">

<value columnNumber="1">12</value>

<value columnNumber="2">Category A12</value>

<value columnNumber="4">1</value>

</row>

<row rowNumber="3">

<value columnNumber="1">2</value>

<value columnNumber="2">Category B</value>

<value columnNumber="4"></value>

</row>

<row rowNumber="2">

<value columnNumber="1">22</value>

<value columnNumber="2">Category A22</value>

<value columnNumber="4">2</value>

</row>

</rows></table>

<table>

<columns>

<column number="1">

<name>PRODUCT_CATEGORY_ID</name>

</column>

<column number="2">

<name>NAME</name>

</column>

<column number="4">

<name>PARENT_ID</name>

</column>

</columns>

<rows>

<row rowNumber="0">

<value columnNumber="1">1</value>

<value columnNumber="2">My Category_A</value>

<value columnNumber="4"></value>

</row>

<row rowNumber="1">

<value columnNumber="1">11</value>

<value columnNumber="2">Category_A11</value>

<value columnNumber="4">1</value>

</row>

<row rowNumber="2">

<value columnNumber="1">12</value>

<value columnNumber="2">Category A12</value>

<value columnNumber="4">1</value>

</row>

<row rowNumber="3">

<value columnNumber="1">2</value>

<value columnNumber="2">Category B</value>

<value columnNumber="4"></value>

</row>

<row rowNumber="2">

<value columnNumber="1">22</value>

<value columnNumber="2">Category A22</value>

<value columnNumber="4">2</value>

</row>

</rows></table>

$productCatalog=Default

"$catalogVersion=catalogversion(catalog(id[default=$productCatalog]),version[default='Online'])[unique=true,default=$productCatalog:Online]";;;;;

"$lang=en";;;;;

"INSERT_UPDATE Category";"code[unique=true]";"supercategories(code, $catalogVersion)";"name[lang=$lang]";"$catalogVersion";"allowedPrincipals(uid)[default='customergroup']"

;"H_ROOT";;"Root Category";;

;"H_1";"H_ROOT";"My Category_A";;

;"H_11";"H_1";"Category_A11";;

;"H_22";"H_2";"Category A22";;

;"H_2";"H_ROOT";"Category B";;

"$catalogVersion=catalogversion(catalog(id[default=$productCatalog]),version[default='Online'])[unique=true,default=$productCatalog:Online]";;;;;

"$lang=en";;;;;

"INSERT_UPDATE Category";"code[unique=true]";"supercategories(code, $catalogVersion)";"name[lang=$lang]";"$catalogVersion";"allowedPrincipals(uid)[default='customergroup']"

;"H_ROOT";;"Root Category";;

;"H_1";"H_ROOT";"My Category_A";;

;"H_11";"H_1";"Category_A11";;

;"H_22";"H_2";"Category A22";;

;"H_2";"H_ROOT";"Category B";;

So our goal is to transform original XML to this ImpEx file. In the previous article I showed how it can be implemented without using any out-of-the-shelf software. Today I am going to tell you about the alternate solution. This solution is a configuration for Pentaho, a data integration platform.

So our goal is to transform original XML to this ImpEx file. In the previous article I showed how it can be implemented without using any out-of-the-shelf software. Today I am going to tell you about the alternate solution. This solution is a configuration for Pentaho, a data integration platform.

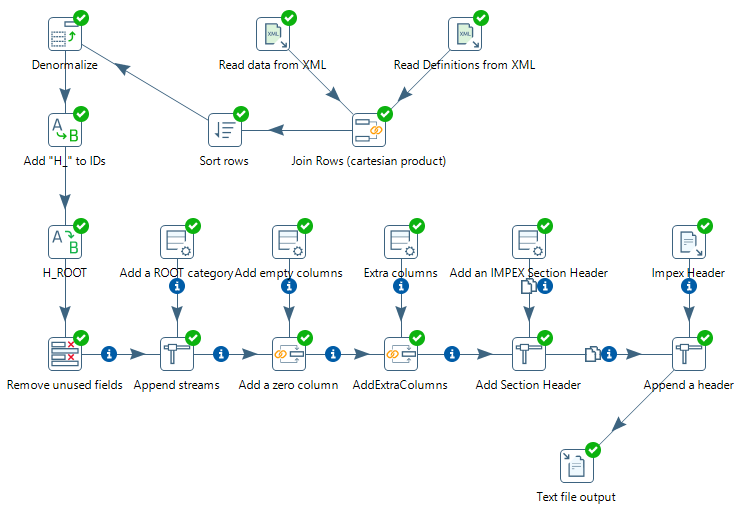

Pentaho configuration

Let’s start at the ends and have a look at the final result. In this tutorial we’ll come to the following process: The process consists of 19 steps (after each step I put a table with the results of the step):

The process consists of 19 steps (after each step I put a table with the results of the step):

- Read definitions from XML. Reads column names and their codes.

- Read values from XML. Reads column values. This part of XML doesn’t contain the column names, only their IDs. So next phase is joining them together.

- Join Rows. Two previous datasets were joined on id=columnNumber.

- Sort rows. Sorts by rowNumber.

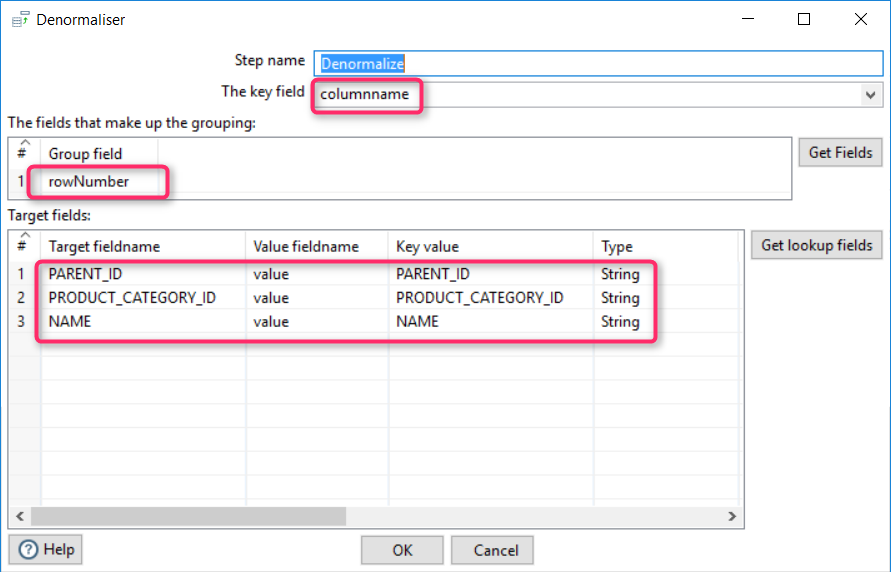

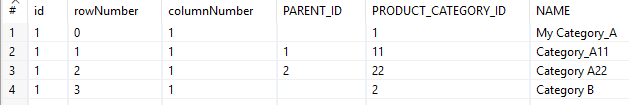

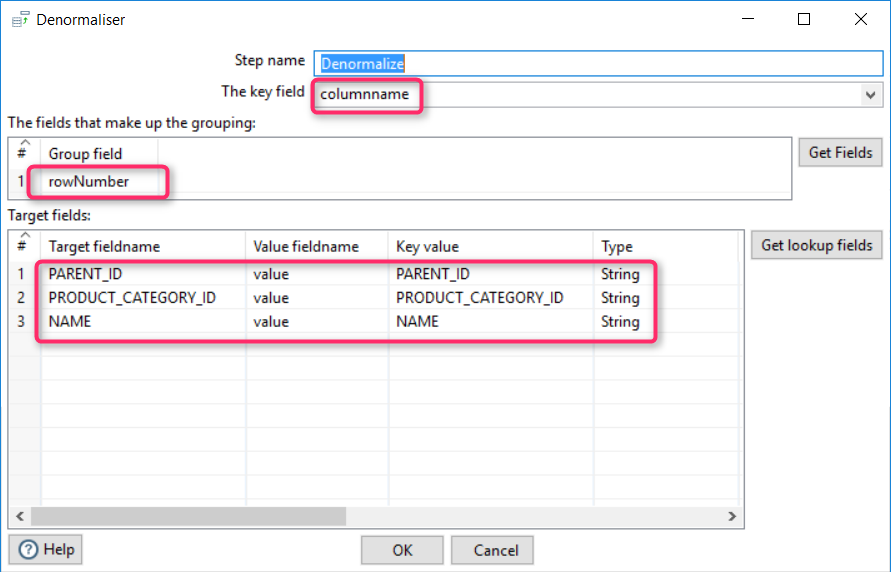

- Denormalize. Pentaho creates additional columns (PARENT_ID, PRODUCT_CATEGORY_ID, NAME) using the values of the field “value”.

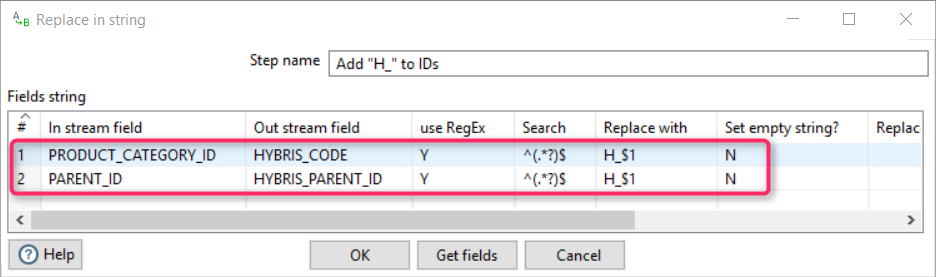

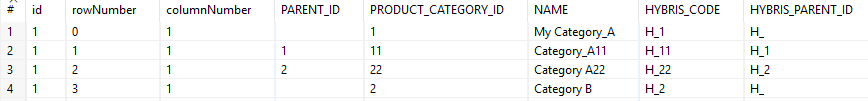

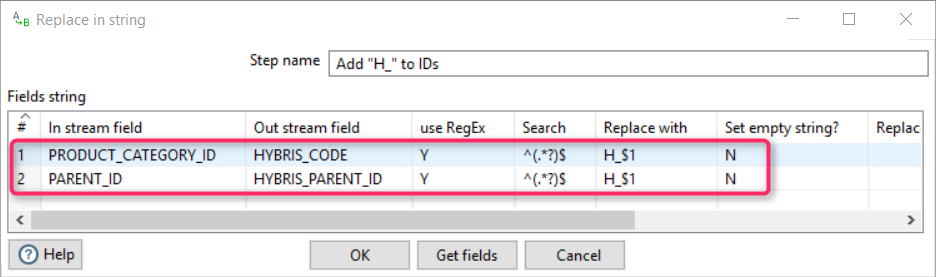

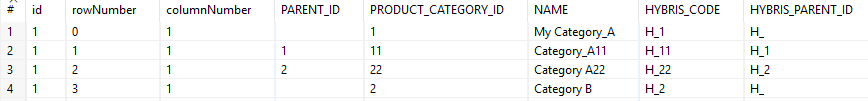

- Converting IDs into hybris IDs. According to the task, we add “H_” to the original ID.

- Converting root nodes to H_ROOT.

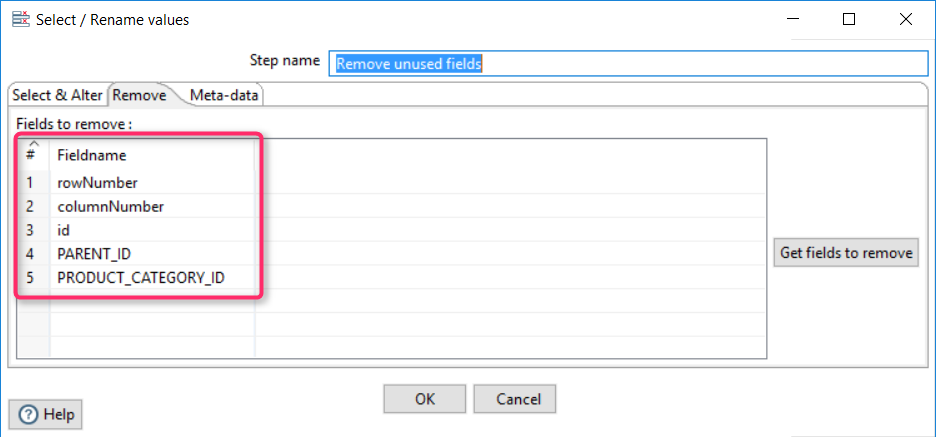

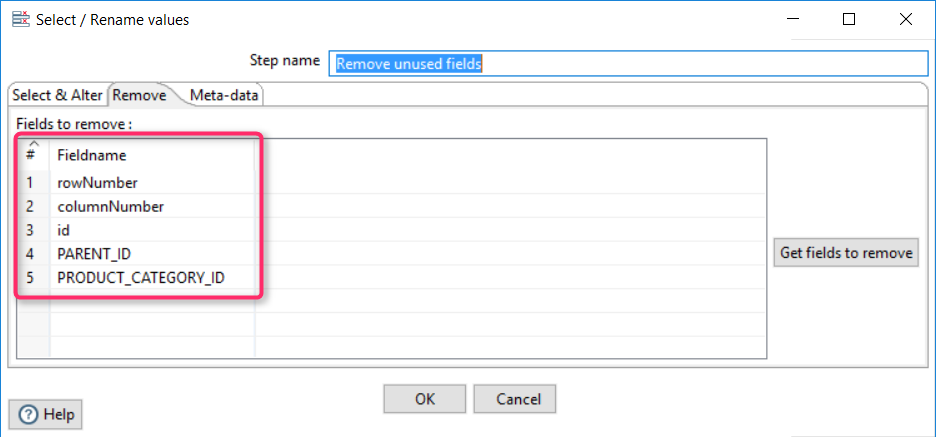

- Remove unused fields (columns).

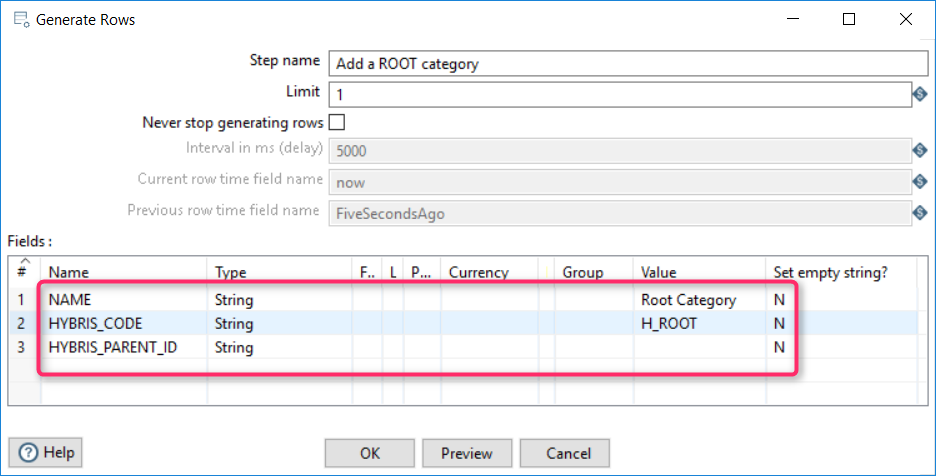

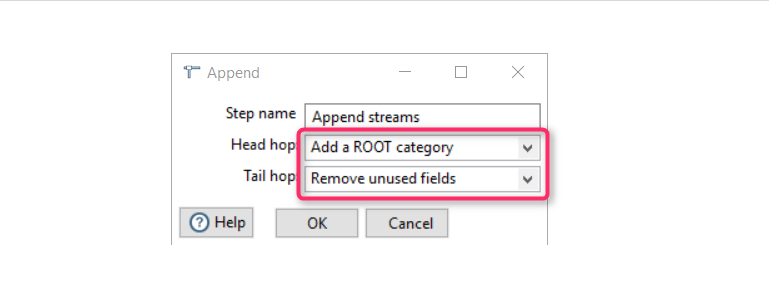

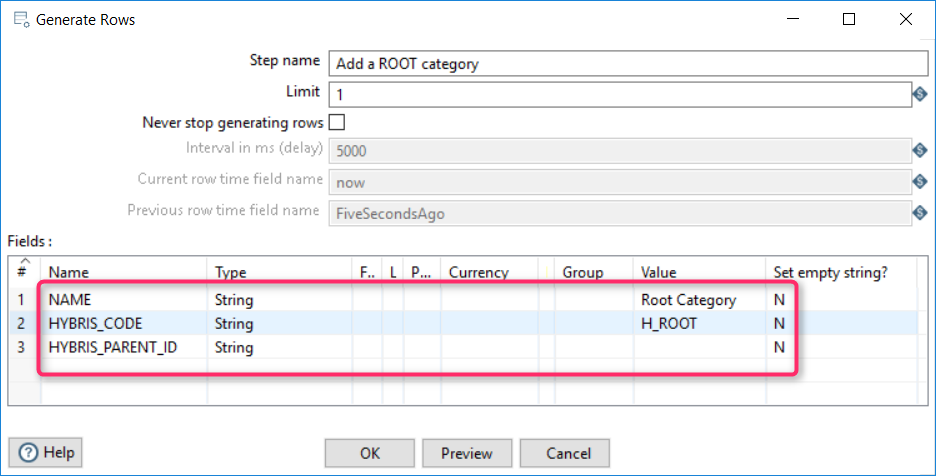

- Append a Root category:

- First, create a root category

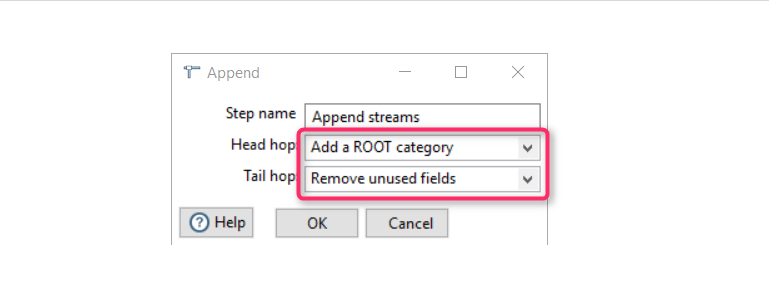

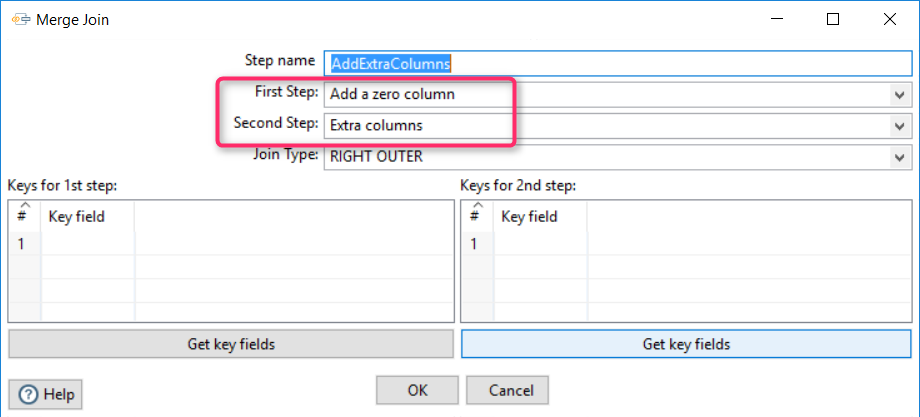

- Merge it with the data from the dataset retrieved from XML file:

- First, create a root category

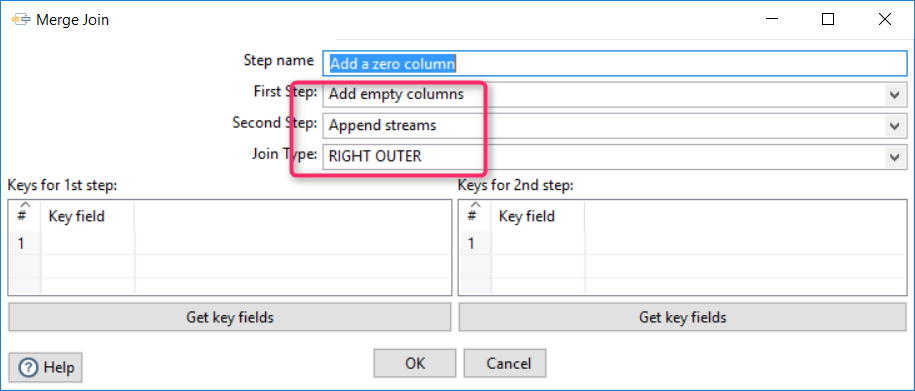

- Add a column in the beginning

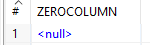

- Add a zero column with no value:

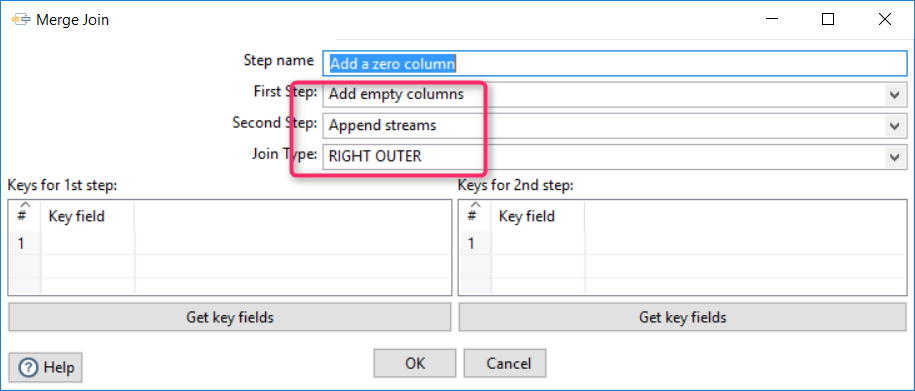

- Join it into the dataset retrieved from XML file:

- Add a zero column with no value:

- Add a couple of extra fields

- Prepare an object with these columns:

- Join them into the dataset from the previous step

- Prepare an object with these columns:

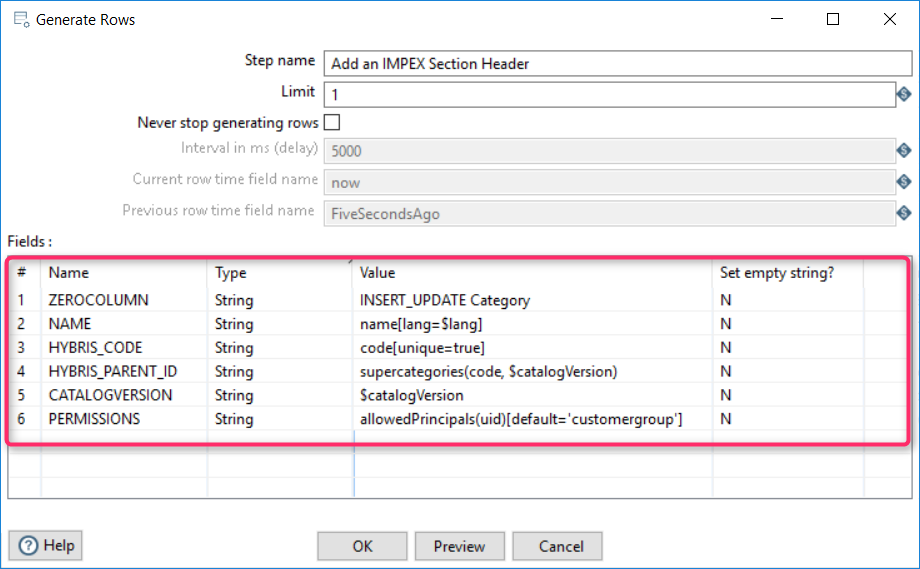

- Add an IMPEX Section Header

- Prepare an Impex Section header

- Merge it with the data from the previous step

- Prepare an Impex Section header

- Apply a template from the external text file. Basically, the template defines an Impex Header:

- Prepare a template

- Merge this header into a dataset from the previous step

- Prepare a template

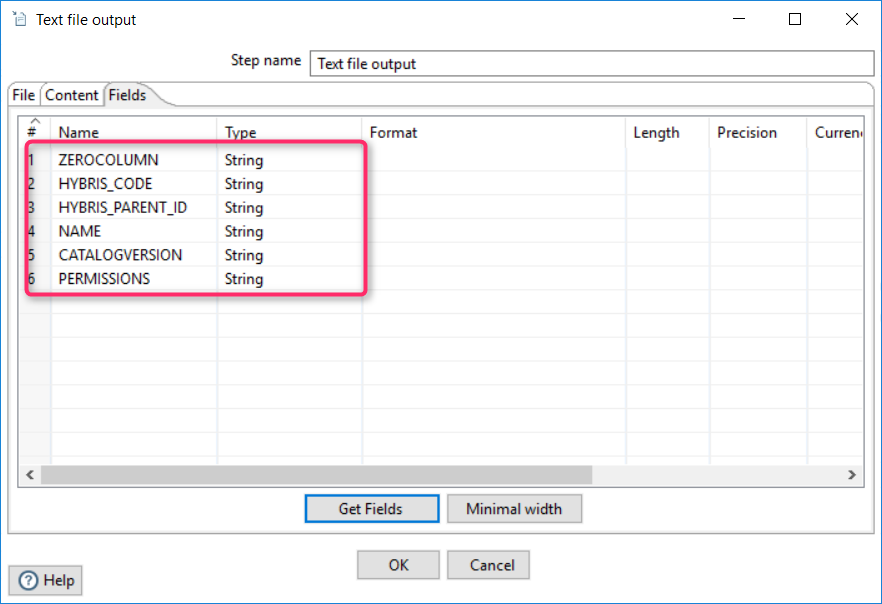

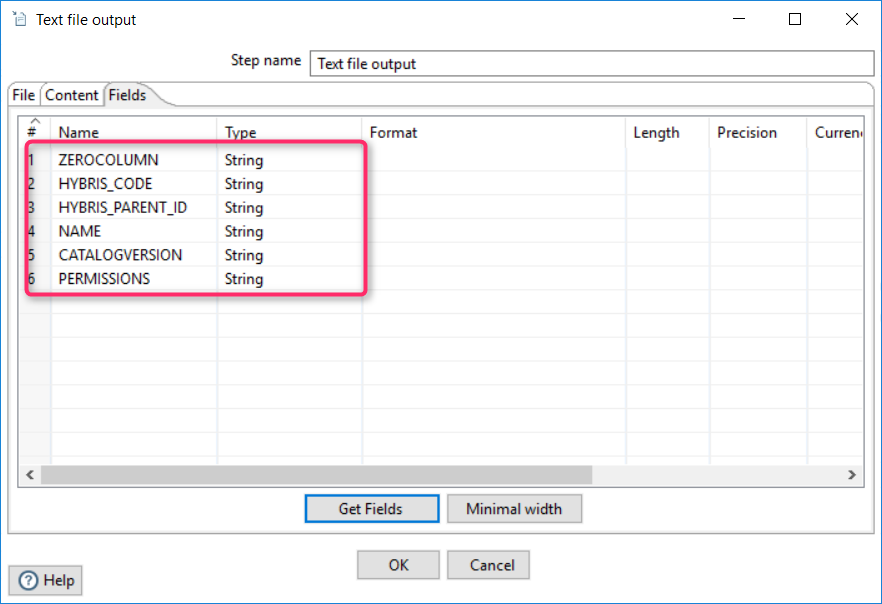

- Convert these data into CSV file.

Read definitions from XML

This step provides the ability to read data from any type of XML file using XPath specifications. It uses DOM parsers that need in memory processing and even the purging of parts of the file is not sufficient when these parts are very big. If you need to process huge files, Pentaho has another XML parsing component, XML Input Stream (StAX). There are three main tabs:- File

- Content

- Fields

XML1 is a constant that is defined in the project configuration. You can also use a filename instead of the variable reference.

Content. The “Loop XPath” defines a root node for the processing. This job processes the headers, so I filter the header section (see XML above).

XML1 is a constant that is defined in the project configuration. You can also use a filename instead of the variable reference.

Content. The “Loop XPath” defines a root node for the processing. This job processes the headers, so I filter the header section (see XML above).

Fields. This section defines what XML tag/attribute values should be mapped with what fields. The rules below say: put a value from name tag into a first column (called “columnname”) and a value of the attribute into the variable “id”.

Fields. This section defines what XML tag/attribute values should be mapped with what fields. The rules below say: put a value from name tag into a first column (called “columnname”) and a value of the attribute into the variable “id”.

As a result, we have a list of category attributes as well as their codes:

As a result, we have a list of category attributes as well as their codes:

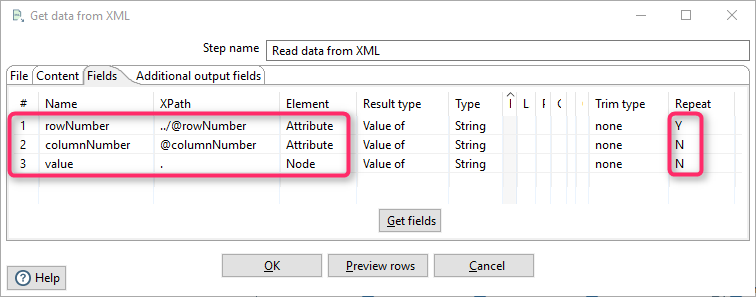

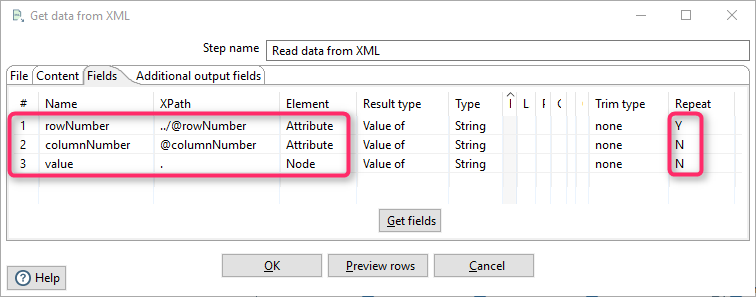

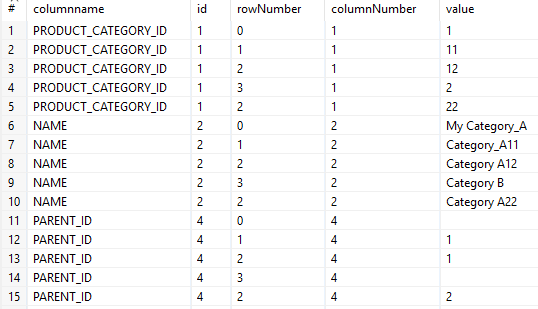

Read data from XML

It uses the same component, but with the slightly different configuration:- XPath is

./table/rows/row/*

- Field configuration:

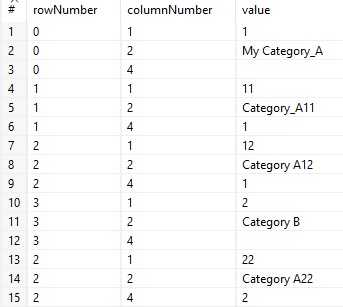

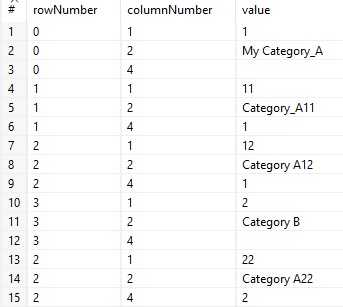

The resulting set looks like:

The resulting set looks like:

For some categories, there is no parent category, so the values of the attribute #4 (PARENT_ID) are empty for them.

For some categories, there is no parent category, so the values of the attribute #4 (PARENT_ID) are empty for them.

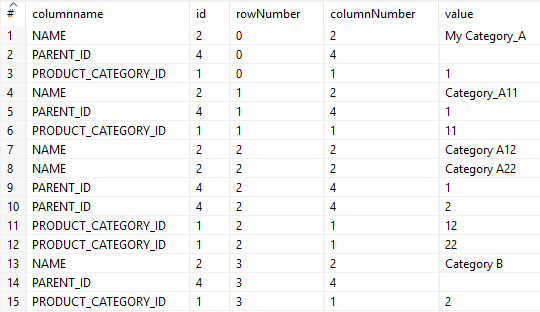

Join Rows

For this task, the component “Joins/Join Rows (cartesian product)” was used. At this step, two previous sets are merged in one. The join condition isid=columnnumber

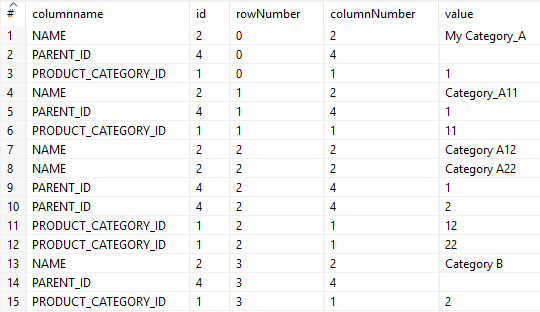

The resulting table is

The resulting table is

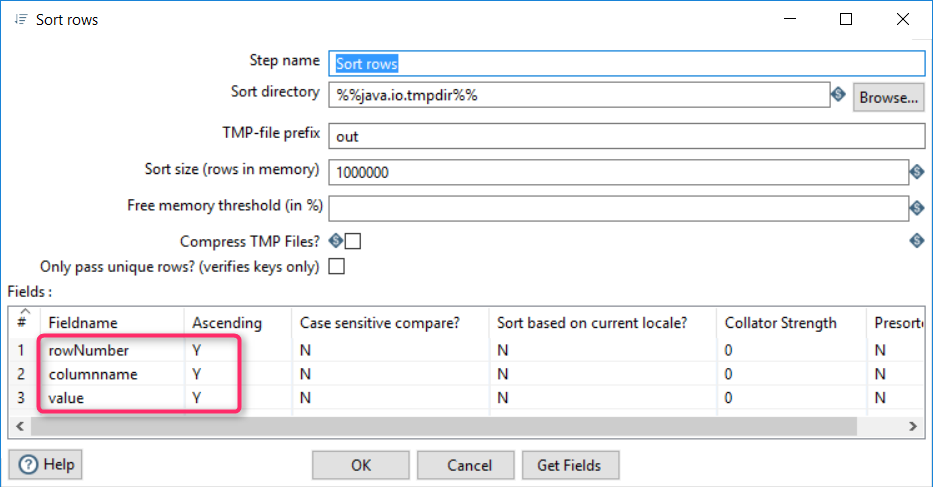

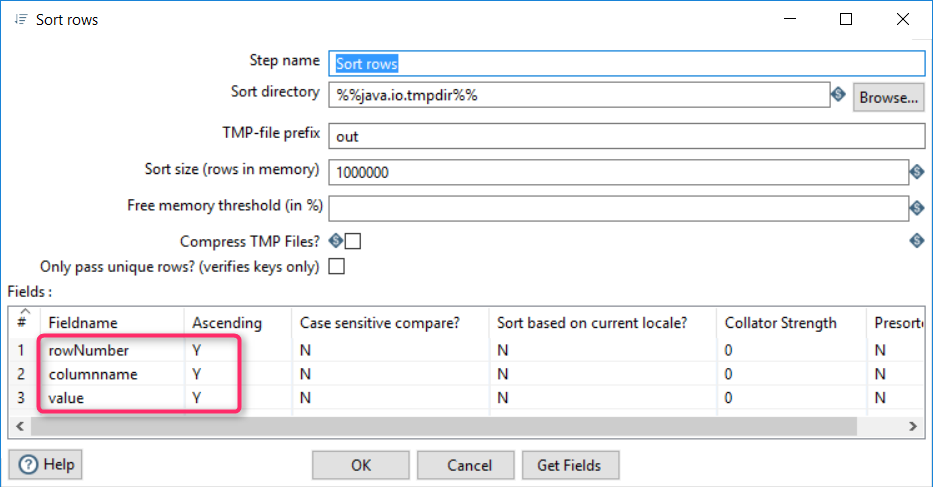

Sorting rows

To get prepared to the Denormalization, we need to sort rows by rowNumber, that put the the same category related attributes together. The result:

The result:

Denormalization

This component converts EAV data into the fields, one per EAV attribute. There are three things we need to configure:- Key field, the field from the source table that contains a name of the attribute.

- Group field, a source category unique id in our case

- Target fields, a mapping rules for each attribute used in the key field values

The resulting set is

The resulting set is

Converting IDs into hybris IDs

According to the task, we add “H_” to the original ID. I used a component called “Transform/Replace in String” and regular expressions. Two new fields are created, HYBRIS_CODE and HYBRIS_PARENT_ID. The result:

The result:

Converting root nodes to H_ROOT

Remove unused fields (columns)

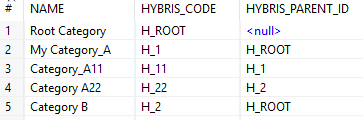

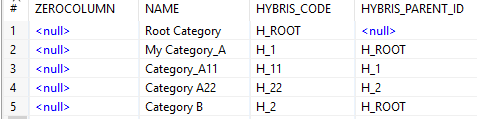

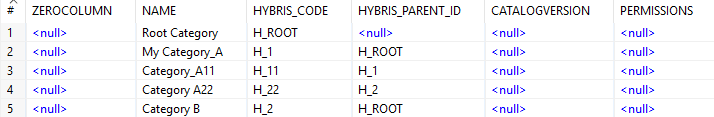

Some fields are no longer used and should be removed from the output. I used a component “Select/Rename values” for this task. After this operation, the resulting set looks like

After this operation, the resulting set looks like

Append a Root category

According to the task statement, we need to add a virtual category, ROOT, and move all categories under ROOT in the tree. I used the component “Input/Generate Rows”.Creating a ROOT category

First, we need to create a root category as a separate set: As a result, the following set is created:

As a result, the following set is created:

Merging ROOT into the original dataset

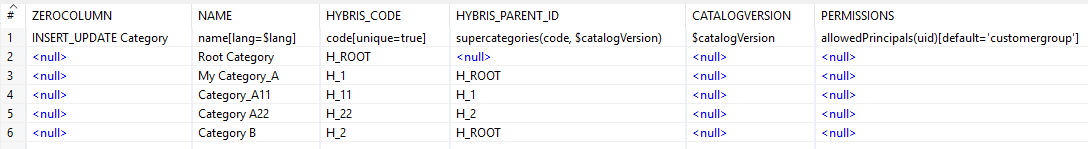

To merge it into the original dataset, the Pentaho component “Flow/Append streams” is used. As a result, Root Category is added at the beginning of the list:

As a result, Root Category is added at the beginning of the list:

Add a column in the beginning

In the resulting ImpEx file, all data starts from the second column. The first column is used for the commands and ImpEx variables. “Input/Generate rows” is also leveraged.Adding a empty column

As a result, a super simple dataset is created:

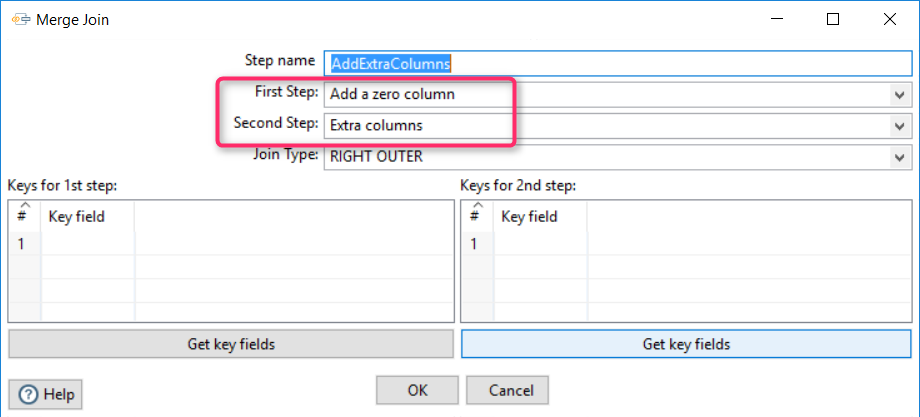

Joining the empty column

Joining the empty column created at the previous step to the original dataset: As a result, an empty column was added in the beginning:

As a result, an empty column was added in the beginning:

Add a couple of extra fields

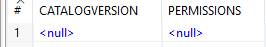

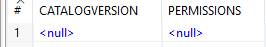

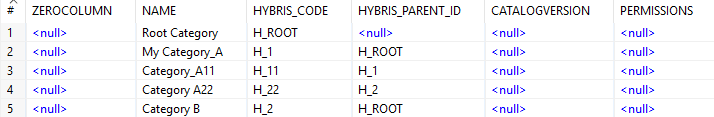

The same exercise is for CATALOGVERSION and PERMISSION columns. The only difference between this step and the previous one is a position of the couple in the resulting dataset. We are going to add it at the end. “Input/Generate rows” again.

- We prepared an object with these columns:

- We joined this couple into the dataset

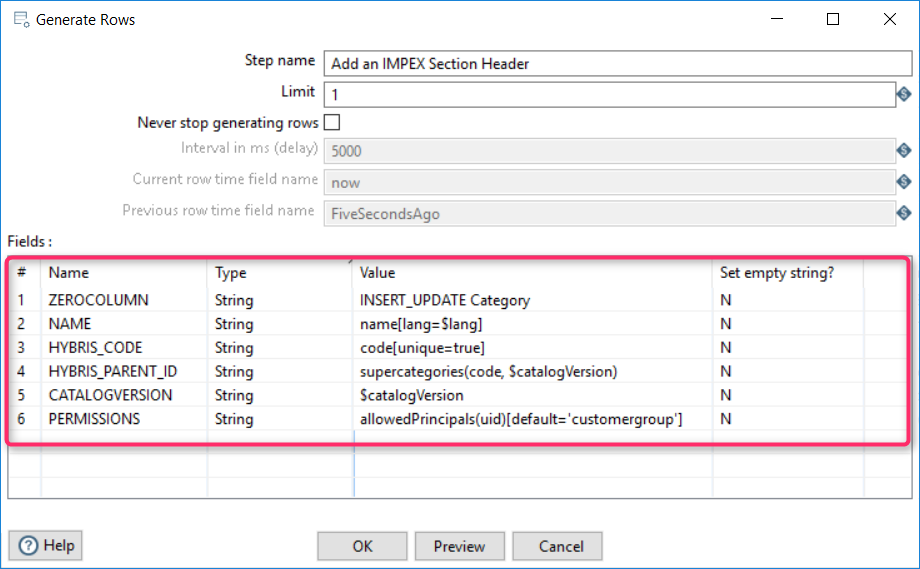

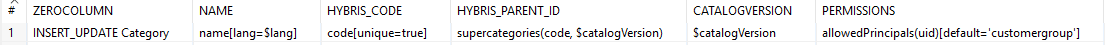

Add an IMPEX Section Header

Similarly to adding a ROOT category, we create an Impex Section Header using “Import/Generate rows”.

- Impex Section header is prepared

- the data from the previous step are merged with the header:

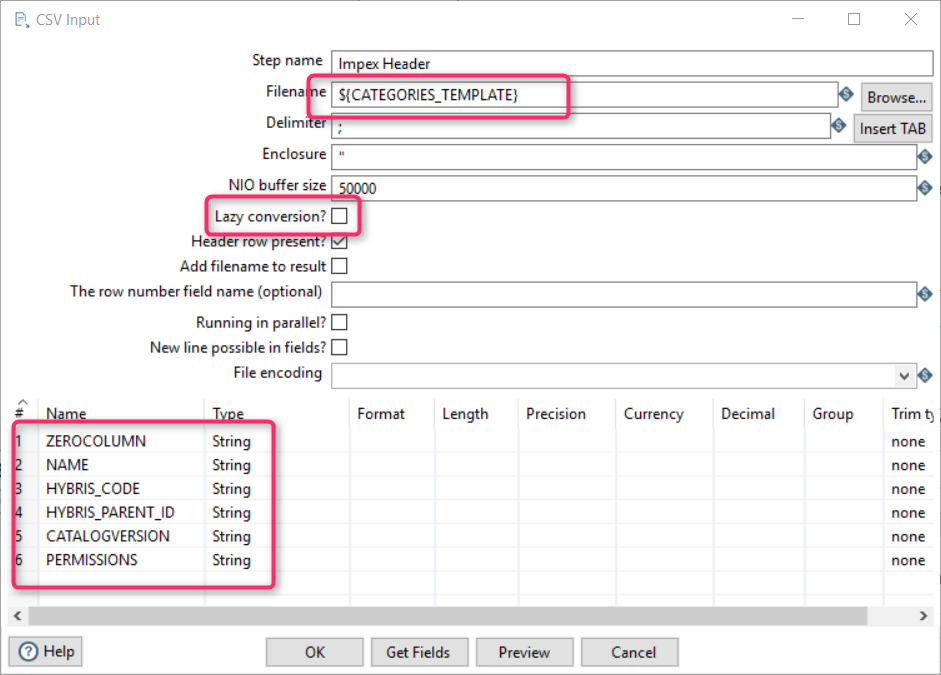

Apply a template from the external text file

To avoid repeating the steps for each new line in the impex, I used a component called “Input/CSV Input” to get the template from the disk. The template contains variable definitions and constants. The following template defines an Impex Header:$productCatalog=testCatalog $catalogVersion=catalogversion(catalog(id[default=$productCatalog]),version[default='Online'])[unique=true,default=$productCatalog:Online] $lang=en

Prepare a template

I use the global variable “CATEGORIES_TEMPLATE”. It is important, the Lazy conversion must be off.Merging this header and the data set

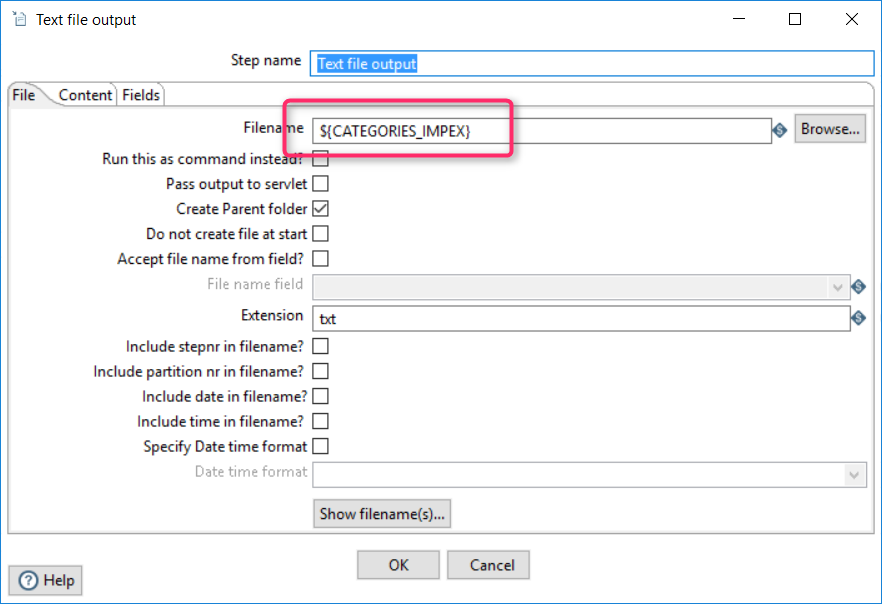

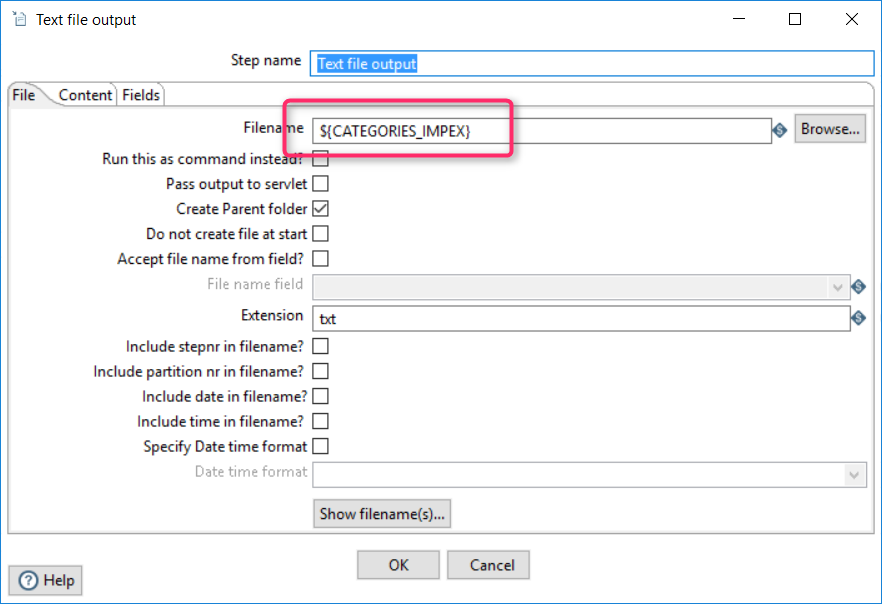

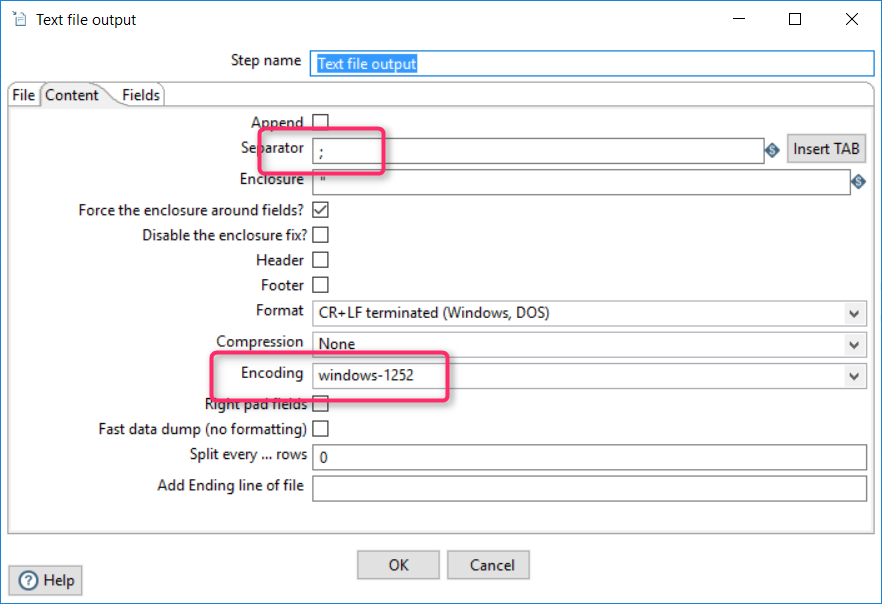

As a result, the header is created, “Transformation/Append streams” is used to concatenate datasets:Convert these data into CSV file

The last step is exporting the dataset into the CSV file. The component “Output/Text file output” is used here. You can change the encoding and the separator according to your preferences and Impex configuration:

You can change the encoding and the separator according to your preferences and Impex configuration:

Executing from the command line

Pentaho has a command line utility called “pan” (pan.bat

pan.sh

Pan /file CSVToImpex.ktr /norep

Rajendra Alapaty

18 January 2017 at 14:29

I’ve used Kettle for similar purposes in my ATG days to convert data in excel sheet to RQL for promotions, sale etc… for bulk update.