Best Practices: Migrating Content to hybris

Introduction

Data migration, as a fundamental aspect of projects on modernizing legacy systems, has been recognized to be a challenging task that may result in failed projects as a whole. One of main reasons for time and budget overrun is the lack of a well-defined methodology that can help handle the complexity of data migration tasks. The process of data migration is tightly linked to the project scope. That explains why there is no universal tool that could be used for all situation. However, some ideas, concepts, architecture, and even components could be reused to make the process smoother and predictable. In this article I tell you about my own experience and my personal vision on the topic. I’ve been testing it in different projects where I was involved in for last 15 years. Data migration tools have been implemented by my teams and me in different programming languages, from Perl to Java.Business process

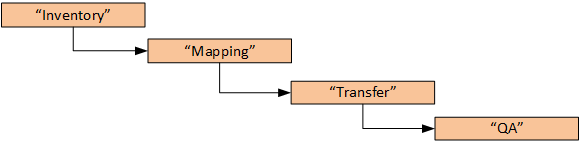

From a business process perspective, there are four sequential phases: Inventory phase defines

Inventory phase defines

- What data is moving,

- What data we can get rid of,

- How it can be grouped,

- What data requires special handling,

- What data requires changes

- How volatile is the data etc.

Since we are talking about e-commerce, you are likely going to migrate at least the following data:

|

- How is data going to “fit” and work in hybris?

- How is the overall structure of the data going to transfer?

The following points should be considered if you deal with CMS/rich text content:

|

- Internal technical QA

- Client review, editorial QA

Data transfer phase

Data migration moves data from legacy systems into new systems. Data sources in the new system can have different structures and limitations. There are several issues that can considerably complicate this process:- Legacy systems may have a number of heterogeneous data sources. These data sources are interconnected but designed by different teams, in different time, using different data modeling tools or interpreted under different semantics. In addition to that they might be used differently than they were originally intended to be used by design.

- Legacy systems may have inaccurate, incomplete, duplicate or inconsistent data, and new systems may also require additional semantic constraints on data after being migrated. Thus, bringing the quality of data up to standard of new systems can be costly and time-consuming.

- Many data migration tasks such as data profiling, discovery, validating, cleansing, etc. need to be iteratively executed in a project and specification changes frequently happen in order to repair detected problems.

- Extract – the process of reading data from a specified source and extracting a desired subset of data. The extraction phase largely deals with the technical heterogeneity of the different sources and imports relevant data into a staging area.

- Transform. – the process of converting the extracted/acquired data from its previous form into the form it needs to be in so that it can be placed into another database. The transformation phase is the heart of an ETL process. Here, syntactical and semantical heterogeneities are overcome using specialized components, often called stages:

- All data is brought into a common data model and schema;

- Data scrubbing and cleansing techniques standardize the data;

- Aggregating or combining data sets;

- Duplicate detection algorithms etc.

- Load. This phase loads the integrated, consolidated, and cleaned data from the staging area into hybris.

Off the shelf solutions

![Pentaho_new_logo_2013[1].png](http://hybrismart.com/wp-content/uploads/2017/01/pentaho_new_logo_20131.png) There is a number of the Off-the-shelf software. I am going to tell more about one of them, Pentaho Comminity Edition, but not in this article. Using Pentaho with hybris is a large topic which deserves a separate article.

As for the off the shelf products in general, the majority of off-the-shelf solutions are too heavy and expensive for using in hybris projects. Sometimes, the barriers to entry are too high for this market. However, for large projects, using off-the-shelf ETL tools might be a best option.

So I’ll explain the details in a separate article on the blog (next week?). It has already been preparing and will be published on the blog soon.

The approach explained in this article works well for small and medium projects. If the transformation rules are comprehensive, creating the data migration tool from scratch may work much better than using an out-of-the-shelf software.

There is a number of the Off-the-shelf software. I am going to tell more about one of them, Pentaho Comminity Edition, but not in this article. Using Pentaho with hybris is a large topic which deserves a separate article.

As for the off the shelf products in general, the majority of off-the-shelf solutions are too heavy and expensive for using in hybris projects. Sometimes, the barriers to entry are too high for this market. However, for large projects, using off-the-shelf ETL tools might be a best option.

So I’ll explain the details in a separate article on the blog (next week?). It has already been preparing and will be published on the blog soon.

The approach explained in this article works well for small and medium projects. If the transformation rules are comprehensive, creating the data migration tool from scratch may work much better than using an out-of-the-shelf software.

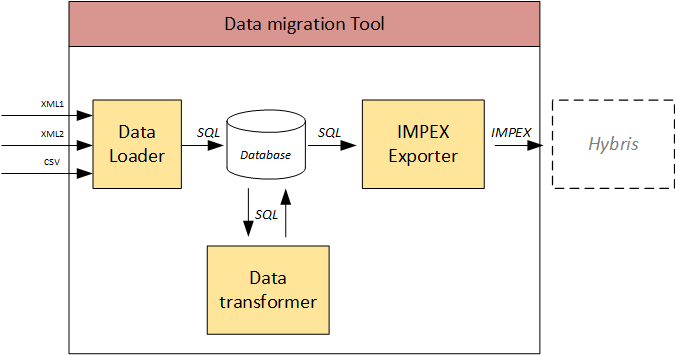

Architecture

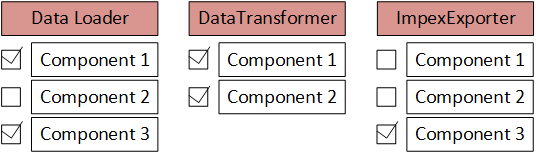

These three modules covers Extract, Transform and Load phases of the process. Each module is configurable. It consist of the module components:

Each module is configurable. It consist of the module components:

By default, all components are executed one after another in the configured order. However, some components could be skipped. It makes the system scalable and manageable. Components are generally custom. However, they share some common code that is part of the module.

Database is used as a staging area for the loaded data.

All modules support rerunability. Meaning that, if the the process fails half way through the batch, the module can be run again with the updated input data sets or configuration.

By default, all components are executed one after another in the configured order. However, some components could be skipped. It makes the system scalable and manageable. Components are generally custom. However, they share some common code that is part of the module.

Database is used as a staging area for the loaded data.

All modules support rerunability. Meaning that, if the the process fails half way through the batch, the module can be run again with the updated input data sets or configuration.

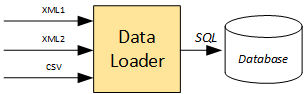

Data loader

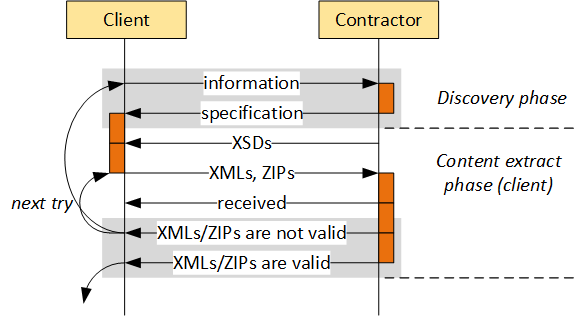

During the extract phase of ETL, the desired data sources are identified and the data is extracted from those data sources. In my solution, the data loader converts input text files (XML) into the database create/insert statements (SQL). It looks like a very basic solution, but for the small and medium projects my experience shows that it is good enough. Input files. As a rule, there is a milestone in the project, when the input files (data extracts) are provided to the developer according to the agreed specification (formats, data structures etc.). It is virtually impossible to get right data from the first try, so you need to take into the consideration that this phase needs to be iteratively executed in a project. SQL statements are created in the folder called

SQL statements are created in the folder called

output.

- XML files. It is the most convenient format for the data loader. XML Schemas help to constrain the content and prevent mistakes in data.

- CSV files. I recommend using CSV files only for very large amount of data of very simple structure. CSV files are good only when your data is strictly tabular and you 100% know its structure and this structure won’t be changed.

- ZIP files. For binary content referenced from an XML: images, digital assets, documents.

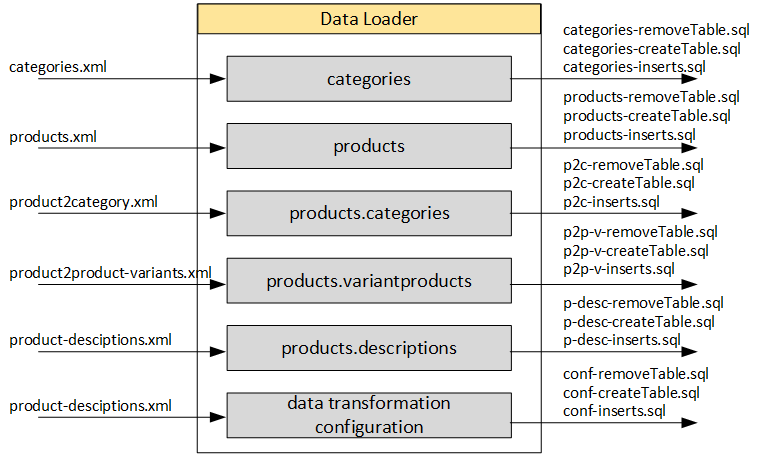

Output files are SQL statements. They include three sections:

Output files are SQL statements. They include three sections:

- Remove tables

- Create tables

- Insert data

Each components should do only one thing (single responsibility principle). No data transformation. No merging or splitting. The module is basically a text formats converter: it reads one text file (XML, CSV) and create another text file (SQL). ZIPs are loaded by copying into a folder that is used by Data Transformer.

In my solution, the structure of the tables based on the structure of XMLs. If you add a field into the XML, the database field will be automatically added into

Each components should do only one thing (single responsibility principle). No data transformation. No merging or splitting. The module is basically a text formats converter: it reads one text file (XML, CSV) and create another text file (SQL). ZIPs are loaded by copying into a folder that is used by Data Transformer.

In my solution, the structure of the tables based on the structure of XMLs. If you add a field into the XML, the database field will be automatically added into

-createTable.sql and <code>-inserts.sql

./data-loader -enable categories,products,product.descriptions ./data-loader -enable all ./data-loader -enable all -disable products,categoriesIn order to execute SQLs I use a separate app. The full cycle looks like

./cleanSQLs ./data-loader -enable all ./executeSQLAfter this phase, the database has been created, all data have been uploaded into the database. It is a turn of the data transformer.

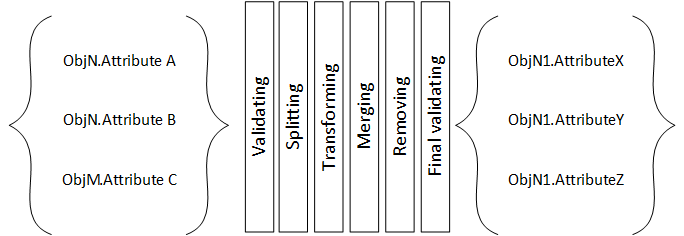

Data transformer

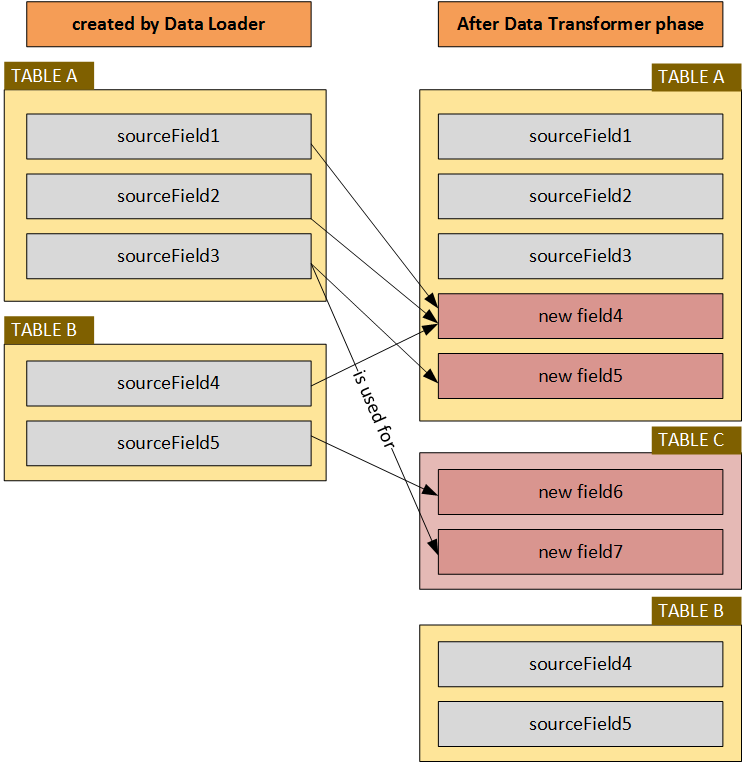

The transform step applies a set of rules to transform the data from the source to the target. In my solution, the data transformer module

In my solution, the data transformer module

- creates additional tables or/and fields (if needed)

- adds indexes (if needed)

- extract data from the tables and/or fields

- transforms the data according to agreed logic

- saves the transformed data into new tables and/or fields

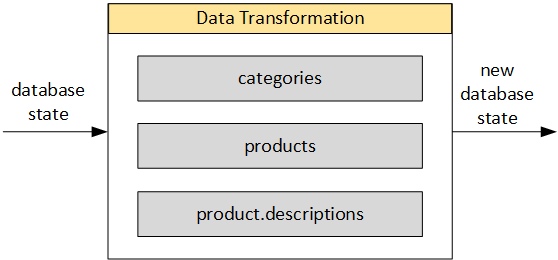

In my solution, the data tranformation module also works with configurable data loader components, which can be executed separately or in groups. For example, in my last project the following components are used:

In my solution, the data tranformation module also works with configurable data loader components, which can be executed separately or in groups. For example, in my last project the following components are used:

The same concept of single responsibility is used here for the components: they should do one specific thing (responsibility) and not trying to do more than it.

The same concept of single responsibility is used here for the components: they should do one specific thing (responsibility) and not trying to do more than it.

Images and links

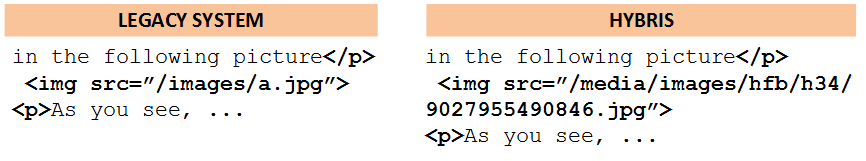

One of the challenging topics is converting embedded images and links into hybris media and page URLs.

At the data transformation phase new URLs of images are not known because they haven’t uploaded to hybris yet.

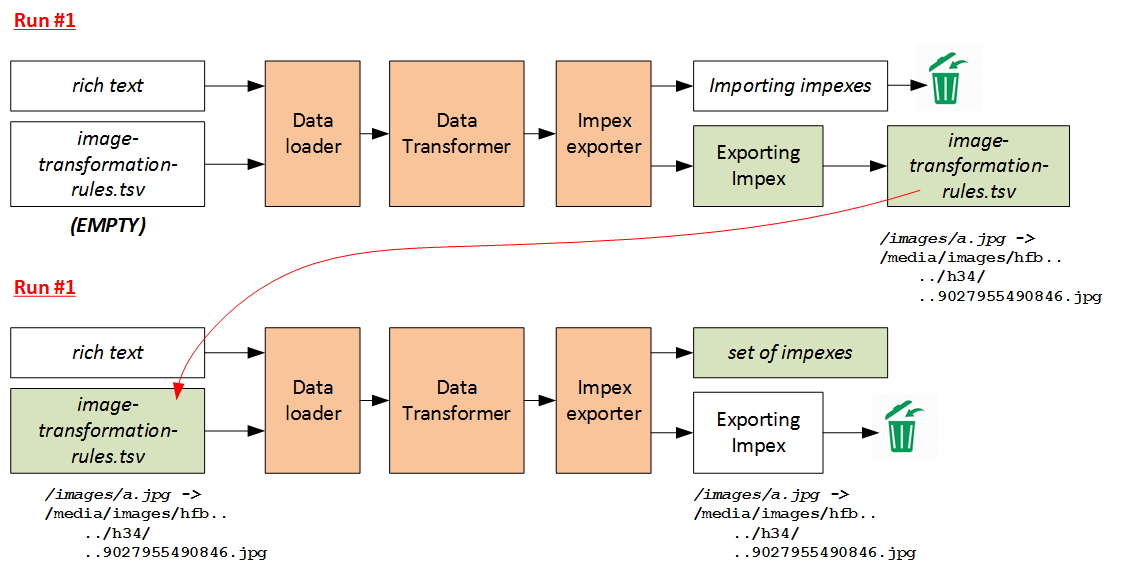

In my solution, the conversion is performed in two phases. First phase ends with the data (ImpEx: Export) that are used on the second phase. The following diagram is self-explanatory:

At the data transformation phase new URLs of images are not known because they haven’t uploaded to hybris yet.

In my solution, the conversion is performed in two phases. First phase ends with the data (ImpEx: Export) that are used on the second phase. The following diagram is self-explanatory:

|

./data-transformation -enable categories,products ./data-transformation -enable all ./data-transformation -enable all -disable products,categoriesAlong with the previous phase,

./cleanSQLs ./data-loader -enable all ./executeSQL ./data-transformation -enable allAfter this phase, the database has been updated, the data are cleansed, enriched, the duplicates are removed etc. It is a turn of the impex exporter.

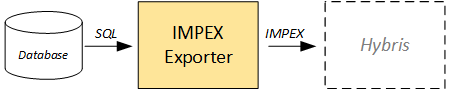

Impex Exporter

Exporter module creates a set of ImpEx files to load the data received from the previous two steps into hybris. There is no any data modification, data in output files is exactly the same as data in the database. If any transformation is needed, it should be performed earlier.

There is a set of components or their groups that can be enabled or disabled from the command line. The output files are placed into

There is no any data modification, data in output files is exactly the same as data in the database. If any transformation is needed, it should be performed earlier.

There is a set of components or their groups that can be enabled or disabled from the command line. The output files are placed into

output

Video

<iframe src=”https://player.vimeo.com/video/199095008″ width=”640″ height=”360″ frameborder=”0″ webkitallowfullscreen mozallowfullscreen allowfullscreen></iframe>© Rauf Aliev, January 2017

nitin

12 January 2017 at 11:51

very informative , thanks for publishing these detailed steps

sergeyshimansky

18 January 2017 at 07:12

Great job, Rauf! Very valuable, thanks a lot.

hybris e-commerce

8 February 2017 at 09:41

Thank you for sharing this priceless experience. We use Hybris platform in our projects and your article was very informative and useful!