A Deeper Look at SAP Commerce Data Hub

SAP Commerce Data Hub is an ETL integration platform introduced by SAP in September 2014 as part of Hybris 5.3. Today it is being decommissioned in favor of better alternatives, but many teams still use it and may benefit from these insights.

In this article, I am going to dissect Data Hub and turn it inside out. At this point it is more or less a post-mortem, but for those who still use Data Hub, it may be a good complement to the official documentation.

I must say that nobody liked Data Hub, despite all the efforts made by SAP, and I think we put up with it for too long. It was the only SAP-aware integration platform that came with Hybris (later SAP Commerce), and it was the tool the vendor recommended for master and transactional data replication.

A Quick Overview of Key Concepts

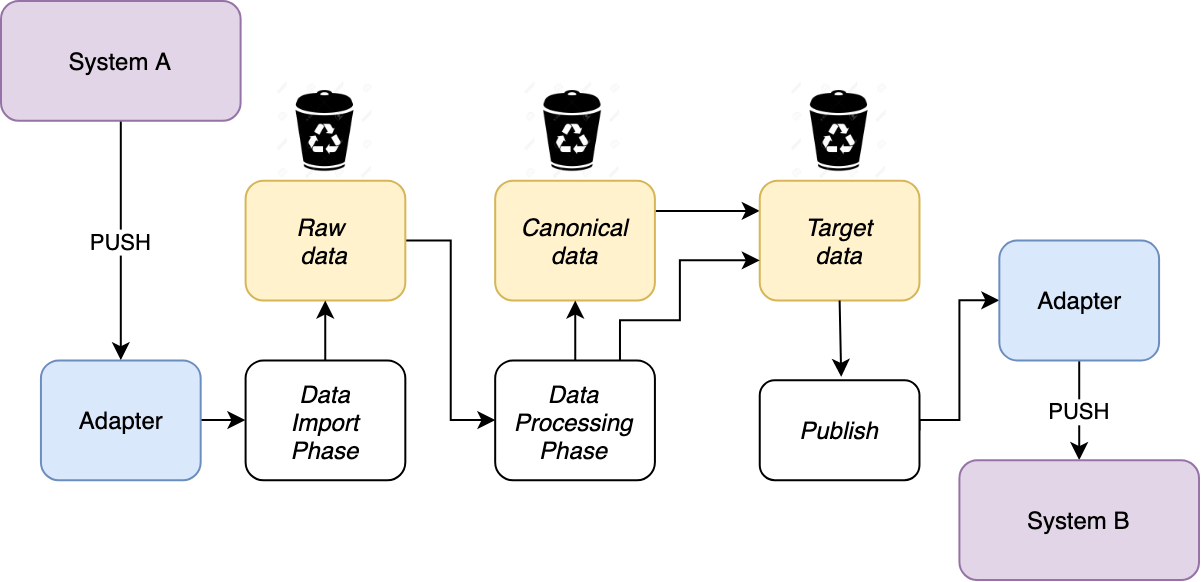

As mentioned above, Hybris Data Hub was introduced in 2014 as a complementary tool for inbound and outbound data replication. It was designed specifically for Hybris Commerce, to make it easy to import and export data between Hybris and external data storage systems, but architecturally it was a platform-agnostic tool with a set of plugins. These plugins, or extensions, were aware of SAP Commerce and ERP integration interfaces, protocols, data models, and data formats. The pre-built integrations came either as part of the Commerce Data Hub distribution or as standalone integration packages (for example, SAP for Retail). There were also extensions from third-party vendors, such as the ItemSense IoT Connector. Despite its complexity, Commerce Data Hub was a common integration tool, and many customers still use it today.

Data Hub extensions are separate apps deployed on a Tomcat server along with the Data Hub core. The core itself is built around Spring Integration, so Commerce Data Hub can be understood as a Spring Integration-powered platform equipped with a configuration-driven staging area and flexible data models.

Data Hub is designed only for asynchronous data integration. This is a so-called fire-and-forget model: the data does not have to be moved immediately but can be moved at a later point in time. Data Hub registers all data packets received from partner systems and processes them asynchronously, an approach aimed at better scalability. In Data Hub, there are three processing phases:

- LOAD or data import phase

- COMPOSITION or data processing/transformation phase

- PUBLISH or data exporting phase

Data Hub doesn’t remove any items during these phases. Cleanup is handled by dedicated procedures outside the Data Hub data processing flow.

At the PUBLISH phase, Commerce Data Hub sends the data from the “target items” to the target system via the outbound adapter.
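The following Python sketch is a toy model of the three phases and of the rule that items are not deleted during processing. It is not Data Hub code: the `Item` and `StagingArea` classes, the status strings, and the `cleanup` function are hypothetical, and real composition is driven by configured grouping and composition handlers rather than a single merge key.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Item:
    """A staged record. Its status changes; the item itself stays in the staging area."""
    kind: str                   # "raw", "canonical" or "target" (hypothetical labels)
    data: dict
    status: str = "PENDING"     # hypothetical status values


@dataclass
class StagingArea:
    items: list = field(default_factory=list)

    def load(self, records):
        """LOAD: store every incoming record as a raw item."""
        for record in records:
            self.items.append(Item("raw", dict(record)))

    def compose(self, key_fields):
        """COMPOSITION: merge pending raw items that share a key into canonical items."""
        groups = defaultdict(dict)
        for item in self.items:
            if item.kind == "raw" and item.status == "PENDING":
                key = tuple(item.data[name] for name in key_fields)
                groups[key].update(item.data)
                item.status = "PROCESSED"          # marked, not removed
        for merged in groups.values():
            self.items.append(Item("canonical", merged))

    def publish(self, send):
        """PUBLISH: copy pending canonical items into target items and send them."""
        for item in list(self.items):              # snapshot: target items get appended
            if item.kind == "canonical" and item.status == "PENDING":
                target = Item("target", dict(item.data))
                self.items.append(target)
                send(target.data)                  # stands in for the outbound adapter
                item.status = target.status = "PUBLISHED"


def cleanup(area):
    """Separate housekeeping step, outside the three phases."""
    area.items = [item for item in area.items if item.status == "PENDING"]
```

After `publish`, the staging area still holds the raw, canonical, and target items; only an explicit `cleanup` call removes them, which mirrors the separate cleanup procedures described above.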

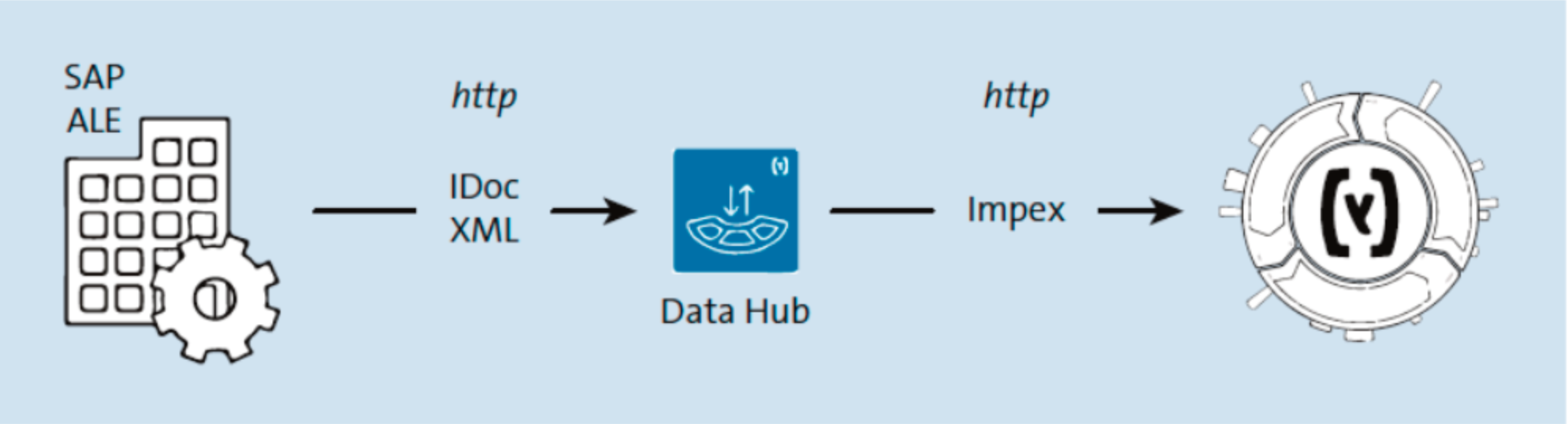

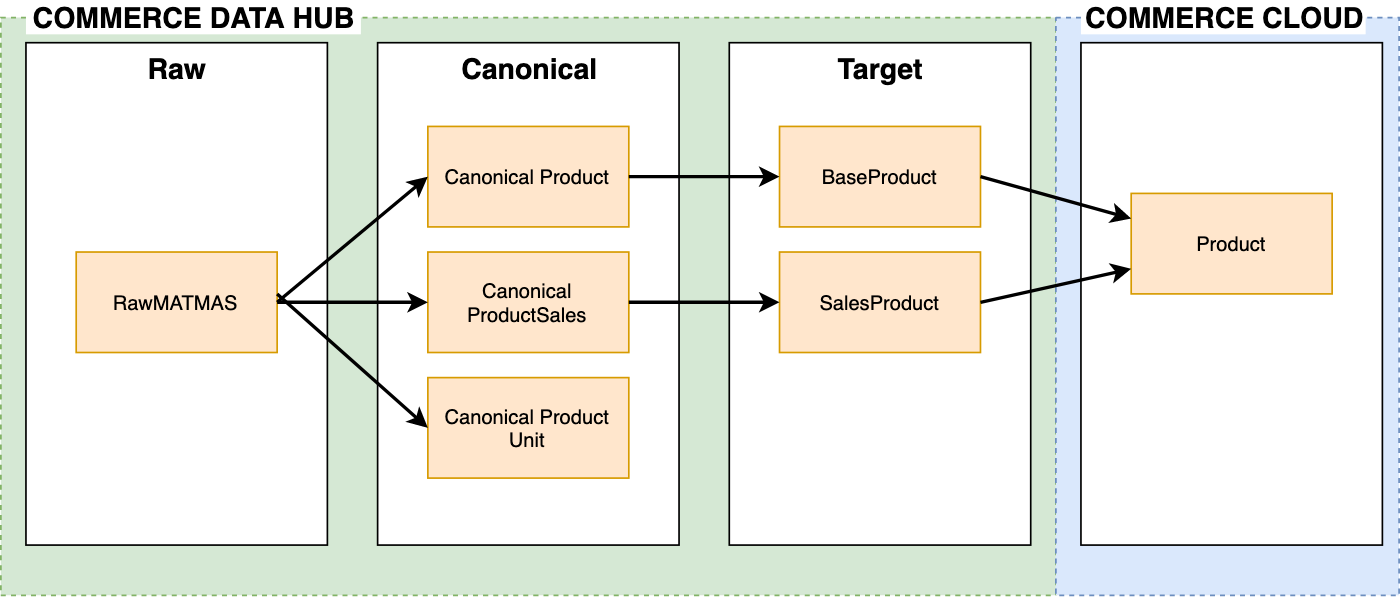

Integration with SAP ERP

For asynchronous ERP integration, Data Hub uses an IDoc connector. For SAP Commerce integration, it uses a combination of the ImpEx outbound adapter (on the Data Hub side) and the datahubadapter extension (on the SAP Commerce Cloud side). The following diagram shows one of the scenarios covered by the ERP integration module: replicating master data from SAP ERP to SAP Commerce. There is also a flow in the opposite direction, for example, for sending orders from SAP Commerce to SAP ERP. Such bidirectional flows raise the risk of data inconsistencies caused by cross-system changes, a risk made worse by the lack of data maintenance and debugging tools in Commerce Data Hub.

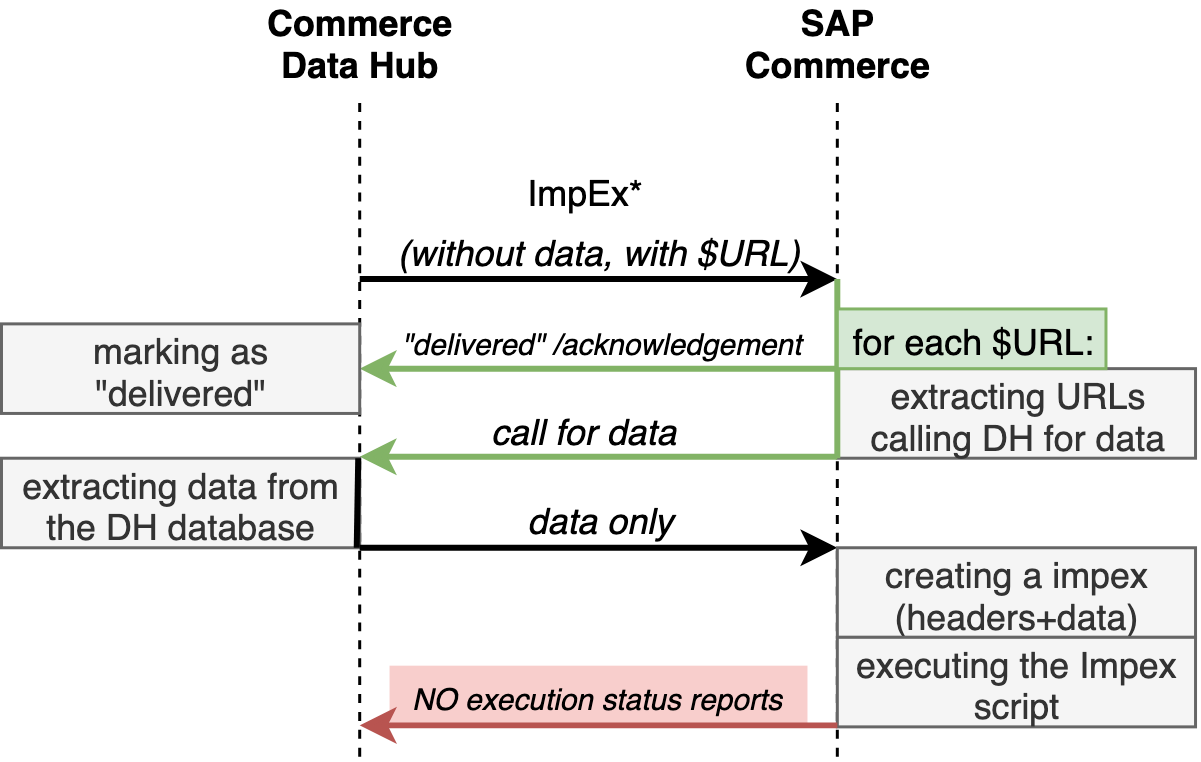

Particularly noteworthy is the way the generated ImpEx scripts are fed into SAP Commerce. This happens at the publish phase, where the system creates ImpEx scripts from the target items and pushes them to SAP Commerce. Out of the box, SAP Commerce doesn’t provide a platform-agnostic integration interface for running ImpEx scripts, but for Data Hub there is a special module for this purpose. This module introduces a special “macro” to separate commands from data. Normally, an ImpEx script has one or more headers (“UPDATE Item;uid[unique=true];name”), each followed by a data block (“;1;name1”, “;2;name2”). For the Data Hub integration, the extension introduces a “$URL:..” macro, which means “make a call to pull the data”.

```
INSERT_UPDATE B2BUnit;;Name;description;active;uid[unique=true];buyer;locName
#$URL:http://localhost:8080/datahub-webapp/v1/core-publications/2045/Company.txt?targetName=HybrisCore&locale=&fields=b2bUnitName,description,active,uid,isBuyer,locName&lastProcessedId=0&pageSize=1000
```

SAP Commerce Data Hub sends a kind of “there is data” message along with the structure of that data, and SAP Commerce then pulls the data asynchronously to build a valid ImpEx script.
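Because the macro is an ImpEx comment line that carries a URL, its parameters can be inspected with Python’s `urllib.parse`. The sketch parses the example URL above. `parse_datahub_macro` is a hypothetical helper, and treating `fields` as the column order and `lastProcessedId`/`pageSize` as paging parameters is an assumption based on their names, not on documentation.

```python
from urllib.parse import parse_qs, urlsplit

MACRO_PREFIX = "#$URL:"


def parse_datahub_macro(line):
    """Split a '#$URL:' ImpEx comment into its endpoint path and query parameters."""
    if not line.startswith(MACRO_PREFIX):
        return None                                   # an ordinary ImpEx line
    url = urlsplit(line[len(MACRO_PREFIX):].strip())
    params = parse_qs(url.query, keep_blank_values=True)
    return {
        "path": url.path,
        "target": params["targetName"][0],
        # presumably the column order of the rows to be pulled (assumption)
        "fields": params["fields"][0].split(","),
        # presumably paging parameters (assumption)
        "last_processed_id": int(params["lastProcessedId"][0]),
        "page_size": int(params["pageSize"][0]),
    }
```

For the example above, the helper returns the publication path `/datahub-webapp/v1/core-publications/2045/Company.txt`, the target `HybrisCore`, and six field names matching the six named header columns.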

From my perspective, this approach is controversial and unreliable. For example, Data Hub can be overloaded, and after a configurable number of attempts (three by default), SAP Commerce stops requesting the data. This situation is hard to detect because Data Hub marks the data replication as successful when in fact it is not. The only monitoring console is on the Data Hub side, and it is not enough for complex cases.

The documentation is generally good, but many aspects, like the one mentioned above, are still underdocumented.
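The failure mode above comes down to what happens when the retries run out. The sketch below is not the datahubadapter implementation. `fetch` is a hypothetical callable standing in for the HTTP call to Data Hub, and the default of three attempts mirrors the default mentioned above. The deliberate difference is that exhausting the retries raises an error instead of leaving a replication that only looks successful.

```python
import time


class PullFailed(Exception):
    """Raised when the data behind a $URL macro could not be pulled."""


def pull_with_retries(fetch, url, attempts=3, delay_seconds=0.0):
    """Call fetch(url) up to `attempts` times; raise instead of failing silently."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except OSError as error:   # urllib's URLError and socket timeouts are OSErrors
            last_error = error
            if attempt < attempts:
                time.sleep(delay_seconds * attempt)     # simple linear backoff
    raise PullFailed(f"{url}: gave up after {attempts} attempts") from last_error
```

Surfacing the failure this way, whether as an exception, an alert, or a status written back to Data Hub, is what the out-of-the-box flow lacks.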

IDocs

IDocs are the central import and export format of SAP ERP systems and are used for asynchronous transactions. Each generated IDoc is a self-contained structure (it can be represented as a text file) that can be transmitted to the requesting system without a connection to the central database. One of the representations of an IDoc is XML.

For master data replication, SAP ERP sends IDocs over an HTTP connection to the Data Hub server. The IDocs are received by the Data Hub IDoc adapter, which creates Spring Integration messages (idocXmlInboundChannel). A Spring Integration router then routes the messages to IDoc-specific channels, such as MATMAS or ORDERS05. These channels are read by service activators, which use the mapping services provided by the Data Hub integration extensions, such as sapproduct or sapcustomer.

In general, IDoc client interfaces can vary in complexity. The one used in Data Hub is the simplest: HTTP-based with an XML payload. This simplifies debugging and maintenance, since the IDoc payload is treated as XML-formatted text with a particular set of segments and attributes. However, the incoming XML documents are not stored anywhere, which makes it hard to understand what went wrong when a data integration problem arises.

There is no documentation saying which IDoc components (segments, attributes) are processed and which are ignored. This can be extracted from the Data Hub extensions and Java code, but you will find it pretty messy. Nor is there documentation saying which attributes are mapped to which target items (canonical or SAP Commerce Cloud attributes) and which data transformations are applied. One may say that the configuration (Data Hub and Spring XML) is self-explanatory, but it is not, because part of the logic lives outside the XML, in the Java code. In many cases, the order of the IDocs is important for the integration processes.
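The routing step described above (one inbound channel, a router, and IDoc-specific channels) can be pictured with a plain-Python dispatch table. This is only an illustration of the flow’s shape. It assumes the IDoc type can be read from the root element of the XML payload (for example, `MATMAS05`) with the trailing version digits stripped, and the handlers are hypothetical stand-ins for the Spring service activators.

```python
import re
import xml.etree.ElementTree as ET


def idoc_type(xml_payload):
    """Return the IDoc type from the root tag, e.g. 'MATMAS05' -> 'MATMAS'."""
    root = ET.fromstring(xml_payload)
    return re.sub(r"\d+$", "", root.tag)


def route(xml_payload, handlers):
    """Dispatch a payload to the handler registered for its IDoc type."""
    kind = idoc_type(xml_payload)
    handler = handlers.get(kind)
    if handler is None:
        # Unknown types are reported instead of being dropped silently.
        raise ValueError(f"no channel configured for IDoc type {kind}")
    return handler(xml_payload)
```

In Data Hub, this wiring lives in Spring XML configuration and Java classes; the dictionary of handlers only plays the role of the router and its channels.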
The order Data Hub expects is not guaranteed because of the asynchronous nature of IDocs and Data Hub, which may cause issues with data replication. If something goes wrong, the items are left unprocessed without a clear explanation of why. Over time, this may clutter the staging area and lead to wrong or delayed data replication.

Text fields in IDocs are typically limited to 18 or 40 characters, and there is also a limit for the whole segment (1000 characters). In some ERPs, there are customer-specific customizations to handle long texts, such as product descriptions or long product names. Of course, none of this is supported by Data Hub out of the box, so it is a frequent subject of Data Hub customization.

Every IDoc consists of a control record (EDI_DC40) followed by data records, or segments, organized in a hierarchy. A segment often represents a table in the ERP data model. For example, the MATMAS IDoc, used for product master data (MATerial MASter), has an “E1MARMM” segment, which is an extract from the MARM table where units of measure are stored; “E1MVKEM” represents the “MVKE” table, which holds material sales data, and so forth.

IDoc data should be screened carefully. It is important to keep only those IDocs and segments that require Data Hub processing, because extraneous IDocs or segments can seriously affect Data Hub performance.

Commerce Data Hub doesn’t come with any sample IDocs, and it is not easy to find sample IDocs on the Internet just to perform a test run of the out-of-the-box Data Hub integration. You need to configure your SAP ERP and SAP Commerce for a test run.

Integration Scenarios Covered in This Article

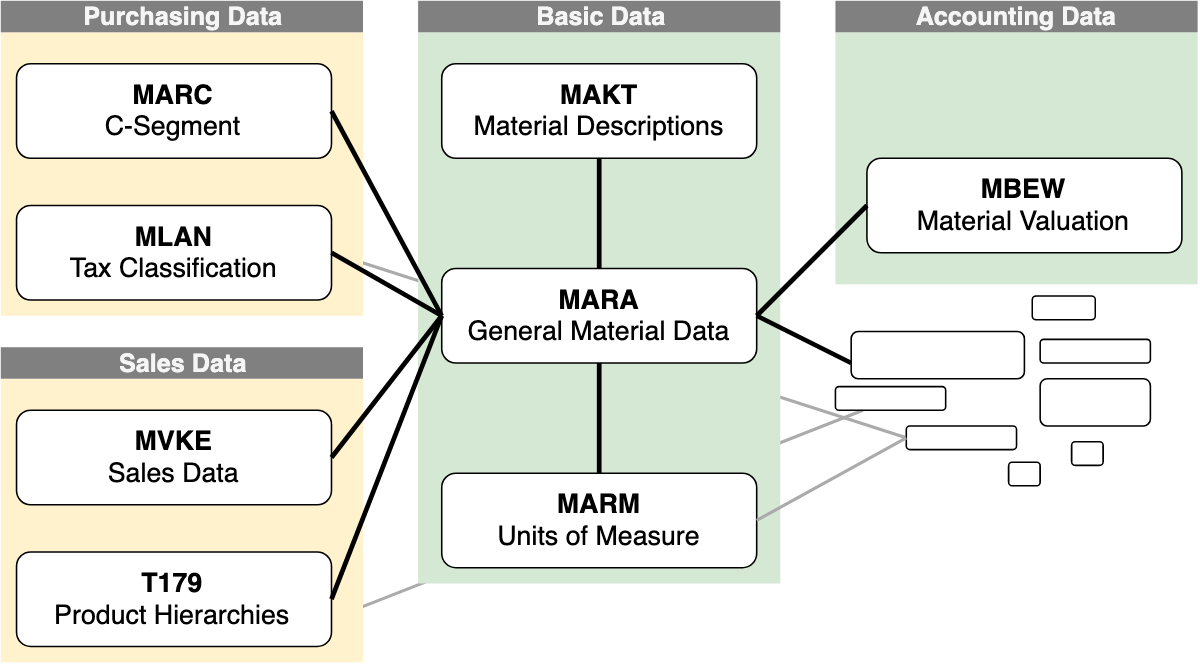

To explain the Data Hub concepts, I decided to use only one integration scenario: material data replication from ERP to SAP Commerce. Other topics, such as customer, pricing, or order data replication, are intentionally left out. I also intentionally excluded inventory (LOISTD IDoc) from the material data integration because this challenging topic deserves a separate discussion. I will probably come back to these topics in follow-up articles if there is demand.

Material Data Model in SAP ERP

The internal structure of the ERP data model is not well documented. Below is a quick overview of the data relations, to help you understand the Data Hub data transformations explained in the next sections. In SAP ERP, the following tables are considered together as a material master set:

- MARC (Plant Data for Material)

- MAKT (Material description)

- MARA (Material Master General data)

- MLAN (Material Master Tax Classification)

- MARM (Unit of Measure)

- MBEW (Material Valuation)

- MVKE (Sales Data for Material)

- EINA and EINE, A017 and some others.

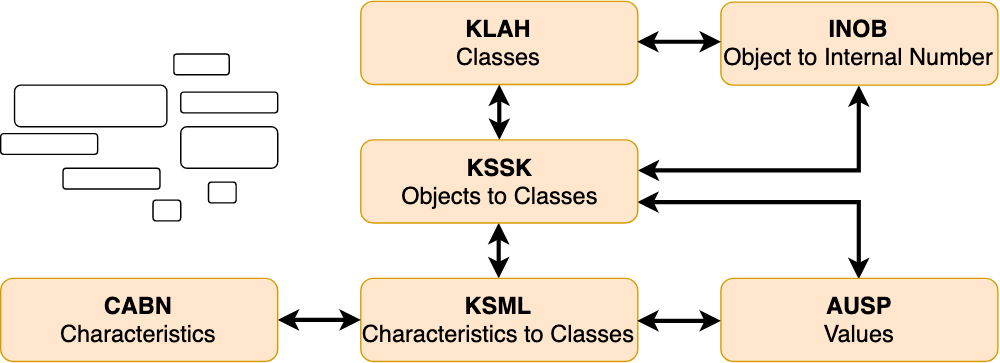

Additionally, there are tables used for the classification and characteristics:

- CABN (Characteristic information)

- KLAH (Class Header Data information)

- AUSP (Characteristic Values)

- KSSK (Allocation Table: Object to Class) and some others.

Material Master Data Replication

For material data replication, there is the sapproduct extension. It defines the replication rules, mappings, and canonical and target structures.

The following IDocs are involved in the material master data integration scenario:

- Material core data:

  - MATMAS IDoc. In the example below, I use two MATMAS IDocs: one for the variant product (https://pastebin.com/A6y0ZctT) and another for the base product (https://pastebin.com/tbC2sycy).

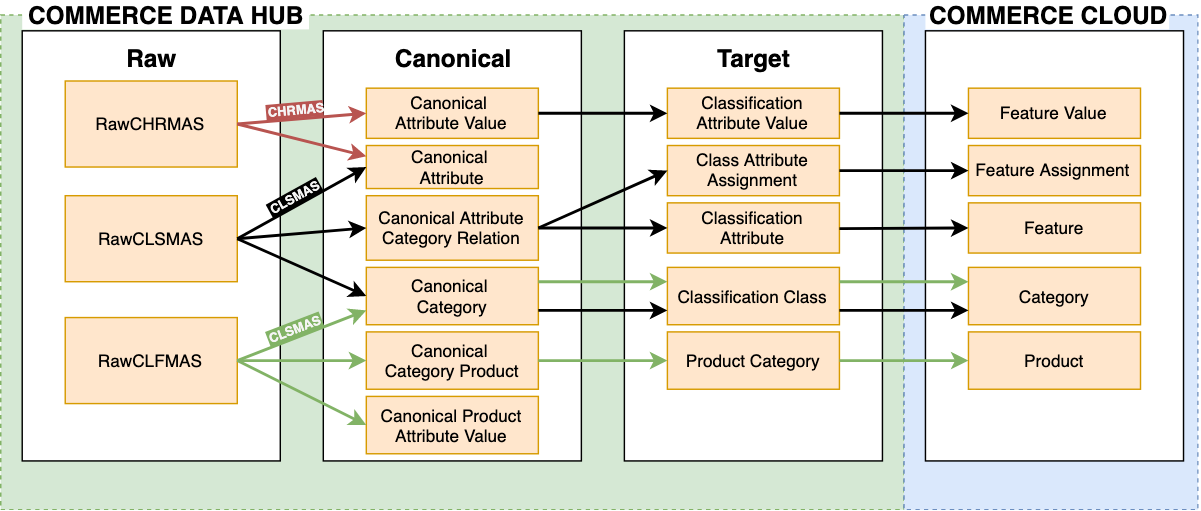

- Classification and characteristics IDocs:

  - CLSMAS IDoc. It contains material classes with characteristics. Example: https://pastebin.com/6VVTFYqh

  - CLFMAS IDoc. Example: https://pastebin.com/gLdnZGds

  - CHRMAS IDoc. This IDoc is used to transfer the product characteristics dictionary. I haven’t included it in the process because it is normally stable.

| Segment | Description | Supported by the default Data Hub configuration? |
|---|---|---|
| E1MARAM | Master material general data (MARA) | NO |
| E1MARA1 | Additional Fields for E1MARAM | NO |
| E1MAKTM | Master material short texts (MAKT) | YES |
| E1MARCM | Master material C segment (MARC) | NO |
| E1MARC1 | Additional Fields for E1MARCM | NO |
| E1MARDM | Master material warehouse/batch segment (MARD) | NO |
| E1MFHMM | Master material production resource/tool (MFHM) | NO |
| E1MPGDM | Master material product group | NO |
| E1MPOPM | Master material forecast parameter | NO |
| E1MPRWM | Master material forecast value | NO |
| E1MVEGM | Master material total consumption | NO |
| E1MVEUM | Master material unplanned consumption | NO |
| E1MKALM | Master material production version | NO |
| E1MARMM | Master material units of measure (MARM) | YES |
| E1MEANM | Master Material European Article Number (MEAN) | NO |
| E1MBEWM | Master material material valuation (MBEW) | NO |
| E1MLGNM | Master material material data per warehouse number (MLGN) | NO |
| E1MLGTM | Material Master: Material Data for Each Storage Type (MLGT) | NO |
| E1MVKEM | Master material sales data (MVKE) | NO |
| E1MLANM | Master material tax classification (MLAN) | YES |
| E1MTXHM | Master material long text header | NO |
| E1MTXLM | Master material long text line | NO |
| E1CUCFG → E1CUVAL | General configuration data for the configurable material. Not available in MATMAS05. | YES |
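The screening advice from the IDoc section can be combined with this table. The sketch below is an assumption-based illustration, not a Data Hub feature: `KEEP` is taken from the YES rows of the table, plus the control record and E1MARAM, which must stay as the parent of the other segments even though the table marks it NO. It also assumes that segments, unlike fields, carry a `SEGMENT` attribute in the XML, as in the MATMAS example later in this article.

```python
import xml.etree.ElementTree as ET

# YES rows of the table, plus the containers that hold them (assumption).
KEEP = {"EDI_DC40", "E1MARAM", "E1MAKTM", "E1MARMM", "E1MLANM", "E1CUCFG"}


def screen_matmas(xml_payload, keep=KEEP):
    """Drop unsupported segments below IDOC and E1MARAM; report what was dropped."""
    root = ET.fromstring(xml_payload)
    parents = list(root.iter("IDOC")) + list(root.iter("E1MARAM"))
    dropped = []
    for parent in parents:
        for child in list(parent):                 # copy: we remove while looping
            is_segment = "SEGMENT" in child.attrib
            if is_segment and child.tag not in keep:
                parent.remove(child)
                dropped.append(child.tag)
    return ET.tostring(root, encoding="unicode"), dropped
```

Whether stripping segments is safe depends on your own customizations: a segment marked NO here may still be read by custom mappings or Java code.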

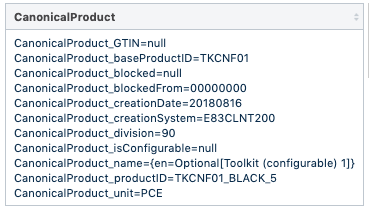

However, the configuration shows that some MATMAS data are also involved in CanonicalProductVariantAttributeValue, for example. This canonical item is not used further in the configuration, but it is used in the Java classes. Another example is CanonicalProductTax, which is populated from MATMAS as well; there are no rules in the XML configuration showing how CanonicalProductTax records are supposed to be transformed into any objects in Hybris. This split between configuration-driven and Java-code-driven data transformation makes it enormously difficult to understand how all this stuff works.

The SAP Commerce product data model and local data configuration require an extension and an initial setup to get Commerce ready for Data Hub. First, there are additional types and attributes. For example, the Product type is extended with the following attributes:

- sapBlocked: True if the product is blocked for sales. This value merges the cross-sales-area flag and the sales-area-specific flag.

- sapBlockedDate: The date at which the blocked status takes effect.

- sapConfigurable: True if the product is configurable.

- sapEAN: The EAN of the sales unit of measure (UOM).

- sapBaseUnitConversion: The conversion factor by which a quantity in the sales UOM is multiplied to obtain the quantity in the base UOM.
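The conversion factor can be illustrated with the unit-of-measure data carried in the E1MARMM segment. In SAP’s MARM table, UMREZ and UMREN are the numerator and denominator for converting an alternative unit into the base unit. Whether Data Hub computes sapBaseUnitConversion exactly this way is an assumption; the sketch only shows the arithmetic and uses `Fraction` to avoid rounding errors.

```python
from fractions import Fraction


def base_unit_factor(umrez, umren):
    """Factor that converts a quantity in the alternative (sales) unit into base units."""
    if umren == 0:
        raise ValueError("UMREN (denominator) must not be zero")
    return Fraction(umrez, umren)


def to_base_quantity(quantity, umrez, umren):
    """Multiply a sales-unit quantity by the factor, as sapBaseUnitConversion describes."""
    return Fraction(quantity) * base_unit_factor(umrez, umren)
```

For example, if the base unit is piece and 5 kg correspond to 3 pieces, the factor for kilograms is 3/5, so 10 kg become 6 pieces. A sales unit equal to the base unit gives a factor of 1.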

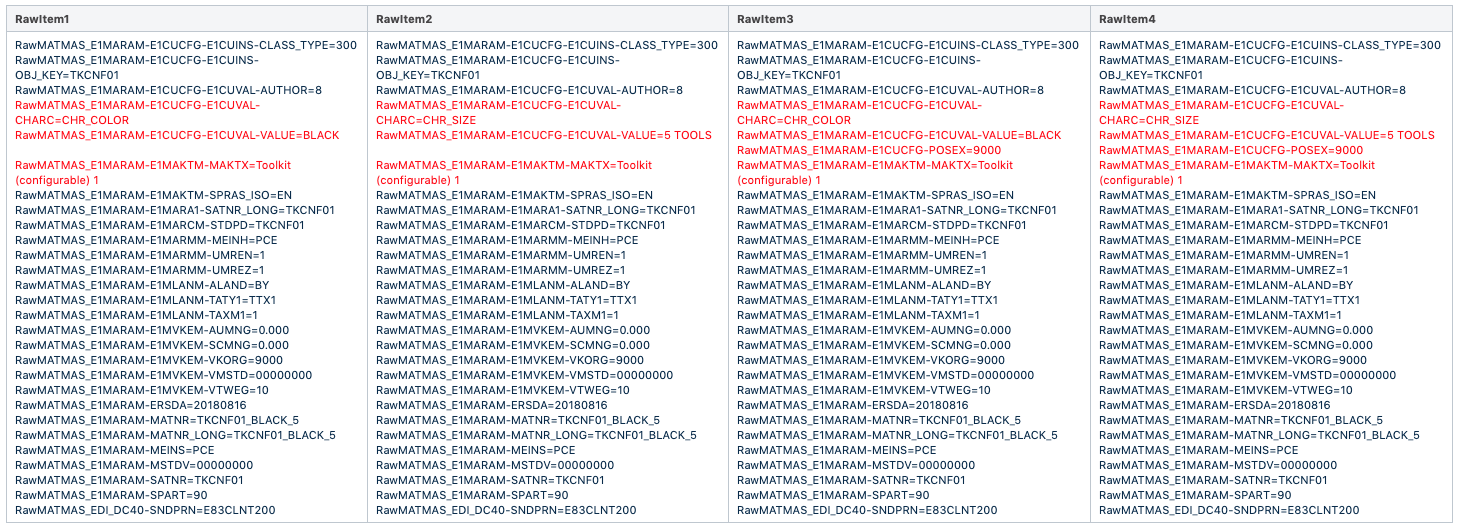

Load phase

At the load phase, the information from the IDoc is loaded into the database. The names of the XML tags are concatenated and persisted as keys of raw item attributes. As mentioned above, only some IDoc attributes are configured for import; all others are ignored. The following list shows the IDoc attributes Data Hub is aware of, along with their source (IDoc) and purpose. The symbol “→” represents nesting in the IDoc XML: a → b means “b is a sub-element of a”.

- E1CABNM → ATEIN. ‘X’ means single-valued. From CHRMAS. Used for ClassAttributeAssignment.multiValued = true if singleValued or interval is ‘X’ (see ATINT below). Example: “X” or “”.

- E1CABNM → ATFOR. Data type of the characteristic (for example, “NUM”). Used for ClassAttributeAssignment.attributeType:
  - ‘enum’ if attributeValueId <> “”
  - ‘date’ if attributeType = ‘DATE’
  - ‘number’ if attributeType = ‘NUM’
  - ‘string’ otherwise

- E1CABNM → ATINT. “X” means interval values are allowed. From CHRMAS. Used for:
  - ClassAttributeAssignment.multiValued = true if singleValued or interval is ‘X’ (see ATEIN above)
  - ClassAttributeAssignment.range if interval is ‘X’

- E1CABNM → ATNAM. Name of the characteristic (for example, “weight”). From CHRMAS. Used for:
  - ClassificationAttributeValue.code (attributeId + “_” + attributeValueId)
  - ClassificationAttribute.code (= attributeId)
  - ClassAttributeAssignment.classificationAttribute
  - Product.attributes (via determineAttributeValue()): @attributeId[…]
  - ERPVariantProduct.attributes (same as Product.attributes)

- E1CABNM → ATBEZ. Language-dependent description of the characteristic (for example, “Country of origin”).

- E1CABNM → SPRAZ_ISO. Language (for example, “EN”).

- E1CABNM → ATWRT. Characteristic value ID (for example, “12”). From CHRMAS and CLSMAS. Used for:
  - ClassificationAttributeValue.code (attributeId + “_” + attributeValueId)
  - ClassificationAttribute.code (= attributeId)
  - ClassAttributeAssignment.classificationAttribute
  - Product.attributes (via determineAttributeValue()): @attributeId[…]
  - ERPVariantProduct.attributes (same as Product.attributes)

- E1CABNM → ATWB.

- E1TEXTL → TDFORMAT.

- E1TEXTL → TDLINE.

- E1TEXTL → LANGUAGE_ISO.

- MSEHI. Unit of measure. From CHRMAS. Used for:
  - ClassAttributeAssignment.unit → unit(code,systemVersion(catalog(id),version),unitType)[unique=true]
  - Product.unit → unit(code)
  - ERPVariantProduct.unit → unit(code)
  - Product.sapBaseUnitConversion (formula)

- CLASS. Class number/name. From CLSMAS. Used for:
  - ClassificationClass.categoryID

- E1KSMLM → ATNAM. Attribute ID.

- KLART. Class type (for example, “001”, which is a material class). From CLFMAS if E10CLFM-MAFID = 0.

- E1MARAM → E1CUCFG → E1CUVAL → AUTHOR. Attribute author. From MATMAS.

- E1MARAM → E1CUCFG → E1CUVAL → CHARC. Attribute ID. From MATMAS.

- E1MARAM → E1CUCFG → E1CUVAL → VALUE. Attribute value. From MATMAS.

- E1MARAM → E1MAKTM → MAKTX. Description. From MATMAS.

- E1MARAM → E1MAKTM → SPRAS_ISO. Language. From MATMAS.

- E1MARAM → E1MARMM → UMREN. Denominator for the conversion to the base unit of measure. Example: with piece (PC) as the base unit, 5 kg correspond to 3 pieces. From MATMAS.

- E1MARAM → E1MLANM → ALAND. Tax country (for example, “GB”). From MATMAS.

- E1MARAM → E1MLANM → TATY1. Tax class. From MATMAS.

- E1MARAM → E1MLANM → TAXM1. Tax value. From MATMAS.

- E1MARAM → MATNR. The product SKU (material number). From MATMAS.

- E1MARAM → MATNR_LONG. Long material number (up to 40 characters). From MATMAS.

- E10CLFM → E1AUSPM → ATFLB. Attribute value (numeric). From CLFMAS.

- E10CLFM → E1AUSPM → ATFLV. Internal floating point “from” value (numeric). From CLFMAS.

- E10CLFM → E1AUSPM → ATNAM. Characteristic name. From CLFMAS if E10CLFM-MAFID is zero.

- E10CLFM → E1AUSPM → ATWRT. Characteristic value. From CHRMAS.

- E10CLFM → E1KSSKM → CLASS. Category parent. From CLFMAS.

- E10CLFM → KLART. Category type. It is used to create the catalog name (“ERP_CLASSIFICATION_” + category type). From CLFMAS.

- E10CLFM → OBJEK_LONG. ID, classification. From CLFMAS.

- E10CLFM → SNDPRN. Creation system. From CLFMAS.
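A few of the derivation rules above are small enough to write down. The sketch restates the ATFOR, ATINT, and ATNAM/ATWRT rules for ClassAttributeAssignment and ClassificationAttributeValue exactly as listed; the function names are hypothetical, and in Data Hub this logic is spread across the sapproduct XML configuration and Java classes.

```python
def attribute_type(atfor, attribute_value_id):
    """ClassAttributeAssignment.attributeType, following the ATFOR rules listed above."""
    if attribute_value_id != "":
        return "enum"           # a characteristic value ID (ATWRT) is present
    if atfor == "DATE":
        return "date"
    if atfor == "NUM":
        return "number"
    return "string"


def is_range(atint):
    """ClassAttributeAssignment.range is set when ATINT (interval values allowed) is 'X'."""
    return atint == "X"


def value_code(attribute_id, attribute_value_id):
    """ClassificationAttributeValue.code = attributeId + '_' + attributeValueId."""
    return f"{attribute_id}_{attribute_value_id}"
```

Note that the value-ID check comes first: a numeric characteristic with predefined values is still mapped to `enum`.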

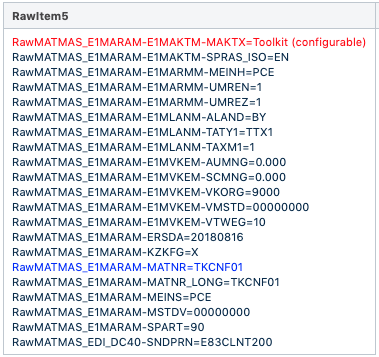

```xml
<?xml version="1.0" encoding="UTF-8"?>
<MATMAS05>
  <IDOC BEGIN="1">
    <E1MARAM SEGMENT="1">
      <MSGFN>005</MSGFN>
      <MATNR>TKCNF01_BLACK_5</MATNR>
    </E1MARAM>
    . . .
    <E1CUCFG SEGMENT="1">
      <E1CUVAL SEGMENT="1">
        <INST_ID>00000001</INST_ID>
        <CHARC>CHR_COLOR</CHARC>
        <VALUE>BLACK</VALUE>
        <AUTHOR>8</AUTHOR>
        <VALCODE>1</VALCODE>
        <VALUE_LONG>BLACK</VALUE_LONG>
      </E1CUVAL>
      <E1CUVAL SEGMENT="1">
        <INST_ID>00000001</INST_ID>
        <CHARC>CHR_SIZE</CHARC>
        <VALUE>5 TOOLS</VALUE>
        <AUTHOR>8</AUTHOR>
        <VALCODE>1</VALCODE>
        <VALUE_LONG>5 TOOLS</VALUE_LONG>
      </E1CUVAL>
    </E1CUCFG>
    . . .
  </IDOC>
</MATMAS05>
```

As a result, Data Hub creates two raw items during the load phase:

Raw Item #1

- RawMATMAS_E1MARAM-MATNR=TKCNF01_BLACK_5

- RawMATMAS_E1MARAM-E1CUCFG-E1CUVAL-AUTHOR=8

- RawMATMAS_E1MARAM-E1CUCFG-E1CUVAL-CHARC=CHR_COLOR

- RawMATMAS_E1MARAM-E1CUCFG-E1CUVAL-VALUE=BLACK

- RawMATMAS_E1MARAM-E1MAKTM-MAKTX=Toolkit (configurable) 1

Raw Item #2

- RawMATMAS_E1MARAM-MATNR=TKCNF01_BLACK_5

- RawMATMAS_E1MARAM-E1CUCFG-E1CUVAL-AUTHOR=8

- RawMATMAS_E1MARAM-E1CUCFG-E1CUVAL-CHARC=CHR_SIZE

- RawMATMAS_E1MARAM-E1CUCFG-E1CUVAL-VALUE=5 TOOLS

- RawMATMAS_E1MARAM-E1MAKTM-MAKTX=Toolkit (configurable) 1

The two raw items differ only in the CHARC and VALUE attributes.
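The load-phase pattern shown above, where tag names are joined into attribute keys and each of the two E1CUVAL segments yields its own raw item, can be approximated with ElementTree. This is a sketch under assumptions, not the Data Hub IDoc adapter: the `-` separator and the `Raw` + IDoc type naming are inferred from the output above, and the optional `keep` set stands in for the list of configured attributes.

```python
import itertools
import re
import xml.etree.ElementTree as ET


def _expand(element, prefix):
    """Return one attribute dict per combination of repeated child segments."""
    fields, segments = {}, {}
    for child in element:
        if len(child) == 0:                             # a field: keep its text
            fields[prefix + child.tag] = (child.text or "").strip()
        else:                                           # a nested segment
            segments.setdefault(child.tag, []).append(child)
    # Each distinct segment name contributes one alternative per occurrence.
    options = []
    for tag, occurrences in segments.items():
        alternatives = []
        for occurrence in occurrences:
            alternatives.extend(_expand(occurrence, prefix + tag + "-"))
        options.append(alternatives)
    rows = []
    for combination in itertools.product(*options):   # one row if no segments
        row = dict(fields)
        for part in combination:
            row.update(part)
        rows.append(row)
    return rows


def load_raw_items(xml_payload, keep=None):
    """Flatten an IDoc XML payload into (raw item type, attributes) pairs."""
    root = ET.fromstring(xml_payload)
    raw_type = "Raw" + re.sub(r"\d+$", "", root.tag)   # MATMAS05 -> RawMATMAS
    items = []
    for idoc in root.iter("IDOC"):
        for row in _expand(idoc, ""):
            if keep is not None:                       # only configured attributes
                row = {k: v for k, v in row.items() if k in keep}
            items.append((raw_type, row))
    return items
```

The same mechanism explains why a single CLSMAS IDoc with two same-named segments produces two raw items.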

After adding a base product, MATMAS-BASE.xml (https://pastebin.com/tbC2sycy), the system adds the fifth raw item:

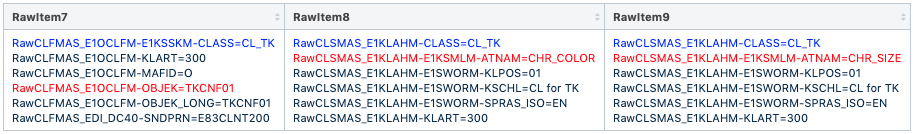

After importing CLSMAS (https://pastebin.com/6VVTFYqh) and CLFMAS (https://pastebin.com/gLdnZGds), our set of raw items was extended with three new records, one for CLFMAS and two for CLSMAS:

A single CLSMAS IDoc produced two raw items because it contains two segments with the same name.

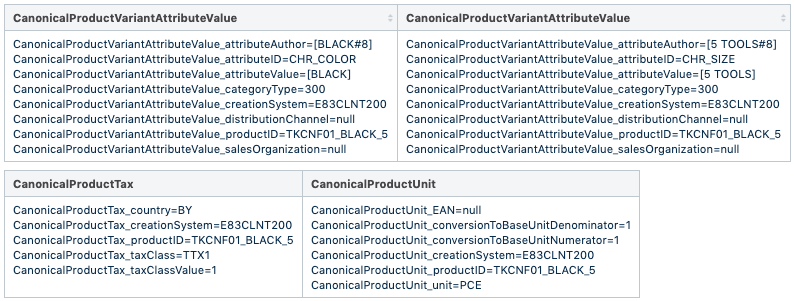

Composition phase

At the composition phase, the elements of the raw items are filtered and grouped according to the business logic of the particular transformation flow. The goal of the grouping mechanism is to split raw items into groups that are later handled by the composition logic. There are two default grouping handlers: one splits by canonical item type, the other by primary key. A primary key is a combination of attributes marked in the configuration as “part of a primary key”. In the example above, the eight raw items of the RawMATMAS, RawCLSMAS, and RawCLFMAS types were converted into five canonical items of the CanonicalProductVariantAttributeValue, CanonicalProductTax, CanonicalProductUnit, and CanonicalProduct types.
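The two default grouping handlers can be pictured as a two-level group-by: first by canonical type, then by the values of the primary-key attributes. The sketch below is a hypothetical illustration that starts from items already mapped to canonical attribute names. `PRIMARY_KEYS` mirrors the KEY attributes of CanonicalProductVariantAttributeValue from the configuration table in this section, and the “later non-empty values win” merge is a simplification of Data Hub’s composition handlers, which are Java classes configured in Spring.

```python
from collections import defaultdict

# Hypothetical: canonical type -> attributes marked as part of its primary key.
PRIMARY_KEYS = {
    "CanonicalProductVariantAttributeValue":
        ("categoryType", "attributeId", "creationSystem", "productid"),
}


def group_items(mapped_items):
    """Group (canonical type, attributes) pairs by type, then by primary-key values."""
    groups = defaultdict(list)
    for canonical_type, attributes in mapped_items:
        key_fields = PRIMARY_KEYS[canonical_type]
        key = tuple(attributes.get(name) for name in key_fields)
        groups[(canonical_type, key)].append(attributes)
    return dict(groups)


def compose(groups):
    """Merge each group into one canonical item; later non-empty values win."""
    composed = {}
    for group_key, members in groups.items():
        merged = {}
        for attributes in members:
            merged.update({k: v for k, v in attributes.items() if v is not None})
        composed[group_key] = merged
    return composed
```

Two items that differ only in non-key attributes end up in the same group and are merged into one canonical item; a different attributeId starts a new group.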

According to the configuration, CanonicalProductVariantAttributeValue has the following rules:

| CanonicalProductVariantAttributeValue attribute | Source type | Source | Example |
|---|---|---|---|
| attributeAuthor | MATMAS | E1MARAM-E1CUCFG-E1CUVAL-VALUE + “#” + E1MARAM-E1CUCFG-E1CUVAL-AUTHOR | [BLACK#8] |
| attributeValue | MATMAS | E1MARAM-E1CUCFG-E1CUVAL-VALUE | [BLACK] |
| categoryType (primary key) | MATMAS | E1MARAM-E1CUCFG-E1CUINS-CLASS_TYPE | 300 |
| attributeId (primary key) | MATMAS | E1MARAM-E1CUCFG-E1CUVAL-CHARC | CHR_COLOR |
| creationSystem (primary key) | MATMAS | EDI_DC40-SNDPRN | E83CLNT200 |
| productid (primary key) | MATMAS | E1MARAM-MATNR | TKCNF01_BLACK_5 |
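The mapping in this table can be written as a single function over one raw item. This is a hypothetical sketch: the raw-item keys follow the load-phase naming shown earlier, the `#` separator is inferred from the `BLACK#8` example (and from values such as `5 TOOLS#8` in the published ImpEx), and the square brackets in the Example column, which suggest collection values, are ignored for simplicity.

```python
# Prefix of the E1CUVAL fields in a RawMATMAS item (naming as in the load phase).
VAL = "E1MARAM-E1CUCFG-E1CUVAL-"


def to_variant_attribute_value(raw):
    """Map one RawMATMAS item to CanonicalProductVariantAttributeValue attributes."""
    value = raw[VAL + "VALUE"]
    return {
        # primary-key attributes
        "categoryType": raw["E1MARAM-E1CUCFG-E1CUINS-CLASS_TYPE"],
        "attributeId": raw[VAL + "CHARC"],
        "creationSystem": raw["EDI_DC40-SNDPRN"],
        "productid": raw["E1MARAM-MATNR"],
        # payload attributes
        "attributeValue": value,
        "attributeAuthor": f"{value}#{raw[VAL + 'AUTHOR']}",   # value and author joined by '#'
    }
```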

- BaseVariant

- BaseVariantAttributes

- CleanBaseVariantAttributes

- BaseProduct

- ClassificationClass

- ClassAttributeAssignment

- ClassificationAttribute

Publish phase

At this phase, Data Hub sends the target items to the target system (SAP Commerce in our case) via an adapter (in our case, the SAP Commerce Cloud adapter, which uses ImpEx and assumes that the datahubadapter extension is installed on the SAP Commerce Cloud side). Let’s look at the publishing process for the case when only a MATMAS is loaded. After processing the MATMAS IDoc, we got four raw items and five canonical items (CanonicalProductVariantAttributeValue, CanonicalProductTax, CanonicalProductUnit, CanonicalProduct). The resulting ImpEx for the “variant MATMAS only” case is:

```
#% impex.setLocale( Locale.ENGLISH )
INSERT_UPDATE SAPInboundVariant;;@SAPInboundVariant[translator=de.hybris.platform.sap.sapmodel.inbound.SapClassificationAttributeTranslator];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;1;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5
;4;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

#% impex.setLocale( Locale.ENGLISH )
INSERT_UPDATE ERPVariantProduct;;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];sapBlocked;variantType(code);sapConfigurable;unit(code);baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true];supercategories(code,catalogVersion(catalog(id),version));sapBaseUnitConversion
;5;;;false;;false;PCE;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5;<ignore>;1

INSERT_UPDATE ERPVariantProduct;;unit(code);name[lang=en];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;5;PCE;Toolkit (configurable) 1;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5
```
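Each ImpEx block above is just a header line followed by data rows, so the rendering side of the publish step can be pictured as a small serializer. The sketch is illustrative only. `impex_block` is a hypothetical helper; it assumes, based on the output above, that the unnamed first column (`;;` in the header) carries an item ID in each row. It performs no escaping, so values must not contain `;` or quotes.

```python
def _cell(value):
    """Render one value the way the generated rows do (empty, lowercase booleans)."""
    if value is None:
        return ""
    if isinstance(value, bool):
        return "true" if value else "false"
    return str(value)


def impex_block(type_code, columns, rows):
    """Render an INSERT_UPDATE header and (item_id, values) rows as ImpEx text."""
    lines = [f"INSERT_UPDATE {type_code};;" + ";".join(columns)]
    for item_id, values in rows:
        lines.append(f";{item_id};" + ";".join(_cell(v) for v in values))
    return "\n".join(lines)
```

For example, `impex_block("Product", ["unit(code)", "name[lang=en]", "catalogVersion(Catalog(id),version)[unique=true]", "code[unique=true]"], [(504, ["PCE", "Toolkit (configurable)", "Default:Staged", "TKCNF01"])])` produces the Product block generated for the base product.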

After the base product is added, another set of ImpEx statements is generated:

```
INSERT_UPDATE Product;;unit(code);name[lang=en];catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;504;PCE;Toolkit (configurable);Default:Staged;TKCNF01

INSERT_UPDATE SAPInboundVariant;;@SAPInboundVariant[translator=de.hybris.platform.sap.sapmodel.inbound.SapClassificationAttributeTranslator];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;4;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5
;1;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5
```

Note that here a product-variant link has been created.

After adding the CLSMAS and CLFMAS, the system generates classification catalogs and entries:

```
INSERT_UPDATE ClassificationClass;;supercategories(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;1014;<ignore>;ERP_CLASSIFICATION_300:ERP_IMPORT;CL_TK

INSERT_UPDATE ClassificationClass;;name[lang=en];catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;1014;CL for TK;ERP_CLASSIFICATION_300:ERP_IMPORT;CL_TK

INSERT_UPDATE SAPInboundVariant;;@SAPInboundVariant[translator=de.hybris.platform.sap.sapmodel.inbound.SapClassificationAttributeTranslator];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;1025;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5
;1024;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

#% impex.setLocale( Locale.ENGLISH )
INSERT_UPDATE ClassificationAttribute;;defaultAttributeValues(systemVersion(catalog(id),version),code);systemVersion(catalog(id),version)[unique=true];code[unique=true]
;1005;<ignore>;ERP_CLASSIFICATION_300:ERP_IMPORT;CHR_SIZE
;1004;<ignore>;ERP_CLASSIFICATION_300:ERP_IMPORT;CHR_COLOR

INSERT_UPDATE ClassAttributeAssignment;;range;formatDefinition;unit(code,systemVersion(catalog(id),version),unitType)[unique=true];multiValued;attributeType(code);classificationAttribute(systemVersion(catalog(id),version),code)[unique=true];classificationClass(catalogVersion(catalog(id),version),code)[unique=true]
;1005;false;<ignore>;;true;string;ERP_CLASSIFICATION_300:ERP_IMPORT:CHR_SIZE;ERP_CLASSIFICATION_300:ERP_IMPORT:CL_TK
;1004;false;<ignore>;;true;string;ERP_CLASSIFICATION_300:ERP_IMPORT:CHR_COLOR;ERP_CLASSIFICATION_300:ERP_IMPORT:CL_TK

INSERT_UPDATE ERPVariantProduct;;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];sapBlocked;variantType(code);sapConfigurable;unit(code);baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true];supercategories(code,catalogVersion(catalog(id),version));sapBaseUnitConversion
;1021;;;false;;false;PCE;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5;<ignore>;1

INSERT_UPDATE ERPVariantProduct;;unit(code);name[lang=en];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;1021;PCE;Toolkit (configurable);TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

INSERT_UPDATE Product;;sapConfigurable;unit(code);catalogVersion(Catalog(id),version)[unique=true];code[unique=true];supercategories(code,catalogVersion(catalog(id),version));sapBaseUnitConversion;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];sapBlocked;variantType(code)
;1022;true;PCE;Default:Staged;TKCNF01;CL_TK:ERP_CLASSIFICATION_300:ERP_IMPORT;1;;;false;ERPVariantProduct

INSERT_UPDATE Product;;unit(code);name[lang=en];catalogVersion(Catalog(id),version)[unique=true];code[unique=true]
;1022;PCE;Toolkit (configurable);Default:Staged;TKCNF01

INSERT_UPDATE ERPVariantProduct;;catalogVersion(Catalog(id),version)[unique=true];code[unique=true];baseProduct(code,catalogVersion(catalog(id),version));@CHR_SIZE[system=ERP_CLASSIFICATION_300,version=ERP_IMPORT,translator=de.hybris.platform.sap.sapmodel.authors.SapClassificationAttributeAuthorTranslator]
;1024;Default:Staged;TKCNF01_BLACK_5;TKCNF01:Default:Staged;5 TOOLS#8

INSERT_UPDATE ERPVariantProduct;;catalogVersion(Catalog(id),version)[unique=true];code[unique=true];baseProduct(code,catalogVersion(catalog(id),version));@CHR_COLOR[system=ERP_CLASSIFICATION_300,version=ERP_IMPORT,translator=de.hybris.platform.sap.sapmodel.authors.SapClassificationAttributeAuthorTranslator]
;1025;Default:Staged;TKCNF01_BLACK_5;TKCNF01:Default:Staged;BLACK#8
```

INSERT_UPDATE SAPInboundVariant;;@SAPInboundVariant[translator=de.hybris.platform.sap.sapmodel.inbound.SapClassificationAttributeTranslator];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;1;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

;4;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

#% impex.setLocale( Locale.ENGLISH )

INSERT_UPDATE ERPVariantProduct;;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];sapBlocked;variantType(code);sapConfigurable;unit(code);baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true];supercategories(code,catalogVersion(catalog(id),version));sapBaseUnitConversion

;5;;;false;;false;PCE;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5;<ignore>;1

INSERT_UPDATE ERPVariantProduct;;unit(code);name[lang=en];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;5;PCE;Toolkit (configurable) 1;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

After the base product is added, another set of ImpEx statements is generated:

INSERT_UPDATE Product;;unit(code);name[lang=en];catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;504;PCE;Toolkit (configurable);Default:Staged;TKCNF01

INSERT_UPDATE SAPInboundVariant;;@SAPInboundVariant[translator=de.hybris.platform.sap.sapmodel.inbound.SapClassificationAttributeTranslator];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;4;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

;1;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

Note that a product-variant link has now been created.
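In the SAPInboundVariant rows, the link is carried by the baseProduct(code,catalogVersion(catalog(id),version)) column. ImpEx resolves a composite reference like this by splitting the cell value on ":" and assigning the parts, in order, to the leaf attributes of the header expression, so TKCNF01:Default:Staged means code TKCNF01 in catalog Default, version Staged. The Python sketch below illustrates that mapping. It assumes the default ":" separator and no escaping, and leaf_paths and resolve_reference are hypothetical helpers for illustration, not part of the ImpEx API.

```python
def leaf_paths(expr: str) -> list[str]:
    """Flatten an ImpEx reference expression such as
    'baseProduct(code,catalogVersion(catalog(id),version))'
    into dotted leaf-attribute paths, in declaration order."""
    paths: list[str] = []
    stack: list[str] = []  # names of the enclosing reference attributes
    token = ""
    for ch in expr:
        if ch == "(":
            stack.append(token.strip())  # descend into a nested reference
            token = ""
        elif ch in ",)":
            if token.strip():
                paths.append(".".join(stack + [token.strip()]))
            token = ""
            if ch == ")":
                stack.pop()  # leave the current nested reference
        else:
            token += ch
    if token.strip():  # plain attribute without parentheses, e.g. 'code'
        paths.append(".".join(stack + [token.strip()]))
    return paths


def resolve_reference(column: str, value: str) -> dict[str, str]:
    """Map a ':'-separated cell value onto the leaf attributes of its column."""
    expr = column.split("[", 1)[0]  # drop modifiers such as [unique=true]
    paths = leaf_paths(expr)
    parts = value.split(":")  # assumes the default separator and no escaping
    if len(parts) != len(paths):
        raise ValueError(f"expected {len(paths)} parts, got {len(parts)}")
    return dict(zip(paths, parts))


# baseProduct cell from the SAPInboundVariant rows for TKCNF01_BLACK_5
link = resolve_reference(
    "baseProduct(code,catalogVersion(catalog(id),version))",
    "TKCNF01:Default:Staged",
)
print(link)
```

The printed mapping assigns TKCNF01 to baseProduct.code, Default to baseProduct.catalogVersion.catalog.id and Staged to baseProduct.catalogVersion.version. The same rule explains supercategories values such as CL_TK:ERP_CLASSIFICATION_300:ERP_IMPORT, which point a product at the classification class CL_TK in the ERP_CLASSIFICATION_300 / ERP_IMPORT catalog version.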

After the CLSMAS and CLFMAS IDocs are added, the system generates the classification classes, attributes, and attribute assignments:

INSERT_UPDATE ClassificationClass;;supercategories(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;1014;<ignore>;ERP_CLASSIFICATION_300:ERP_IMPORT;CL_TK

INSERT_UPDATE ClassificationClass;;name[lang=en];catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;1014;CL for TK;ERP_CLASSIFICATION_300:ERP_IMPORT;CL_TK

INSERT_UPDATE SAPInboundVariant;;@SAPInboundVariant[translator=de.hybris.platform.sap.sapmodel.inbound.SapClassificationAttributeTranslator];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;1025;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

;1024;<ignore>;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

#% impex.setLocale( Locale.ENGLISH )

INSERT_UPDATE ClassificationAttribute;;defaultAttributeValues(systemVersion(catalog(id),version),code);systemVersion(catalog(id),version)[unique=true];code[unique=true]

;1005;<ignore>;ERP_CLASSIFICATION_300:ERP_IMPORT;CHR_SIZE

;1004;<ignore>;ERP_CLASSIFICATION_300:ERP_IMPORT;CHR_COLOR

INSERT_UPDATE ClassAttributeAssignment;;range;formatDefinition;unit(code,systemVersion(catalog(id),version),unitType)[unique=true];multiValued;attributeType(code);classificationAttribute(systemVersion(catalog(id),version),code)[unique=true];classificationClass(catalogVersion(catalog(id),version),code)[unique=true]

;1005;false;<ignore>;;true;string;ERP_CLASSIFICATION_300:ERP_IMPORT:CHR_SIZE;ERP_CLASSIFICATION_300:ERP_IMPORT:CL_TK

;1004;false;<ignore>;;true;string;ERP_CLASSIFICATION_300:ERP_IMPORT:CHR_COLOR;ERP_CLASSIFICATION_300:ERP_IMPORT:CL_TK

INSERT_UPDATE ERPVariantProduct;;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];sapBlocked;variantType(code);sapConfigurable;unit(code);baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true];supercategories(code,catalogVersion(catalog(id),version));sapBaseUnitConversion

;1021;;;false;;false;PCE;TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5;<ignore>;1

INSERT_UPDATE ERPVariantProduct;;unit(code);name[lang=en];baseProduct(code,catalogVersion(catalog(id),version));catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;1021;PCE;Toolkit (configurable);TKCNF01:Default:Staged;Default:Staged;TKCNF01_BLACK_5

INSERT_UPDATE Product;;sapConfigurable;unit(code);catalogVersion(Catalog(id),version)[unique=true];code[unique=true];supercategories(code,catalogVersion(catalog(id),version));sapBaseUnitConversion;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];sapBlocked;variantType(code)

;1022;true;PCE;Default:Staged;TKCNF01;CL_TK:ERP_CLASSIFICATION_300:ERP_IMPORT;1;;;false;ERPVariantProduct

INSERT_UPDATE Product;;unit(code);name[lang=en];catalogVersion(Catalog(id),version)[unique=true];code[unique=true]

;1022;PCE;Toolkit (configurable);Default:Staged;TKCNF01

INSERT_UPDATE ERPVariantProduct;;catalogVersion(Catalog(id),version)[unique=true];code[unique=true];baseProduct(code,catalogVersion(catalog(id),version));@CHR_SIZE[system=ERP_CLASSIFICATION_300,version=ERP_IMPORT,translator=de.hybris.platform.sap.sapmodel.authors.SapClassificationAttributeAuthorTranslator]

;1024;Default:Staged;TKCNF01_BLACK_5;TKCNF01:Default:Staged;5 TOOLS#8

INSERT_UPDATE ERPVariantProduct;;catalogVersion(Catalog(id),version)[unique=true];code[unique=true];baseProduct(code,catalogVersion(catalog(id),version));@CHR_COLOR[system=ERP_CLASSIFICATION_300,version=ERP_IMPORT,translator=de.hybris.platform.sap.sapmodel.authors.SapClassificationAttributeAuthorTranslator]

;1025;Default:Staged;TKCNF01_BLACK_5;TKCNF01:Default:Staged;BLACK#8
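Every statement in these listings is an INSERT_UPDATE, and the same item often appears in more than one statement: product TKCNF01 (row 1022), for instance, gets its SAP flags from one header and its unit and English name from another. ImpEx looks up an existing item by the columns flagged [unique=true] and updates it, or creates it if nothing matches, so both statements end up on one product. The Python sketch below illustrates that behavior under loud assumptions: an in-memory dict stands in for the Commerce database, parse_header and insert_update are hypothetical helpers, the unnamed column holding the row number (1022) is simply skipped, and reference resolution and modifiers such as lang and dateformat are not applied.

```python
def parse_header(header: str) -> tuple[str, str, list[tuple[str, bool]]]:
    """Split an ImpEx header into mode, type name and (column, is_unique) pairs."""
    mode_and_type, *columns = header.split(";")
    mode, type_name = mode_and_type.split()
    parsed = [(col.split("[", 1)[0], "unique=true" in col) for col in columns]
    return mode, type_name, parsed


def insert_update(store: dict, header: str, row: str) -> str:
    """Apply one INSERT_UPDATE row to an in-memory store; report what happened."""
    mode, type_name, columns = parse_header(header)
    if mode != "INSERT_UPDATE":
        raise ValueError(f"unsupported mode: {mode}")
    cells = row.split(";")[1:]  # leading empty field lines up with the mode/type cell
    if len(cells) != len(columns):
        raise ValueError("row does not match header")
    key, values = [], {}
    for (name, unique), cell in zip(columns, cells):
        if not name or cell == "<ignore>":
            continue  # unnamed column (e.g. 1022) or explicitly ignored value
        if unique:
            key.append((name, cell))  # part of the lookup key
        else:
            values[name] = cell  # attribute to set on the item
    item_key = (type_name, tuple(key))
    status = "updated" if item_key in store else "inserted"
    store.setdefault(item_key, {}).update(values)
    return status


store: dict = {}
first = insert_update(
    store,
    "INSERT_UPDATE Product;;sapConfigurable;unit(code);"
    "catalogVersion(Catalog(id),version)[unique=true];code[unique=true];"
    "supercategories(code,catalogVersion(catalog(id),version));"
    "sapBaseUnitConversion;sapEAN;sapBlockedDate[dateformat='yyyyMMdd'];"
    "sapBlocked;variantType(code)",
    ";1022;true;PCE;Default:Staged;TKCNF01;CL_TK:ERP_CLASSIFICATION_300:ERP_IMPORT;1;;;false;ERPVariantProduct",
)
second = insert_update(
    store,
    "INSERT_UPDATE Product;;unit(code);name[lang=en];"
    "catalogVersion(Catalog(id),version)[unique=true];code[unique=true]",
    ";1022;PCE;Toolkit (configurable);Default:Staged;TKCNF01",
)
print(first, second, len(store))  # inserted updated 1
```

Because the key is built only from the unique columns (catalog version Default:Staged plus code TKCNF01), the second statement finds the item created by the first and merges its name into it instead of creating a duplicate.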

- Languages

- Currency

- Units

- Classification System

- ClassificationSystemVersion

- ClassificationAttributeUnit

- Countries

- Regions

- ProductTaxGroup

- Vendor

- Warehouse

- Discount

- SAPProductIDDataConversion

- ProductPriceGroup

- ProductDiscountGroup

- UserPriceGroup

- DiscountUserGroup

- Title

- ReferenceDistributionChannelMapping

- ReferenceDivisionMapping