Product Image Visual Search in SAP Commerce Cloud / Hybris Commerce

Visual search is one of the latest breakthroughs, and it is truly making a mark, especially in the eCommerce arena. In 2017, Pinterest launched a visual discovery tool, and eBay launched image search capabilities for mobile devices. These systems use AI and machine learning, which makes their approach too complex for the simple task of grouping products by color.

When you search for a particular product, words are often not enough to find exactly what you need. Sometimes it is easier to browse products similar to one you like, especially in fashion e-shops. Wouldn’t it be amazing if you could just show your computer a picture, or click on the current product picture, and say, “Find all products like this”?

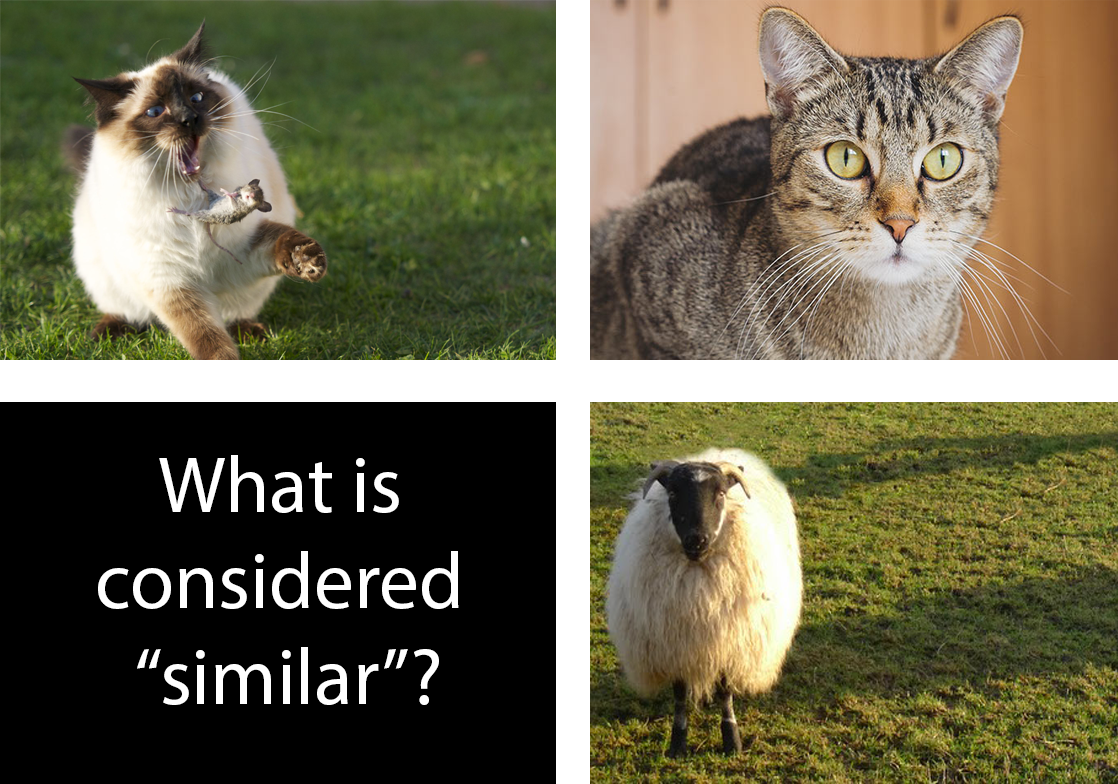

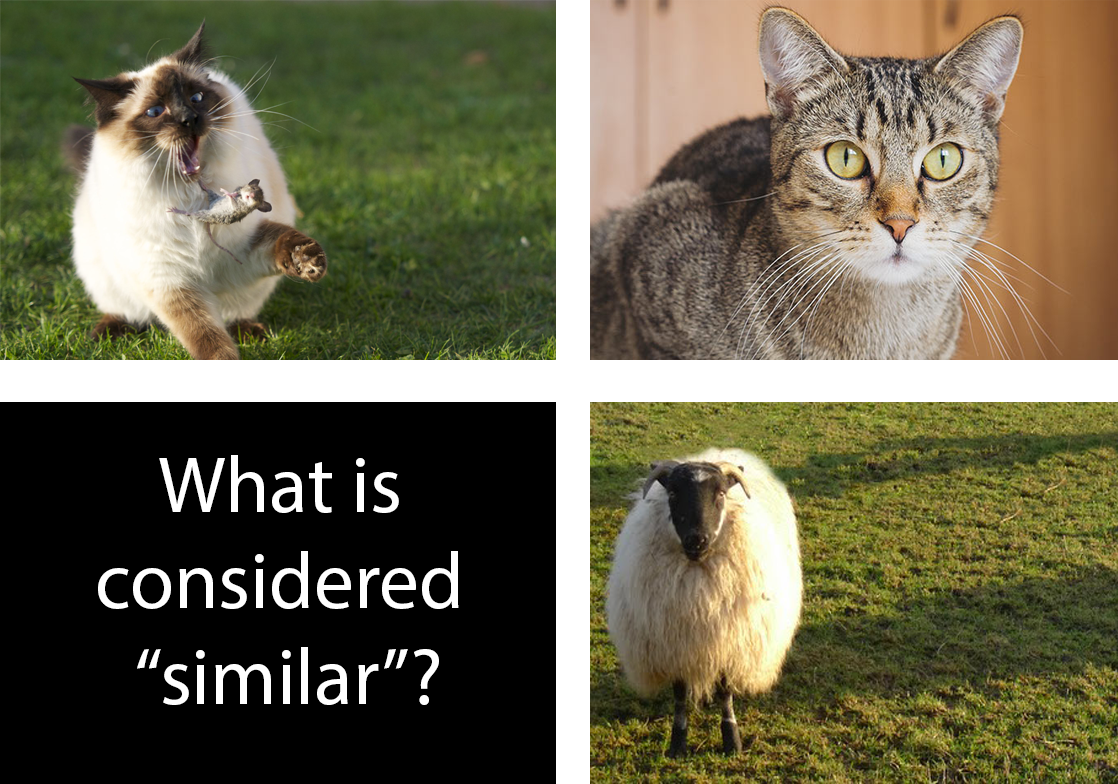

“Like this”? The concept of similar products is complex. The images may show the same objects against different backgrounds, or different objects against the same background. In e-commerce, images are normally cleaned of irrelevant noise, but even then there are complexities. Products can be similar because they are in the same category, because they have similar characteristics, or because your customers buy them with the same set of accessories. So, in the example above, the customer asks the system to find products that look like this. However, even with this clarification, a new question arises:

What are “similar-looking products”? This is a tough question as well. The common technique is to create a fingerprint for each image and to compare these fingerprints: similar images have similar fingerprints. This reduces the complexity and makes the system able to work with thousands or millions of images. Today we will talk about products that are similar because their pictures are similar, namely pictures with visual similarities, e.g. the predominance of some colors and almost identical color layouts.

There are many algorithms for calculating image fingerprints, and many implementations of these algorithms. Both the simplest systems and complex AI and machine learning software rely on the concept of an image fingerprint: a set of numbers representing an image.

There are many image fingerprinting systems available, such as pHash, imgSeek, WindSurf, libpuzzle, ImageTerrier and LIRE. Wikipedia has a good list of such tools. They use different algorithms and implementations. I experimented with the last library from this list, LIRE. Eventually, I needed to integrate it with hybris Search, so it was a big advantage that LIRE has a module for Solr. It helped me greatly to create a proof of concept in a short time.

LIRE was released in 2006 under the GPL. The name LIRE stands for Lucene Image REtrieval, so from the very beginning it had a good foundation for integrating with Solr, which is based on Lucene. Today LIRE is a stable project with a strong community of contributors. The important feature of LIRE for me is that it is written entirely in Java and can be integrated with SAP Hybris.

However, LIRE has some drawbacks too. Its notion of similarity is very rough because it is mostly based on color usage. It also re-ranks the full set of retrieved search results (up to 10,000, which is configurable) even if you request only the first ten items, which means it may work much slower on large datasets than you might expect.

Another product from the list, imgSeek, which is open source and free, is a good alternative to some degree. It analyzes the images, stores them in its own index and provides a query interface. It is not integrated with your Solr instance, so you have to make two separate queries. imgSeek uses Qt and ImageMagick and is implemented in Python 2.4, so it cannot be integrated into the hybris search subsystem as well as I wanted.

There are also external commercial services, such as MatchEngine from TinEye.

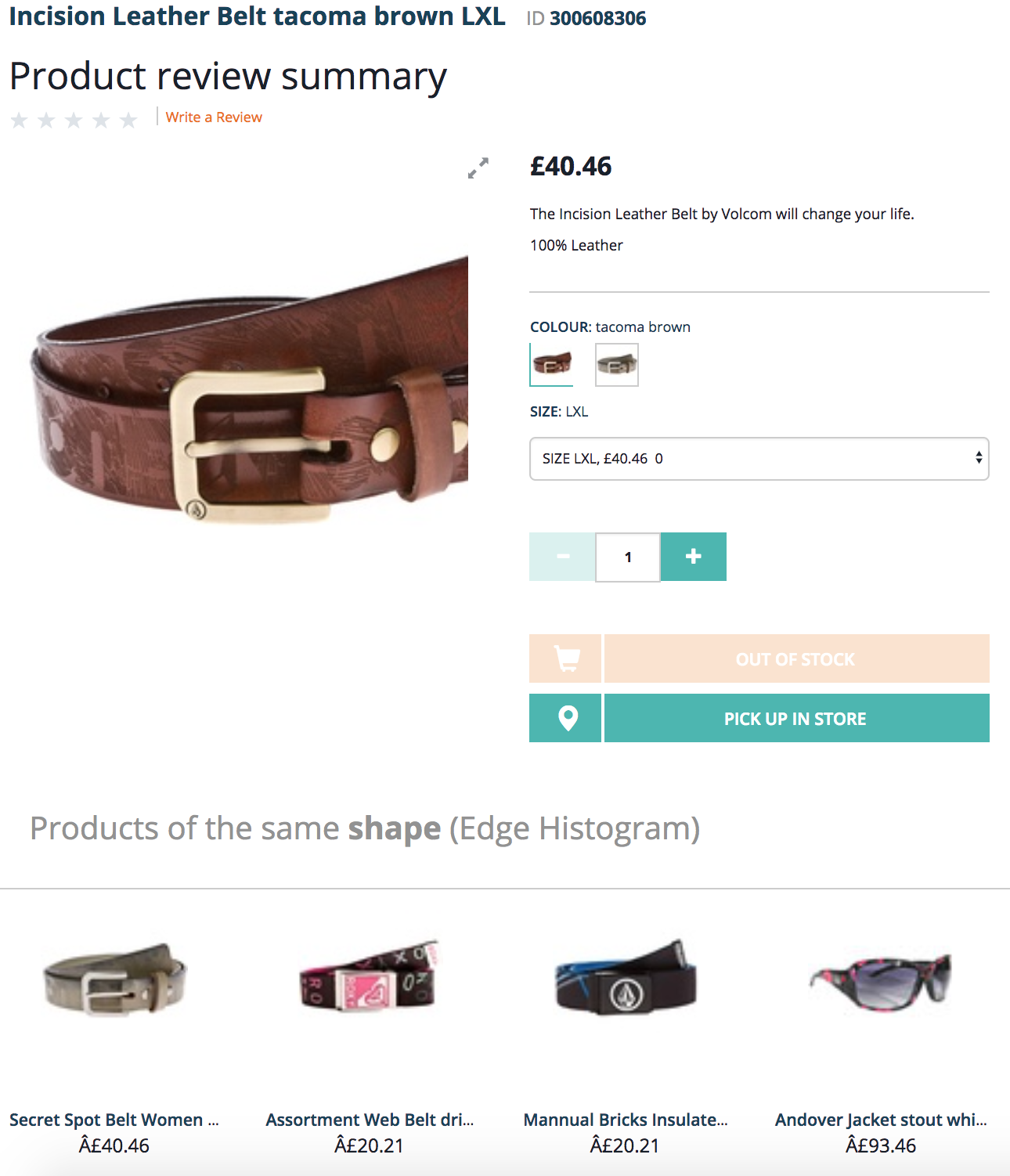

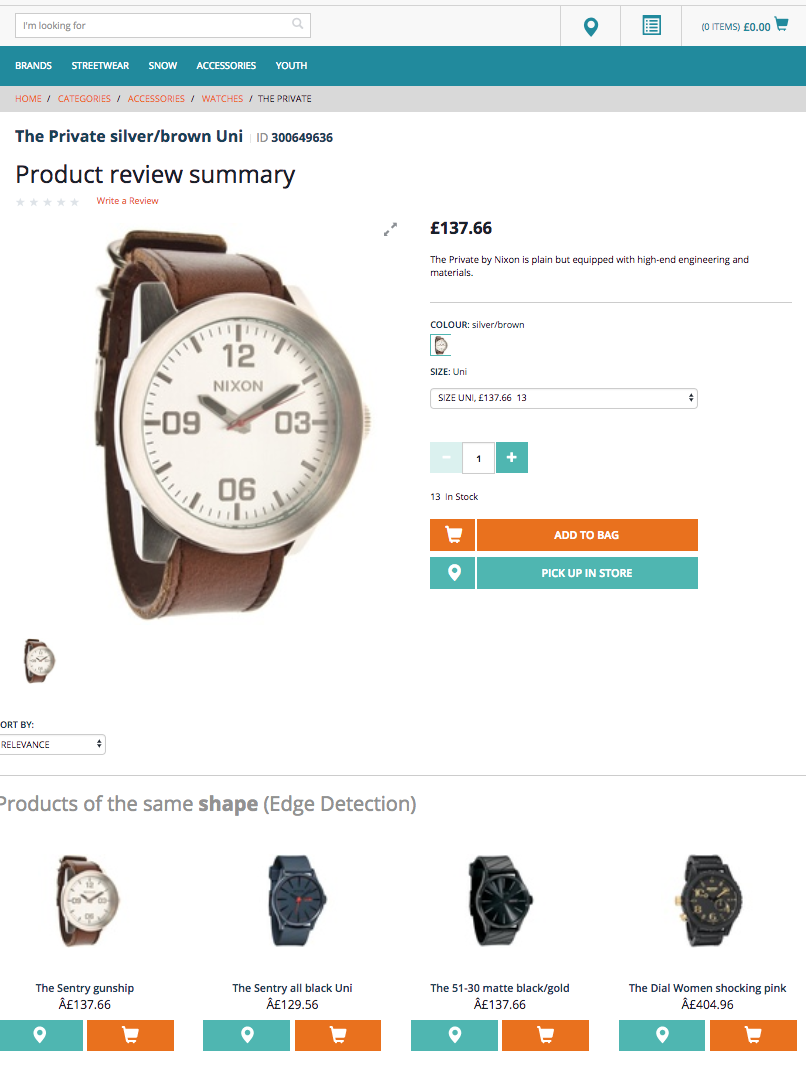

Going forward, I demonstrate the results first:

“Like this”? Similar products concept is a complex thing. The images may have the same objects but with the different backgrounds or the same background but with the same objects. For e-commerce, the images are normally cleaned from the non-relevant noise, but even with that, there are complexities too. Products can be similar because they are in the same category or they have similar characteristics or your customers buy them with the same set of accessories. So, in the example above, the customer asks the system to find the products look like this. However, even with this clarification, a new question comes to the front:

What is “similar-looking products”? It is a tough question as well. The common technique is creating a fingerprint for the images and compare these fingerprints. Similar images have similar fingerprints. It reduces the complexity and makes the system able to work with thousands or millions of images. Today we will talk about products similar because their pictures are similar. Namely, they have visual similarities, e.g. the predominance of some colors and almost identical color layouts.

There are a lot of algorithms for calculating image fingerprints, and a lot of implementations of these algorithms. Basically, both the simplest systems and complex AI and machine learning software use the concept of image fingerprints, creating a set of numbers representing an image.

There are many image fingerprint systems available on the market, such as pHash, imgSeek, WindSurf, libpuzzle, ImageTerrier or LIRE. The great list of such tools is on Wikipedia here. There are different algorithms and implementations. I experimented with the last library from this list, LIRE. Eventually, I needed to integrate it with hybris Search, so it was a big advantage that Lire has a module for Solr. It greatly helped me to create a proof of concept in a short time.

LIRE had released in 2006 under GPL. The name LIRE stands for Lucene Image REtrieval, so it had a good foundation from the very beginning for integrating with Solr, which is based on Lucene. Now LIRE is a stable project with a strong community of contributors. The important feature of LIRE for me that it is written entirely in Java and can be integrated with SAP Hybris.

However, LIRE has some drawbacks too. It does very rough similarity because it is mostly based upon color usage. It re-ranks the search results retrieved in full (up to 10000 which is configurable) even if you request only first ten items, that means that it may work much slower on large datasets than you may expect.

Another product from the list, imgSeek, open source and free, is a good alternative, to some degree. It analyzes the images, stores them in its own index, and provides you with a query interface. This will not be integrated with your SOLR instance and you’ll have to do two separate queries. imgSeek uses QT and ImageMagick, implemented in Python 2.4, so it cannot be well integrated into the Hybris search subsystem as I wanted.

There are external commercial services, such as Match Engine from TinEye, for example.

Going forward, I demonstrate the results first:

So, let’s come back to LIRE-Hybris integration. First, let me give you a bit of theory on how similarity and image fingerprints work. It is necessary to understand the details of the integration.

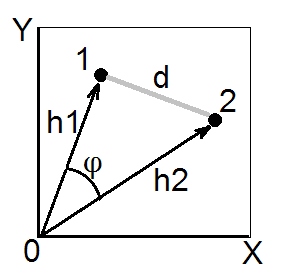

However, the image cannot be represented efficiently by two dimensions. If we add a third dimension, the distance will be calculated in the same way:

However, the image cannot be represented efficiently by two dimensions. If we add a third dimension, the distance will be calculated in the same way:

Vectors that represent images are called feature vectors. Feature vectors have much more dimensions. For example, “Color and Edge Directivity” (CED) is 54 bytes long, so it defines a vector in 54-dimension space, and this vector represents an image. Another popular method is MPEG7 standard image descriptors and FCTH (Fuzzy Color and Texture histogram). These descriptors have the desired properties (visually similar images are mapped to close points in an n-dimensional vector space).

The optically similar images get assigned a similar feature vector, and the optically dissimilar images get assigned a very different feature vector such that the Euclidean distances between the vectors assigned to the first two images are smaller than the Euclidean distances between the vectors assigned to the first two and the third image. Different algorithms create the vectors differently.

Lire supports the following feature vector algorithms:

Vectors that represent images are called feature vectors. Feature vectors have much more dimensions. For example, “Color and Edge Directivity” (CED) is 54 bytes long, so it defines a vector in 54-dimension space, and this vector represents an image. Another popular method is MPEG7 standard image descriptors and FCTH (Fuzzy Color and Texture histogram). These descriptors have the desired properties (visually similar images are mapped to close points in an n-dimensional vector space).

The optically similar images get assigned a similar feature vector, and the optically dissimilar images get assigned a very different feature vector such that the Euclidean distances between the vectors assigned to the first two images are smaller than the Euclidean distances between the vectors assigned to the first two and the third image. Different algorithms create the vectors differently.

Lire supports the following feature vector algorithms:

You can parse it and show with the custom recommended products component.

The request above is not standard, it uses a separate request handler (/lireq).

You can parse it and show with the custom recommended products component.

The request above is not standard, it uses a separate request handler (/lireq).

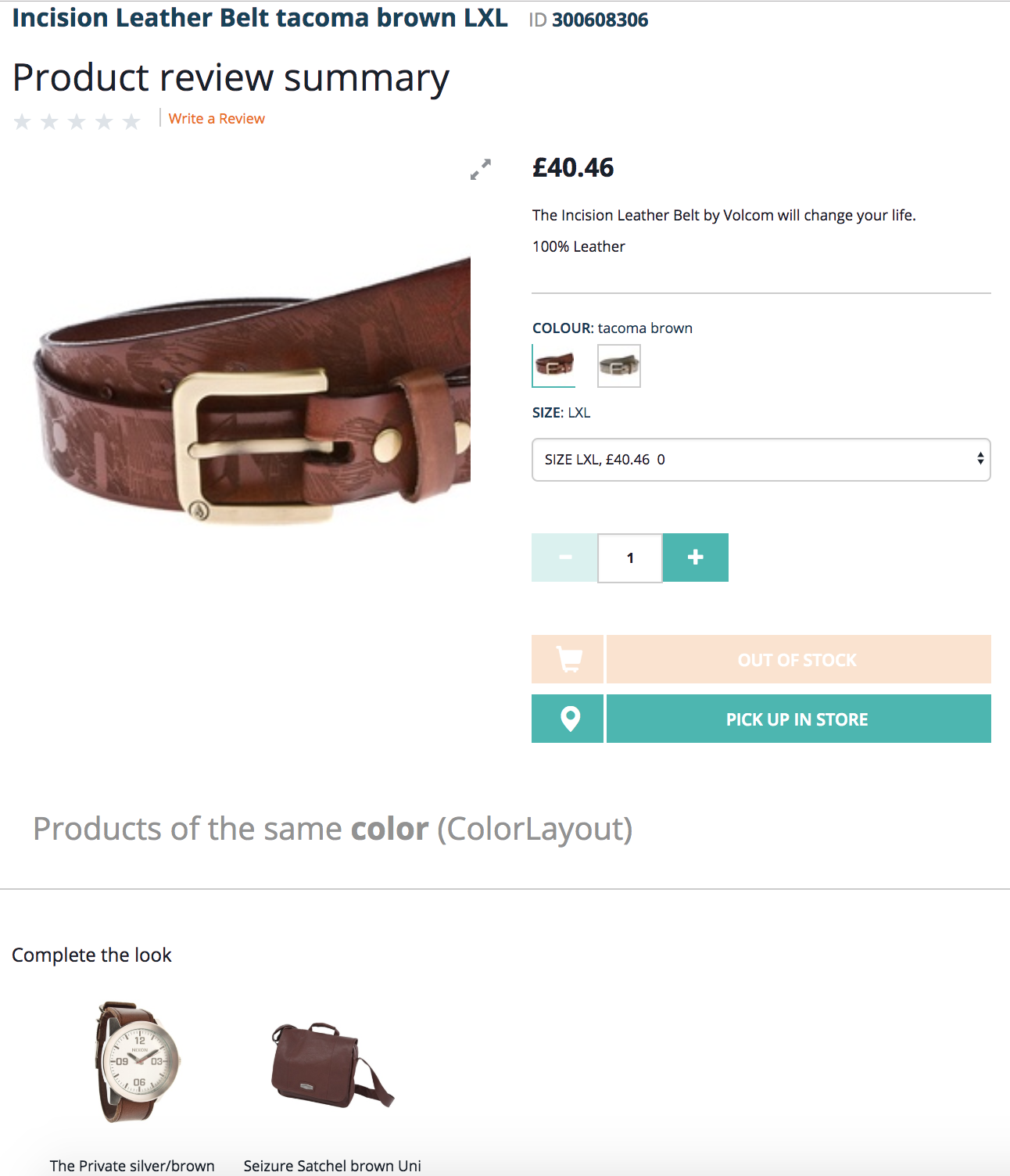

When the product catalog is small or the color cluster is small, the system shows the best possible match (fourth product is not a belt)

When the product catalog is small or the color cluster is small, the system shows the best possible match (fourth product is not a belt)

“Like this”? Similar products concept is a complex thing. The images may have the same objects but with the different backgrounds or the same background but with the same objects. For e-commerce, the images are normally cleaned from the non-relevant noise, but even with that, there are complexities too. Products can be similar because they are in the same category or they have similar characteristics or your customers buy them with the same set of accessories. So, in the example above, the customer asks the system to find the products look like this. However, even with this clarification, a new question comes to the front:

What is “similar-looking products”? It is a tough question as well. The common technique is creating a fingerprint for the images and compare these fingerprints. Similar images have similar fingerprints. It reduces the complexity and makes the system able to work with thousands or millions of images. Today we will talk about products similar because their pictures are similar. Namely, they have visual similarities, e.g. the predominance of some colors and almost identical color layouts.

There are a lot of algorithms for calculating image fingerprints, and a lot of implementations of these algorithms. Basically, both the simplest systems and complex AI and machine learning software use the concept of image fingerprints, creating a set of numbers representing an image.

There are many image fingerprint systems available on the market, such as pHash, imgSeek, WindSurf, libpuzzle, ImageTerrier or LIRE. The great list of such tools is on Wikipedia here. There are different algorithms and implementations. I experimented with the last library from this list, LIRE. Eventually, I needed to integrate it with hybris Search, so it was a big advantage that Lire has a module for Solr. It greatly helped me to create a proof of concept in a short time.

LIRE had released in 2006 under GPL. The name LIRE stands for Lucene Image REtrieval, so it had a good foundation from the very beginning for integrating with Solr, which is based on Lucene. Now LIRE is a stable project with a strong community of contributors. The important feature of LIRE for me that it is written entirely in Java and can be integrated with SAP Hybris.

However, LIRE has some drawbacks too. It does very rough similarity because it is mostly based upon color usage. It re-ranks the search results retrieved in full (up to 10000 which is configurable) even if you request only first ten items, that means that it may work much slower on large datasets than you may expect.

Another product from the list, imgSeek, open source and free, is a good alternative, to some degree. It analyzes the images, stores them in its own index, and provides you with a query interface. This will not be integrated with your SOLR instance and you’ll have to do two separate queries. imgSeek uses QT and ImageMagick, implemented in Python 2.4, so it cannot be well integrated into the Hybris search subsystem as I wanted.

There are external commercial services, such as Match Engine from TinEye, for example.

Going forward, I demonstrate the results first:

“Like this”? Similar products concept is a complex thing. The images may have the same objects but with the different backgrounds or the same background but with the same objects. For e-commerce, the images are normally cleaned from the non-relevant noise, but even with that, there are complexities too. Products can be similar because they are in the same category or they have similar characteristics or your customers buy them with the same set of accessories. So, in the example above, the customer asks the system to find the products look like this. However, even with this clarification, a new question comes to the front:

What is “similar-looking products”? It is a tough question as well. The common technique is creating a fingerprint for the images and compare these fingerprints. Similar images have similar fingerprints. It reduces the complexity and makes the system able to work with thousands or millions of images. Today we will talk about products similar because their pictures are similar. Namely, they have visual similarities, e.g. the predominance of some colors and almost identical color layouts.

There are a lot of algorithms for calculating image fingerprints, and a lot of implementations of these algorithms. Basically, both the simplest systems and complex AI and machine learning software use the concept of image fingerprints, creating a set of numbers representing an image.

There are many image fingerprint systems available on the market, such as pHash, imgSeek, WindSurf, libpuzzle, ImageTerrier or LIRE. The great list of such tools is on Wikipedia here. There are different algorithms and implementations. I experimented with the last library from this list, LIRE. Eventually, I needed to integrate it with hybris Search, so it was a big advantage that Lire has a module for Solr. It greatly helped me to create a proof of concept in a short time.

LIRE had released in 2006 under GPL. The name LIRE stands for Lucene Image REtrieval, so it had a good foundation from the very beginning for integrating with Solr, which is based on Lucene. Now LIRE is a stable project with a strong community of contributors. The important feature of LIRE for me that it is written entirely in Java and can be integrated with SAP Hybris.

However, LIRE has some drawbacks too. It does very rough similarity because it is mostly based upon color usage. It re-ranks the search results retrieved in full (up to 10000 which is configurable) even if you request only first ten items, that means that it may work much slower on large datasets than you may expect.

Another product from the list, imgSeek, open source and free, is a good alternative, to some degree. It analyzes the images, stores them in its own index, and provides you with a query interface. This will not be integrated with your SOLR instance and you’ll have to do two separate queries. imgSeek uses QT and ImageMagick, implemented in Python 2.4, so it cannot be well integrated into the Hybris search subsystem as I wanted.

There are external commercial services, such as Match Engine from TinEye, for example.

Going forward, I demonstrate the results first:

| Color similarity |  (larger)

(larger) |

(larger)

(larger) |

(larger)

(larger) |

| Shape similarity |  (larger)

(larger) |

(larger)

(larger) |

(larger)

(larger) |

How Image Similarity Works

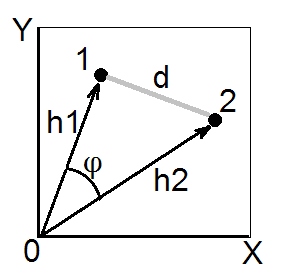

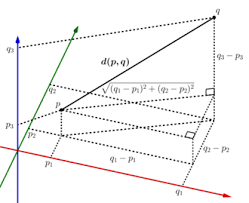

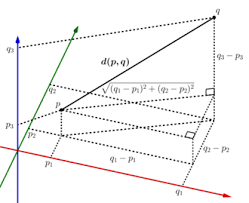

The common approach to image fingerprinting is to represent the image with the minimal amount of information needed to encode its essential properties. This minimal information is called an image descriptor, and the process of computing it is known as feature extraction. The key idea is to use a function that maps images into an n-dimensional Euclidean vector space ($\mathbb{R}^n$) such that similar images are mapped to points close to each other and dissimilar images are mapped to points far away from each other. A vector distance, such as the simple Euclidean distance, then provides a similarity metric that determines which images are more similar to each other: the smaller the distance, the more similar the images. To build the result list for our sample application, we just need to sort all the images in a directory by their distance to the query image.

For two dimensions, with two images represented by the points $p = (p_1, p_2)$ and $q = (q_1, q_2)$, the distance is:

$$d(p, q) = \sqrt{(p_1 - q_1)^2 + (p_2 - q_2)^2}$$

However, an image cannot be represented efficiently with two dimensions. If we add a third dimension, the distance is calculated in the same way:

$$d(p, q) = \sqrt{(p_1 - q_1)^2 + (p_2 - q_2)^2 + (p_3 - q_3)^2}$$

and, in general, for $n$ dimensions, $d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}$.
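To make the ranking step concrete, here is a minimal Java sketch, not taken from LIRE: it computes the Euclidean distance between feature vectors and sorts a small in-memory index by distance to a query vector. The product ids and the three-dimensional vectors are hypothetical; real descriptors have far more dimensions.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class EuclideanRanking {

    /** Euclidean distance between two feature vectors of the same dimension. */
    static double distance(double[] p, double[] q) {
        if (p.length != q.length) {
            throw new IllegalArgumentException("Feature vectors must have the same dimension");
        }
        double sum = 0.0;
        for (int i = 0; i < p.length; i++) {
            double diff = p[i] - q[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    /** Returns the image ids sorted by ascending distance to the query vector (most similar first). */
    static List<String> rank(double[] query, Map<String, double[]> index) {
        List<String> ids = new ArrayList<>(index.keySet());
        ids.sort(Comparator.comparingDouble(id -> distance(query, index.get(id))));
        return ids;
    }

    public static void main(String[] args) {
        // Hypothetical feature vectors keyed by product id.
        Map<String, double[]> index = new LinkedHashMap<>();
        index.put("red-dress", new double[] {0.9, 0.1, 0.1});
        index.put("blue-jeans", new double[] {0.1, 0.2, 0.9});
        index.put("red-scarf", new double[] {0.8, 0.2, 0.1});

        double[] query = {0.88, 0.12, 0.1};
        System.out.println(rank(query, index)); // [red-dress, red-scarf, blue-jeans]
    }
}
```

Sorting the whole index like this is exactly what makes the naive approach expensive on large catalogs, which is why the hashing techniques discussed later are used to pre-select candidates.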


Vectors that represent images are called feature vectors, and they have many more dimensions. For example, the Color and Edge Directivity Descriptor (CEDD) is a 144-bin histogram with each bin quantized to 3 bits, so it fits into 54 bytes and defines a point in a 144-dimensional space that represents an image. Other popular choices are the MPEG-7 standard image descriptors and FCTH (Fuzzy Color and Texture Histogram). These descriptors have the desired property: visually similar images are mapped to close points in an n-dimensional vector space.

Visually similar images get similar feature vectors, and visually dissimilar images get very different ones. For example, given two similar images and a third, dissimilar one, the Euclidean distance between the vectors of the first two images is smaller than the distance between either of them and the third image. Different algorithms create the vectors differently.

LIRE supports the following feature vector algorithms (the field code is given in parentheses):

- MPEG-7 Color Layout (cl)

- Pyramid histogram of oriented gradients, PHOG (ph)

- MPEG-7 Edge Histogram (eh)

- AutoColorCorrelogram (ac)

- Color and Edge Directivity Descriptor, CEDD (ce)

- Fuzzy Color and Texture histogram, FCTH (fc)

- Joint Composite Descriptor, JCD (jc)

- Opponent Histogram (oh)

- ACCID (ad)

- Fuzzy Opponent Histogram (fo)

- Joint Histogram (jh)

- MPEG-7 Scalable Color (sc)

- SPCEDD (pc)

MPEG-7 Color Layout

Simply put, it represents the spatial distribution of colors in an image in a compact form. The image is divided into 8×8 blocks, and the representative (average) color of each block is computed in the YCbCr color space. This gives three 8×8 arrays (Y, Cb and Cr). The descriptor is obtained by applying the discrete cosine transform (DCT) to each of these arrays; the coefficients are then read in zigzag order (the standard keeps only a few low-frequency coefficients). The full descriptor (i.e. the fingerprint) is a 3×64 representation of the image. By the way, partitioning the image into blocks, the DCT and zigzag scanning are also used in the JPEG format.

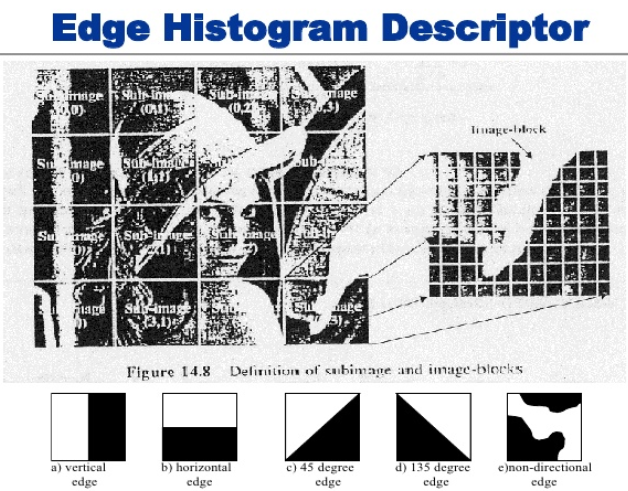

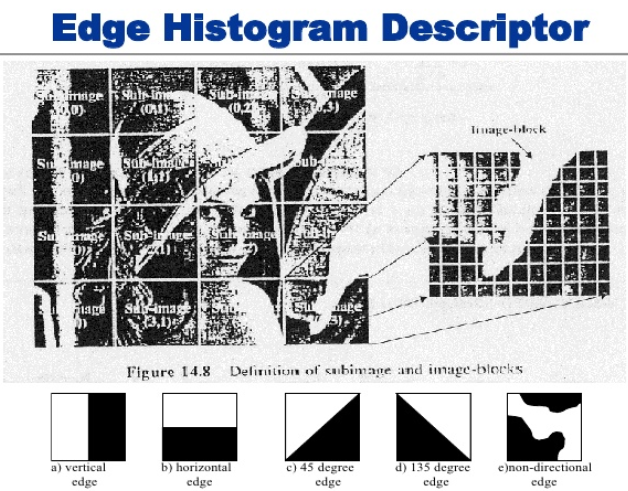
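The Color Layout steps can be sketched in plain Java as follows. This is a simplified illustration, not LIRE's ColorLayout implementation: it averages the colors of the 8×8 blocks, converts them with the JPEG (BT.601) RGB-to-YCbCr formulas and applies an orthonormal 2D DCT to each channel. The zigzag scan and the coefficient quantization of the real MPEG-7 descriptor are left out.

```java
import java.awt.image.BufferedImage;

final class ColorLayoutSketch {

    /** Simplified MPEG-7 Color Layout: 8x8 DCT coefficients for each of Y, Cb and Cr. */
    static double[][][] colorLayout(BufferedImage img) {
        int w = img.getWidth(), h = img.getHeight();
        double[][][] avg = new double[3][8][8]; // per-block sums (later averages) of Y, Cb, Cr
        int[][] count = new int[8][8];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int bx = x * 8 / w, by = y * 8 / h; // which of the 8x8 blocks this pixel falls in
                int rgb = img.getRGB(x, y);
                int r = (rgb >> 16) & 0xFF, g = (rgb >> 8) & 0xFF, b = rgb & 0xFF;
                // JPEG (ITU-R BT.601, full range) RGB -> YCbCr conversion
                avg[0][by][bx] += 0.299 * r + 0.587 * g + 0.114 * b;
                avg[1][by][bx] += 128 - 0.168736 * r - 0.331264 * g + 0.5 * b;
                avg[2][by][bx] += 128 + 0.5 * r - 0.418688 * g - 0.081312 * b;
                count[by][bx]++;
            }
        }
        double[][][] coefficients = new double[3][][];
        for (int c = 0; c < 3; c++) {
            for (int v = 0; v < 8; v++) {
                for (int u = 0; u < 8; u++) {
                    avg[c][v][u] /= Math.max(1, count[v][u]); // representative (average) color
                }
            }
            coefficients[c] = dct8x8(avg[c]);
        }
        return coefficients;
    }

    /** Orthonormal 2D DCT-II of an 8x8 array; out[0][0] is the DC coefficient. */
    static double[][] dct8x8(double[][] f) {
        double[][] out = new double[8][8];
        for (int v = 0; v < 8; v++) {
            for (int u = 0; u < 8; u++) {
                double sum = 0;
                for (int y = 0; y < 8; y++) {
                    for (int x = 0; x < 8; x++) {
                        sum += f[y][x]
                                * Math.cos((2 * x + 1) * u * Math.PI / 16)
                                * Math.cos((2 * y + 1) * v * Math.PI / 16);
                    }
                }
                double cu = u == 0 ? Math.sqrt(1.0 / 8) : Math.sqrt(2.0 / 8);
                double cv = v == 0 ? Math.sqrt(1.0 / 8) : Math.sqrt(2.0 / 8);
                out[v][u] = cu * cv * sum;
            }
        }
        return out;
    }
}
```

For a single-colored image all AC coefficients are zero and only the DC terms carry information, which is why comparing the first few low-frequency coefficients is enough to capture the overall color layout.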

Edge Histogram Descriptor

It represents the spatial distribution of five types of edges in the image. The image is subdivided into 4×4 sub-images, and for each of them a local histogram of five broadly grouped edge types (vertical, horizontal, 45-degree diagonal, 135-degree diagonal and non-directional) is computed. To do this, each sub-image is divided into small image blocks, and each block is classified into one of the edge types (or as containing no edge) using a corresponding filter for each edge type. As a result, the descriptor has 4×4×5 = 80 bins. This method is therefore used mainly for describing shape features.
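The following Java sketch shows how a single image block could be classified with the five 2×2 edge filters commonly cited for the MPEG-7 Edge Histogram Descriptor. It takes the mean gray levels of the block's four sub-blocks; the threshold is a parameter you have to choose (an assumption here), and the accumulation into the 80-bin histogram is omitted. It is an illustration, not LIRE's EdgeHistogram code.

```java
final class EdgeBlockClassifier {

    enum EdgeType { VERTICAL, HORIZONTAL, DIAGONAL_45, DIAGONAL_135, NON_DIRECTIONAL, NONE }

    private static final double S = Math.sqrt(2);

    // Filter coefficients applied to the sub-block means in the order
    // top-left, top-right, bottom-left, bottom-right (same order as EdgeType).
    private static final double[][] FILTERS = {
        {1, -1, 1, -1},  // vertical
        {1, 1, -1, -1},  // horizontal
        {S, 0, 0, -S},   // 45-degree diagonal
        {0, S, -S, 0},   // 135-degree diagonal
        {2, -2, -2, 2}   // non-directional
    };

    /** Returns the edge type with the strongest filter response, or NONE if it is below the threshold. */
    static EdgeType classify(double tl, double tr, double bl, double br, double threshold) {
        double[] a = {tl, tr, bl, br};
        int best = -1;
        double bestStrength = threshold;
        for (int f = 0; f < FILTERS.length; f++) {
            double response = 0;
            for (int k = 0; k < 4; k++) {
                response += a[k] * FILTERS[f][k];
            }
            double strength = Math.abs(response);
            if (strength >= bestStrength) {
                bestStrength = strength;
                best = f;
            }
        }
        return best < 0 ? EdgeType.NONE : EdgeType.values()[best];
    }
}
```

In a full implementation, the resulting type of each block increments one of the five bins of its sub-image, giving 16 × 5 = 80 bins in total.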

Pyramid Histogram Of Oriented Gradients (PHOG)

The technique counts occurrences of gradient orientations in localized portions of an image. It is similar to edge orientation histograms, but differs in that it is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization for improved accuracy. The essential idea behind the histogram of oriented gradients is that local object appearance and shape within an image can be described by the distribution of intensity gradients or edge directions. The image is divided into small connected regions called cells, and for the pixels within each cell, a histogram of gradient directions is compiled. The descriptor is the concatenation of these histograms. PHOG applies this idea at several levels of a spatial pyramid (the whole image, then 2×2 cells, then 4×4 cells, and so on) and concatenates the histograms of all levels.
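A simplified PHOG can be sketched in Java like this. It is an illustration under several assumptions, not LIRE's PHOG class: it uses central-difference gradients on every pixel of a grayscale array (the original PHOG works on edge pixels), weights each orientation vote by the gradient magnitude, skips local contrast normalization and L1-normalizes the concatenated pyramid histogram.

```java
final class PhogSketch {

    /**
     * Magnitude-weighted histograms of unsigned gradient orientation (0..180 degrees)
     * for every cell of a spatial pyramid, concatenated and L1-normalized.
     * Level l splits the image into 2^l x 2^l cells.
     */
    static double[] phog(double[][] gray, int bins, int levels) {
        int h = gray.length, w = gray[0].length;
        int[] offsets = new int[levels]; // start of each level's histograms in the descriptor
        int size = 0;
        for (int l = 0; l < levels; l++) {
            offsets[l] = size;
            size += (1 << l) * (1 << l) * bins;
        }
        double[] desc = new double[size];
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                double gx = gray[y][x + 1] - gray[y][x - 1]; // central differences
                double gy = gray[y + 1][x] - gray[y - 1][x];
                double magnitude = Math.hypot(gx, gy);
                if (magnitude == 0) continue;
                double angle = Math.toDegrees(Math.atan2(gy, gx));
                if (angle < 0) angle += 180; // fold to an unsigned orientation
                if (angle >= 180) angle -= 180;
                int bin = Math.min(bins - 1, (int) (angle / 180 * bins));
                for (int l = 0; l < levels; l++) {
                    int n = 1 << l;
                    int cell = (y * n / h) * n + (x * n / w);
                    desc[offsets[l] + cell * bins + bin] += magnitude;
                }
            }
        }
        double total = 0;
        for (double v : desc) total += v;
        if (total > 0) {
            for (int i = 0; i < desc.length; i++) desc[i] /= total;
        }
        return desc;
    }
}
```

With 9 bins and 2 levels the descriptor has 9 + 4 × 9 = 45 values: the first 9 describe the whole image and the remaining 36 describe its four quadrants.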

Other algorithms

The color correlogram expresses how the spatial correlation of pairs of colors changes with distance. Informally, a correlogram for an image is a table indexed by color pairs, where the d-th entry for row (i, j) specifies the probability of finding a pixel of color j at a distance d from a pixel of color i in this image. An autocorrelogram captures the spatial correlation between identical colors only.

The Color and Edge Directivity Descriptor (CEDD) combines a 24-bin fuzzy color histogram with 6 types of edges (see the edge histogram descriptor above). After extraction, the histogram is normalized and quantized. This results in an overall descriptor length of 54 bytes, so it is compact. The Fuzzy Color and Texture Histogram (FCTH) uses the same fuzzy color scheme as CEDD, but it has a more extensive edge description method. It is also more accurate, but less compact than CEDD. A combination of CEDD and FCTH is the Joint Composite Descriptor (JCD). All three are robust to scaling and rotation, while color layout and edge histogram are robust only to scaling.

OpponentHistogram is a simple color histogram in the opponent color space (luminance / red-green / blue-yellow).

Integrating LIRE with hybris

There are two processes that need to be implemented:

- indexing the images: creating the image descriptors and adding them to the Solr index along with the products these images belong to

- getting the products that have images similar to X: configuring Solr to use the descriptors as search criteria

Configuring Solr

- Add a request handler to config/solr/instances/default/configsets/default/conf/solrconfig.xml, next to the existing requestHandler name="/select":

  ```xml
  <requestHandler name="/lireq" class="net.semanticmetadata.lire.solr.LireRequestHandler">
    <lst name="defaults">
      <str name="echoParams">explicit</str>
      <str name="wt">json</str>
      <str name="indent">true</str>
    </lst>
  </requestHandler>
  ```

- Add a value source parser (also in solrconfig.xml). It provides the lirefunc function, which allows ranking based on the distance function and can be added to text-based search queries; for example, it can be used for sorting in a normal /select Solr request:

  ```xml
  <valueSourceParser name="lirefunc" class="net.semanticmetadata.lire.solr.LireValueSourceParser" />
  ```

- Add the dynamic field definitions and the DocValues-based binary field to the Solr schema:

  ```xml
  <dynamicField name="*_ha" type="text_ws" indexed="true" stored="false"/> <!-- if you are using BitSampling -->
  <dynamicField name="*_ms" type="text_ws" indexed="true" stored="false"/> <!-- if you are using Metric Spaces Indexing -->
  <dynamicField name="*_hi" type="binaryDV" indexed="false" stored="true"/>
  ```

- Compile the LIRE library (gradle distForSolr) and copy dist/*.jar to hybris/bin/ext-commerce/solrserver/resources/solr/7.4/server/server/solr-webapp/webapp/WEB-INF/lib.
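The `*_ha` fields hold BitSampling hashes, a form of locality-sensitive hashing: close feature vectors are likely to get the same hash values, so Solr can use the hashes as cheap search terms to retrieve candidates that are then re-ranked by the exact distance. The Java sketch below shows a generic random-hyperplane variant of this idea (it approximates angular similarity); it is not LIRE's BitSampling code, and the dimensions, bit count and seed are arbitrary.

```java
import java.util.Random;

final class RandomHyperplaneLsh {

    private final double[][] hyperplanes; // one random hyperplane through the origin per hash bit

    RandomHyperplaneLsh(int dimensions, int bits, long seed) {
        if (bits < 1 || bits > 31) throw new IllegalArgumentException("bits must be in 1..31");
        Random random = new Random(seed);
        hyperplanes = new double[bits][dimensions];
        for (double[] plane : hyperplanes) {
            for (int i = 0; i < dimensions; i++) plane[i] = random.nextGaussian();
        }
    }

    /** Bit b is 1 when the vector lies on the positive side of hyperplane b. */
    int hash(double[] vector) {
        int h = 0;
        for (int b = 0; b < hyperplanes.length; b++) {
            double dot = 0;
            for (int i = 0; i < vector.length; i++) dot += hyperplanes[b][i] * vector[i];
            if (dot >= 0) h |= 1 << b;
        }
        return h;
    }

    /** Number of differing bits; small values suggest the vectors point in similar directions. */
    static int hammingDistance(int a, int b) {
        return Integer.bitCount(a ^ b);
    }
}
```

Because only the sign of each projection matters, a scaled copy of a histogram gets exactly the same hash, while unrelated histograms tend to differ in many bits.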

Indexing the images

The goal of this process is to enrich the products in Solr with information about the product picture, namely the image feature vectors. In hybris, we need to extend the indexing subsystem to add extra fields to the indexing request. For the PoC, I extended DefaultIndexer. Add the LIRE library (jar) to the /lib folder of your extension. For my extension, I took the code from LIRE's ParallelSolrIndexer.java (start reading the code from the run() method). The code opens the media file for the product, trims the white space around the product image (ImageUtils.trimWhiteSpace) and downscales the image so that its longer side is at most 512 pixels (ImageUtils.scaleImage). For each registered global feature algorithm, it adds the dynamic field values to the indexing request:

```java
// featureAlgorithmClass: one of the configured GlobalFeature classes; img: the preprocessed image
GlobalFeature feature = featureAlgorithmClass.getDeclaredConstructor().newInstance();
feature.extract(img);
String featureAlg = getCodeForClass(featureAlgorithmClass); // cl, eh, jc or ph
document.addField(featureAlg + "_hi", Base64.getEncoder().encodeToString(feature.getByteArrayRepresentation()));
document.addField(featureAlg + "_ha", BitSampling.generateHashes(feature.getFeatureVector()));
document.addField(featureAlg + "_ms", MetricSpaces.generateHashString(feature));
```

The list of feature algorithms is configurable. I used ColorLayout.class, EdgeHistogram.class, JCD.class and PHOG.class with the codes cl, eh, jc and ph, respectively. The listed classes are part of the LIRE library. So, for 4 algorithms you get 12 additional fields (3 per algorithm) in the request.
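The preprocessing steps mentioned above (trimming the white space and downscaling to at most 512 pixels on the longer side) can be approximated with plain Java 2D, as in the sketch below. These helpers are stand-ins written for illustration, not LIRE's ImageUtils.trimWhiteSpace and ImageUtils.scaleImage; the near-white threshold is an assumption, and the sketch assumes opaque images.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

final class ImagePreprocessing {

    /** Crops away the border whose pixels are all at least minLevel in R, G and B (near-white). */
    static BufferedImage trimWhiteSpace(BufferedImage img, int minLevel) {
        int w = img.getWidth(), h = img.getHeight();
        int top = h, bottom = -1, left = w, right = -1;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int rgb = img.getRGB(x, y);
                int r = (rgb >> 16) & 0xFF, g = (rgb >> 8) & 0xFF, b = rgb & 0xFF;
                if (r < minLevel || g < minLevel || b < minLevel) { // a non-white pixel
                    top = Math.min(top, y);
                    bottom = Math.max(bottom, y);
                    left = Math.min(left, x);
                    right = Math.max(right, x);
                }
            }
        }
        if (bottom < 0) return img; // completely white: nothing to trim
        return img.getSubimage(left, top, right - left + 1, bottom - top + 1);
    }

    /** Downscales so that the longer side is at most maxSide pixels; never upscales. */
    static BufferedImage scaleToMaxSide(BufferedImage img, int maxSide) {
        int w = img.getWidth(), h = img.getHeight();
        double factor = (double) maxSide / Math.max(w, h);
        if (factor >= 1.0) return img;
        int nw = Math.max(1, (int) Math.round(w * factor));
        int nh = Math.max(1, (int) Math.round(h * factor));
        BufferedImage out = new BufferedImage(nw, nh, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        g.setColor(Color.WHITE); // white background in case the source has transparent areas
        g.fillRect(0, 0, nw, nh);
        g.setRenderingHint(RenderingHints.KEY_INTERPOLATION, RenderingHints.VALUE_INTERPOLATION_BILINEAR);
        g.drawImage(img, 0, 0, nw, nh, null);
        g.dispose();
        return out;
    }
}
```

Trimming first matters for fingerprinting: otherwise the white margin dominates color-based descriptors such as Color Layout, and products photographed with different margins would look less similar than they are.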

Search

There are two options:

- Using a designated request handler (/lireq)

- Using a function (lirefunc)

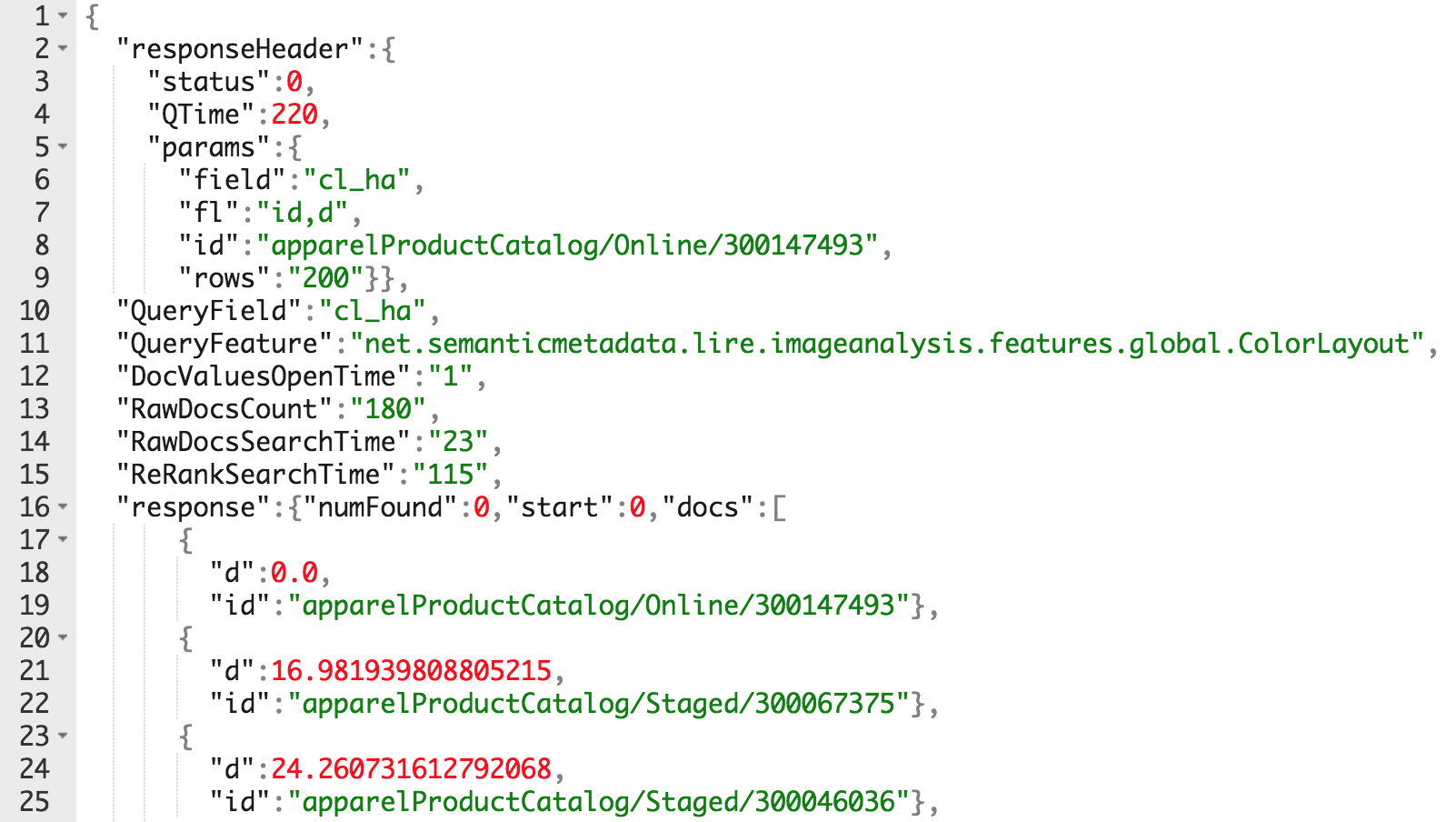

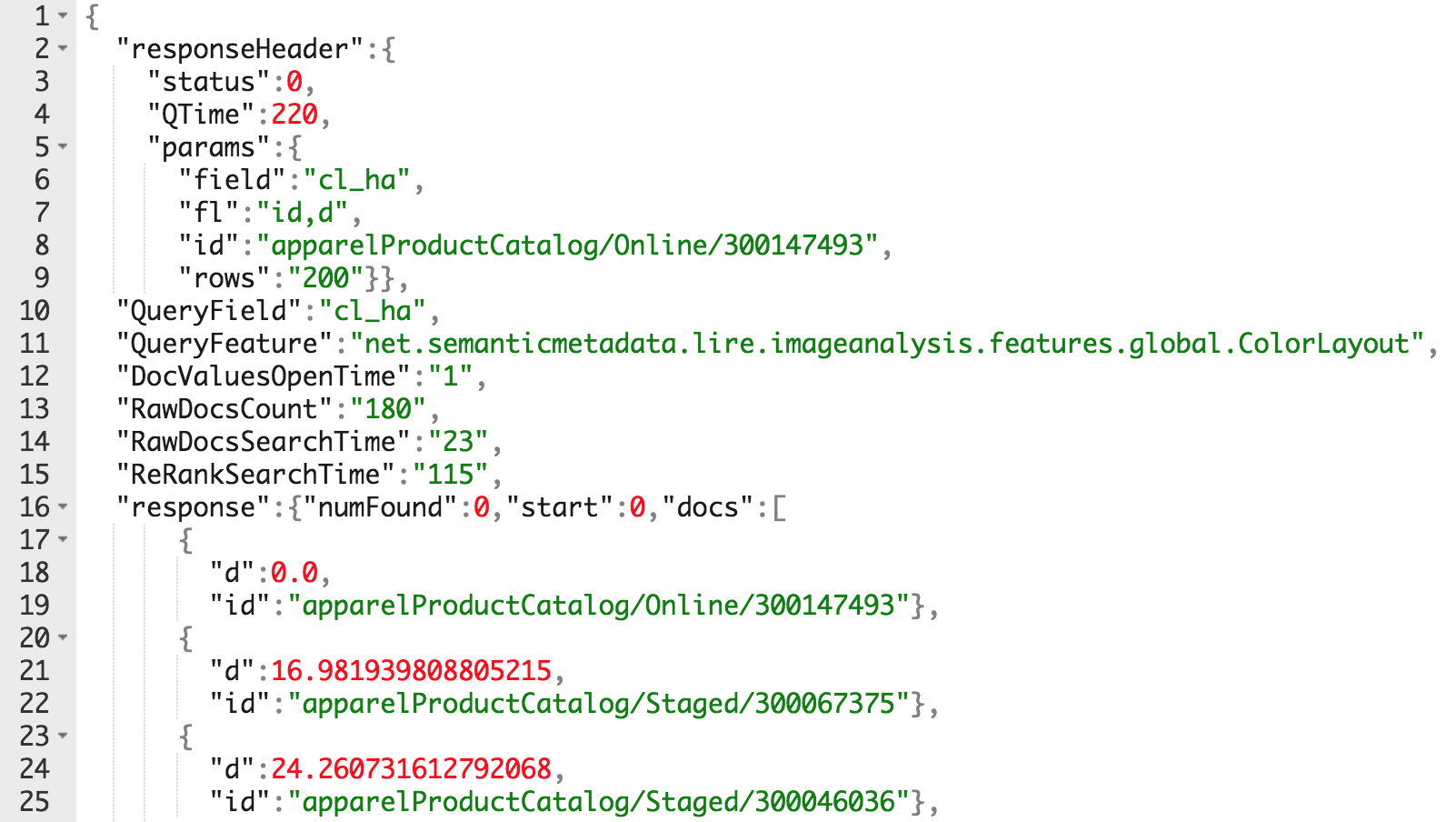

https://localhost:8983/solr/master_apparel-uk_Product_default/lireq?id=apparelProductCatalog/Online/300147493&fl=id,d&field=cl_ha

You can parse the response and display the products with a custom recommended-products component.

The request above is not a standard one: it uses a separate request handler (/lireq).
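If you call the handler from Java, note that hybris product document ids contain slashes, so the parameters should be URL-encoded. A minimal sketch that reproduces the request above (the class and method names are hypothetical; only the id, fl and field parameters from the example are used):

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

final class LireQueryUrl {

    /** Builds a /lireq request for documents similar to the indexed document with the given id. */
    static String similarById(String coreUrl, String id, String field, String fl) {
        return coreUrl + "/lireq"
                + "?id=" + encode(id)       // e.g. apparelProductCatalog/Online/300147493
                + "&fl=" + encode(fl)       // fields to return, e.g. id and the distance d
                + "&field=" + encode(field); // hash field to search, e.g. cl_ha
    }

    private static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(similarById(
                "https://localhost:8983/solr/master_apparel-uk_Product_default",
                "apparelProductCatalog/Online/300147493", "cl_ha", "id,d"));
        // prints https://localhost:8983/solr/master_apparel-uk_Product_default/lireq?id=apparelProductCatalog%2FOnline%2F300147493&fl=id%2Cd&field=cl_ha
    }
}
```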


Using a designated request handler

The LIRE request handler supports the following operations:

- get images that look like the one
  - having a specific ID
  - found at a URL

- get images with a given feature vector

- extract the histogram and hashes from an image URL

Using lirefunc

The lirefunc function can be used together with the standard select request that hybris normally uses. The function lirefunc(arg1,arg2) is available for function queries. Two arguments are required, and a third one is optional:

- The feature to be used for computing the distance between the result and the reference image. Possible values are {cl, ph, eh, jc}.

- The actual Base64-encoded feature vector of the reference image. It can be obtained by calling getByteArrayRepresentation() on the reference image's feature (as in the indexing code above) and Base64-encoding the resulting byte[] data, or by using the extract operation of the request handler.

- Optional: the maximum distance for data items that cannot be processed, i.e. documents that do not have the respective field.

For example:

- `[solrurl]/select?q=*:*&fl=id,lirefunc(cl,"FQY5DhMYDg…AQEBA=")` adds the distance to the reference image to the results

- `[solrurl]/select?q=*:*&sort=lirefunc(cl,"FQY5DhMYDg…AQEBA=")+asc` sorts the results by their distance to the reference image
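The second lirefunc argument is plain Base64, which can contain +, / and = characters, so it should be URL-encoded when the function is put into a request. The Java sketch below builds select parameters that both return and sort by the distance, starting from raw feature bytes (in practice, the bytes returned by getByteArrayRepresentation() for the reference image). The class and method names are hypothetical, and the bytes in the usage check are placeholders.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

final class LireFuncQuery {

    /** Builds the lirefunc(...) expression from a feature code (cl, ph, eh, jc) and raw feature bytes. */
    static String lirefunc(String featureCode, byte[] featureBytes) {
        String base64 = Base64.getEncoder().encodeToString(featureBytes);
        return "lirefunc(" + featureCode + ",\"" + base64 + "\")";
    }

    /** Select parameters that return the id plus the distance and sort nearest-first. */
    static String selectParams(String query, String featureCode, byte[] featureBytes) {
        String fn = lirefunc(featureCode, featureBytes);
        return "q=" + enc(query)
                + "&fl=" + enc("id," + fn)
                + "&sort=" + enc(fn + " asc");
    }

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8); // a space becomes "+", "+" becomes "%2B"
    }
}
```

Appending the result of selectParams to [solrurl]/select? gives a request equivalent to the two examples above combined.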

Performance

First, for large sets of images, the solution above may be too slow because all images are processed per request. You may want to precalculate the recommendations (e.g. products of the same color) to make it much faster. However, for the generic task, performance issues are inevitable as your dataset grows, even with optimization techniques such as locality-sensitive hashing, which the current solution uses (BitSampling). Second, you need to double-check which feature vectors you really need in the index. Each additional vector may add gigabytes to the index size and, as a result, slow down both indexing and searching. Third, this task can also be solved with machine learning and with more advanced feature extraction algorithms, such as SURF and SIFT. However, this has to be left for another day.

PoC screenshots

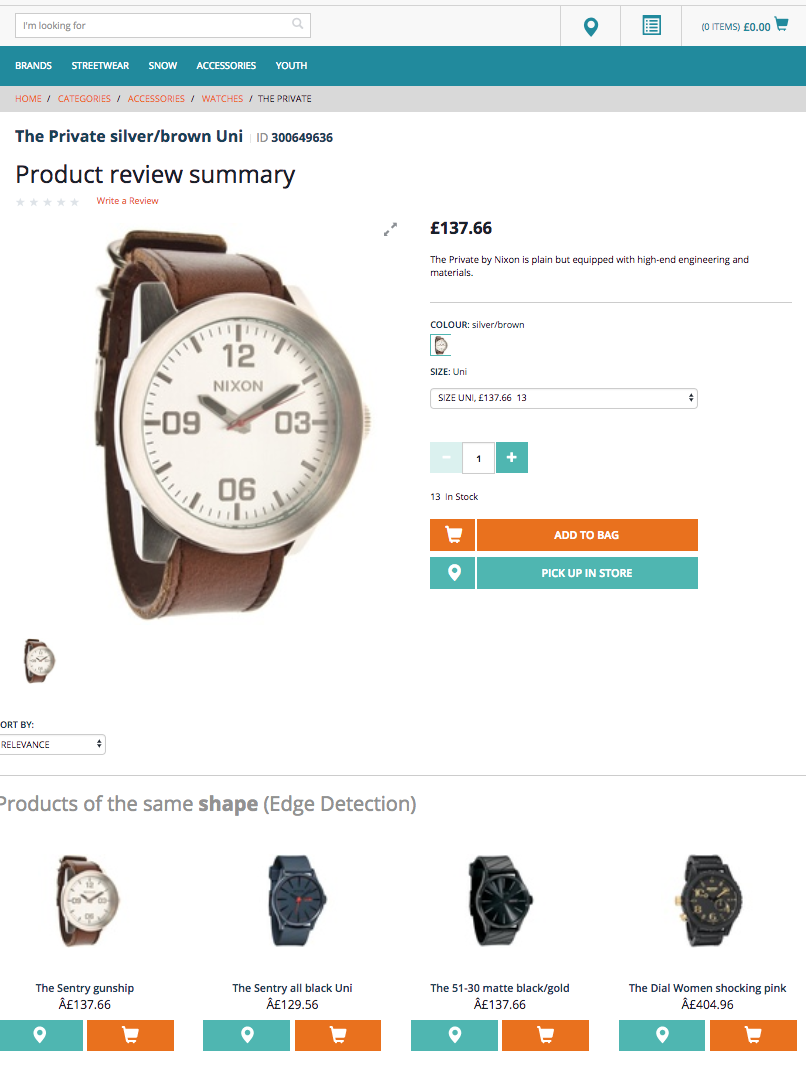

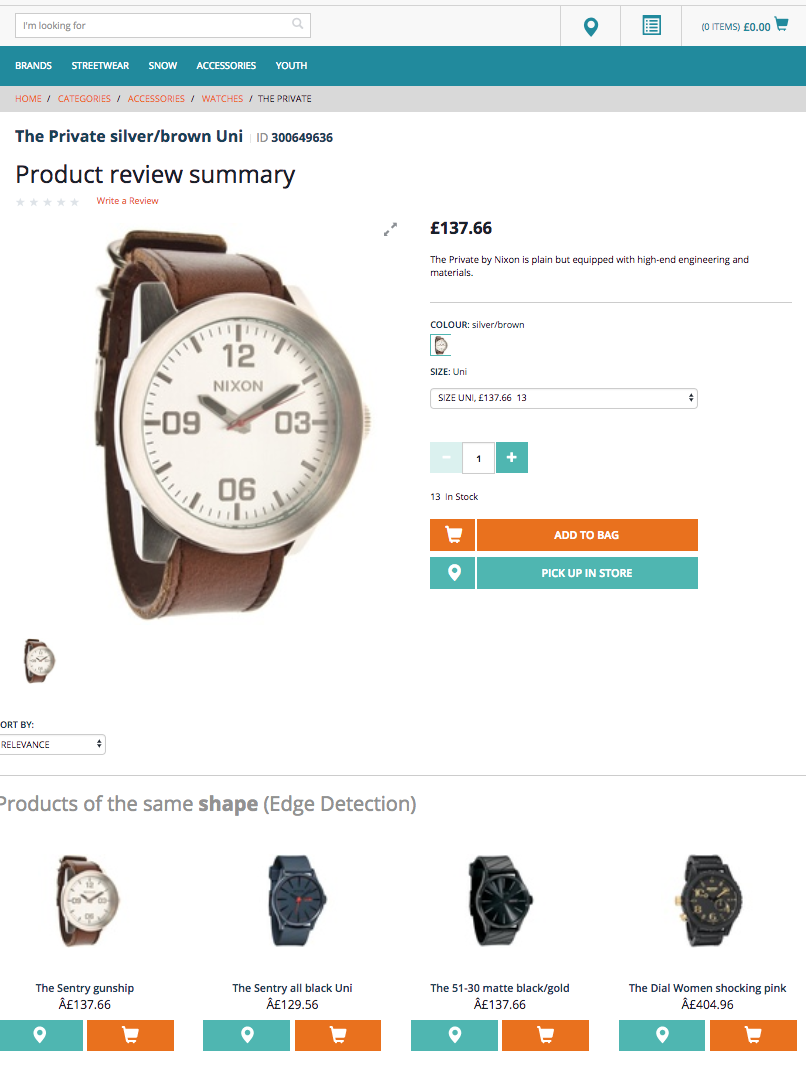

Color Layout: products with similar color layouts

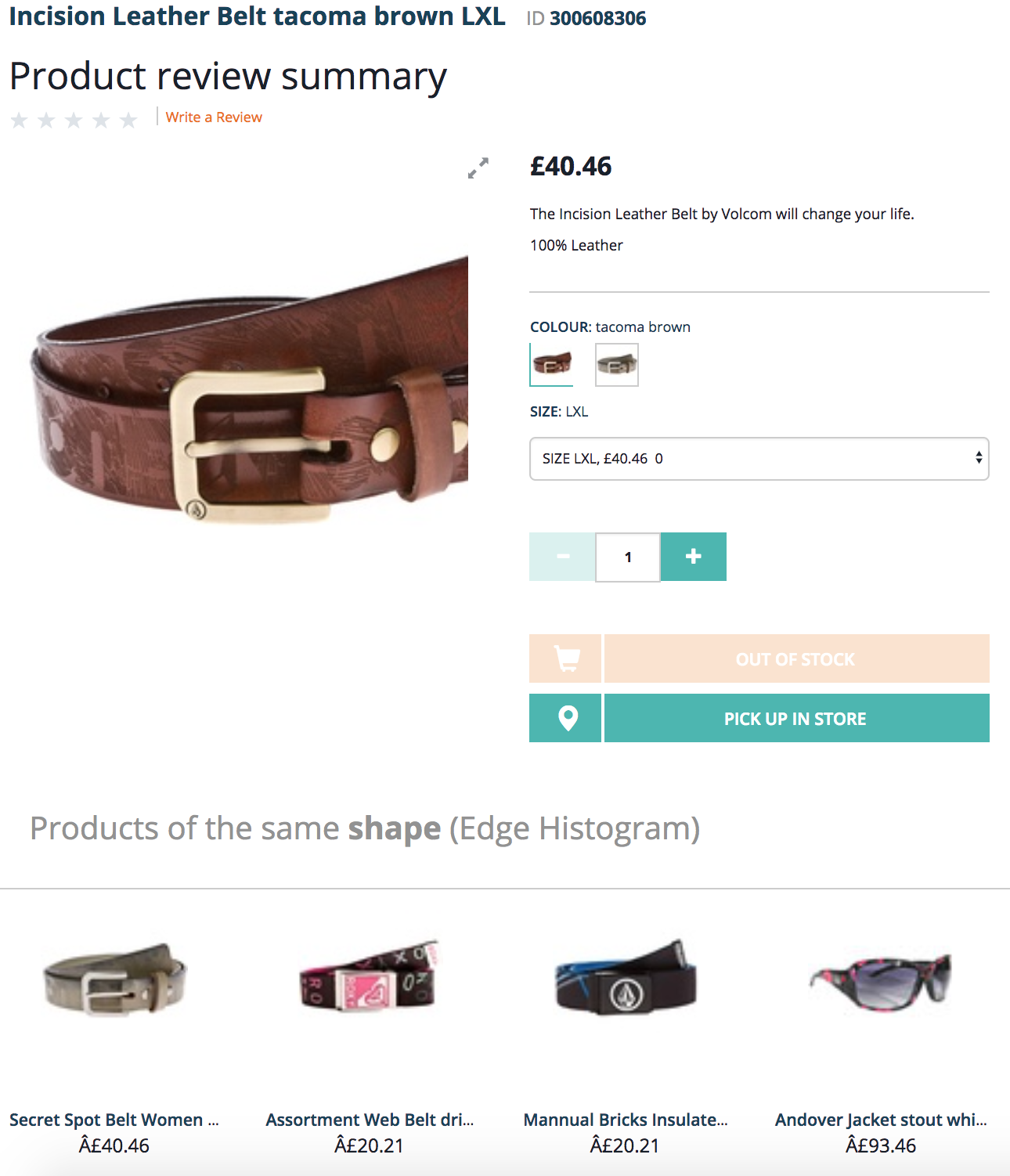

Edge Histogram: products with similar shapes

When the product catalog or the color cluster is small, the system shows the best possible match (the fourth product is not a belt).
