Fixing Oops with JGroups: Advanced Clustering for SAP Commerce Cloud

Every SAP Commerce Cloud setup is based on a multi-server setup organized as web cluster. This product comes with clustering support out of the box since very early versions. However, many years in a row, it is still one of the most challenging topics.

Every SAP Commerce Cloud setup is based on a multi-server setup organized as web cluster. This product comes with clustering support out of the box since very early versions. However, many years in a row, it is still one of the most challenging topics.

JGroups is an essential component of SAP Commerce Cloud. It is widely used as an embedded solution for distributed messaging and eventing in the cluster. It is hard to say that it is under-documented or it has some quirks that nobody knows how to encounter. However, almost every large project stumbles with JGroups. This article is aimed to shed some light on the internals of the SAP Commerce Cloud clustering and JGroups.

Redundancy and Failover

A cluster is a set of nodes running in coordination with each other, communicating with each other, and working toward a common goal.

The nodes in the cluster often share common resources that can be accessed concurrently, such as databases or file storage.

However, over-reliance on using centralized, shared resources creates single points of failure as well as complexity which often hard to handle. The best practice says that a number a number of elements whose failure could bring down the whole service should be reduced as much as we can and every resource should be either actively replicated (redundant) or independently replaceable (failover).

The performance characteristics of the cluster are not only dictated by their physical capabilities but also the mechanisms providing communication between the nodes. For the replicated resources, these mechanisms have a particular responsibility in the cluster. This brings us to cluster messaging as a important and often critical component of any cluster.

Cluster Messaging

Messaging is crucial to keep the cluster nodes in coordination with each other.

The nodes in a cluster can communicate with one another using two basic network technologies:

- IP unicast or multicast. The nodes use these to broadcast availability of services and heartbeats that indicate continued availability. The “heartbeat” messages help to deliver the information that a node in the cluster is alive. Multicast broadcasts messages to applications, but it does not guarantee that messages are actually received

- IP sockets or peer-to-peer communication. The cache invalidation messages are used to keep data consistent in all instances.

Every SAP Commerce Cloud instance is both broadcasting and listening for cache invalidation messages. Every time data is changed within one node, other nodes in the cluster receive an event informing them that their item cache is now stale and should be removed from node’s memory.

In order to deliver updates over the cluster and keep the nodes in sync, SAP Commerce uses special kinds of events named Cluster Aware Events.

There are the following out-of-the-box cluster aware events:

- Persistence layer related

- Item is removed (cache invalidation event)

- Item is changed (cache invalidation event)

- Tenant related

- Tenant is initialized/

- Tenant is restarted

- Cronjob processes and task engine related

- Cronjob process is executed

- Cronjob process is finished

- Repoll

Of course, the particular system may have custom cluster aware events.

If a cluster is not configured properly, some very odd issues may happen. Often the error messages have nothing in common with caches or even persistence layer. The most serious one is related to the data corruption and may affect the data consistency.

If the nodes are out of sync, an object may have different values on different nodes despite all of them are attached to the same database. Some node(s) may report that the item doesn’t exist while others show it is available and there is some value. Such errors may make troubleshooting very challenging.

Invalidation events are sent after each item modification or removal on a per-modification basis.

JGroups

For keeping caches in sync and implementing distributed messaging, SAP Commerce uses JGroups, a distributed coordination framework, a lightweight Java library for reliable group communication. It is not a standalone software; JGroups is an embedded solution, and comes as a Java library.

JGroups offers a high-level communication abstraction, called JChannel, which works like a group communication socket through which the nodes can send and receive messages to/from a process group.

With JGroups, an instance of SAP Commerce Cloud connects to the a cluster group so that other instances that connect to the same group will see each other. Any node can send a message to all members of the group. SAP Commerce in-cluster messages are cluster aware events explained above. JGroups maintains a list of members, notifies them when a new member joins, or an existing member leaves or crashes. The first (oldest) member is a coordinator.

Depending on the configuration, the nodes can use UDP mode where an IP multicast is used for point-to-multipoint communication, or use TCP mode, where group members are connected by TCP point-to-point connections. UDP is much better for large clusters if the infrastructure supports multicasting, but not every infrastructure supports it. For instance, Amazon Elastic Compute Cloud (Amazon EC2) does not allow UDP. If the nodes can’t reach each other via IP multicast and UDP with IP multicast as transport is not available, all you can use is UDP without IP multicasting or TCP with its point-to-point.

After a cold start, each node is unaware of others and their current status. This is where the shared resource is important. If UDP is on, such shared resource is the network. For the TCP, you need to pull it from some dedicated place, such as filesystem or database. JGroups supports 20 membership discovery protocols to build such a membership list. Among those, TCPPING (static list of member addresses), TCPGOSSIP (external lookup service), FILE_PING (shared directory), BPING (using broadcasts) or JDBC_PING (using a shared database). The PING protocol uses UDP multicast messages. Simply put, JGroups shouts “I’m here” and all JGroups-ready nodes respond with their details. The idea of the rest protocols is based on having an external storage or data source from where the addresses of the potential members can be requested.

Since the vast majority of cluster applications use a database as a shared resource, it can efficiently be used as a storage for the cluster configuration. The protocol called JDBC_PING uses a DB to store information about cluster nodes used for discovery. JDBC_PING is often pre-configured for the SAP Commerce Cloud instances, so that it is important to explain it in the first place.

When a SAP Commerce Cloud node starts, it queries a cluster setup (a list of nodes) from the database, and joins the cluster. If a node crushes, its IP is removed from the database.

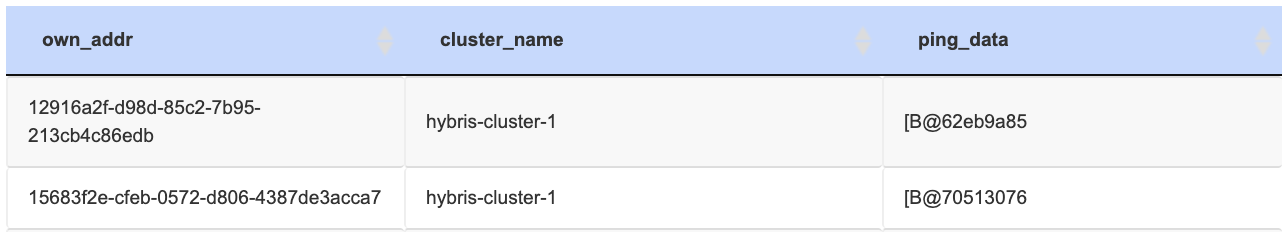

So, JDBC_PING members write their addresses to the store and remove them on leaving the cluster. So when SAP Commerce Cloud nodes are up, one after another, they register themselves in the JGROUPSPING table.

This table has three fields: the encoded local address (own_addr), cluster name (cluster_name), and serialized form of internal objects needed by JGroups (ping_data). The encoded local address and ping_data are not human readable because it has a set of individual components encoded.

However, during the discovery process the nodes also read other nodes’ IPs from the table and try to connect them to fetch information. Each node pings the other nodes. The process is a bit more complex because it is done by the first node, which is of the coordinator role, the first node marked as a responsible for the whole cluster. The coordinator is the member who has been up the longest and who in charge of installing new cluster configuration (called “views”).

If your nodes have dynamic IPs, and if they crash often, and if hybris nodes can’t often be gracefully stopped, a number of abandoned IPs are accumulating in JGROUPSPING so that the starting time grows over time until it stops responding.

If the JGROUPSPING table is full of such “zombies”, SAP Commerce Cloud will be trying to check all of these. The system will try to join with many retries (default is 10) and generate tons of timeout errors. In the kubernetes cluster, it might be misconstrued (“a node is sick and need restarting”) and kubernetes won’t be able to up the service.

There are two ways how these “zombies” can be automatically removed from the table:

- using Failure Detection protocol, and

- clearing the table after every change of the cluster configuration.

The failure detection protocol is used to exclude the node from the cluster if it is not responsive. All nodes receive the updated list of members once it happens.

- FD / FD_ALL / FD_ALL2 are protocols that provide failure detection by using heartbeat messages. If a node is not reachable, the VERIFY_SUSPECT protocol comes into play. The system sends a SUSPECT message to the cluster and if the node is not reachable again, the node gets excluded from the cluster. This is not recommended to use with UDP mode.

- Another protocol, FD_SOCK, is based on a “ring of sockets”. Each node connects to another one forming a ring. If a node is not responsive, it breaks the ring and the previous node knows where.

Because of two-phase removal, relatively slow heartbeat rate and verify suspect timeout, it may take some time to remove these items from the table. Often the node is stopped by kubernetes because of tons of errors earlier than the items are removed from the database.

The alternative (more exact, additional) way is a mode that clears the table when the cluster configuration changes. It is activated by the TCP tag attribute “clear_table_on_view_change” when set to true. In this case, the table is cleared after a node joins or leaves the cluster. If there were any zombies, they are removed with all non-zombies, and all live nodes re-inserts its own information. This method is often a bruteforce solution. It reminds me of a concept of a watchdog reset which restarts the whole service if something odd happens.

I also found that significantly reduce logical_addr_cache_max_size. It cleans all zombie nodes just after a crash. Documentation for the parameter is saying that default value is 2000. The recommended parameters are from 2 to 2000. It is the maximum number of elements in the logical address cache before eviction starts.

KUBE_PING

In Kubernetes, the nodes (pods) get a new local IP address each time they are created, and JGROUPSPING table is not going to be too useful. Since Kubernetes is in charge of launching nodes, it knows the IP addresses of all pods it started, and is therefore the best place to ask for cluster discovery. JGroups does discovery by asking Kubernetes for a list of IP addresses of all cluster nodes by label or in combination of namespace and label. For the Kubernetes setup, JGROUPS offers KUBE_PING extension, which is hosted on jgroups-extras.

https://github.com/jgroups-extras/jgroups-kubernetes

However, the latest (1.0.14 Final) KUBE_PING is supported by SAP Commerce Cloud 1905 only (with jGroups 4.0.19 Final).

When a network error occurs, a cluster may be split into partitions. There is a special JGroups service called MERGE which let’s these partitions communicate with each other and reform into a single cluster.

There is a TCP parameter “write_data_on_find” which is recommended to set to “true” to resolve the issue where subclusters could fail to merge after a network partition.

Bind address

I found that many sample jgroups-tcp.xml files include `match-interface:eth.*` as a value for bind-addr. It means extracting the IP address from thelocal network settings automatically. The “eth.*” means local network card.

However, I also found that Jgroups 4.0.16 doesn’t support match-interface as a value of bind_addr, but the next version, 4.1, does support it. The class JGroupsBroadcastMethod has a method validateTcpConnection() which assumes that the value is just an IP address.

Version 4.0.x: https://github.com/belaban/JGroups/blob/4.0.x/src/org/jgroups/util/Util.java

Version 4.1: https://github.com/belaban/JGroups/blob/JGroups-4.1.0.Final/src/org/jgroups/util/Util.java

CLNodeInfos

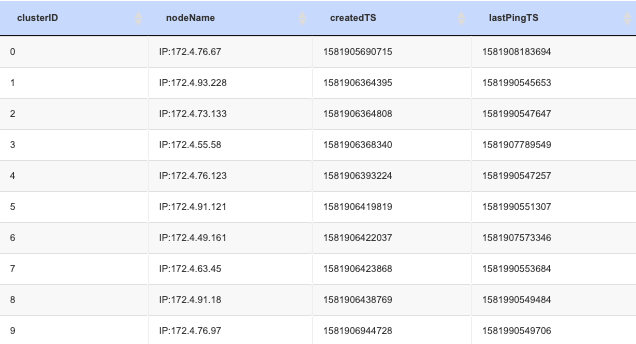

There is a built-in heartbeat mechanism very similar to JDBC_PING from jGroups, but part of SAP Commerce Cloud. CLNodeInfos is a name of the database table where the cluster configuration is stored.

Nodes are assigned to the clusterIds automatically using CLNodeInfos table. This mode is active if autodiscovery is set to true: cluster.nodes.autodiscovery=true

Each SAP Commerce Cloud instance sends a heartbeat to the database by updating CLNodeInfos table with the current timestamp. The default interval is 10000 ms and can be customized by changing cluster.nodes.stale.interval. So if the node is dead or it doesn’t have enough resources or broken connection to the database, the stale entry in CLNodeInfos will be treated as a signal for excluding such node from the cluster. If SAP Commerce Cloud is stopped gracefully, the system stops sending heartbeat, releases the cluster ID from the CLNodesInfo table.

cluster.nodes.stale.timeout is a max allowed ping delay. SAP Commerce stores all ping timestamps in the database and find stale nodes by comparing the last received ping time stamp with the current time delayed by this value. The default value is 30000 ms.

If a node is not responding, it may or may not stop pinging others. The ability to ping other nodes is not a good measure of healthiness of a node. So, it is recommended to build more comprehensive cluster health check mechanisms than provided out of the box. Very often these mechanisms should be project-specific. The best option is implementing a health check API which is able to quickly return the operational status and indicate its ability to connect to the SAP Commerce Cloud API or controllers.

CLNodeInfos is not used by cluster aware messaging system. The node ID is dynamic here. If you decide to run a cronjob on the node 3 (which is called clusterId here), this node 3 may point to the different servers. If a node leaves the cluster, their cluster ID can be reused by the next node joins the cluster. Each node knows its cluster id, and if the cronjob is set up to be run on the particular cluster id, only this instance will let it run.

IPv4

Many sources say that JGroups has issues with IPv6 and it is recommended to turn IPv6 off if IPv6 is not in use.

I recommend disable IPv6 via

-Djava.net.preferIPv4Stack=true

If you communicate between an IPv4 and you need to force use of IPv6, start your JVM with

-Djava.net.preferIPv6Addresses=true

The JDK uses IPv6 by default, although is has a dual stack, that is, it also supports IPv4.

Troubleshooting

Probe

First of all, JGroups provides a useful tool, Probe.

This tool shows the diagnostic information about how the cluster is formed.

# java -cp hybris/bin/platform/ext/core/lib/jgroups-4.0.16.Final.jar org.jgroups.tests.Probe

(use your version of the jar and hybris root path)

The tool shows how jGroups sees the cluster.

#1 (330 bytes): local_addr=hybrisnode-2 physical_addr=172.5.22.100:7800 view=View::[hybrisnode-5|1599] (3) [hybrisnode-5, hybrisnode-2, hybrisnode-4] cluster=hybris-cluster-admin version=4.0.16.Final (Schiener Berg) 1 responses (1 matches, 0 non matches)

1599 is a sequential number of the state of the cluster, a list of current members of a group. This state is called a view in JGroups.

Hybris-5 is a current coordinator node. This node is elected as a coordinator as the oldest in the cluster. The coordinator emits new views.

In the example above, you can see three nodes organized in the cluster with hybrisnode-5 elected as coordinator.

If you see that some members are not real, it is very likely these members are “zombies” left from the previous runs.

* * *

The following method can be used to test JGroups multicast communication between 2 nodes without running SAP Commerce Cloud:

On Node #1 (replace with your jgroups jar, ip address and port):

java -Djava.net.preferIPv4Stack=true \

-Djava.net.preferIPv4Addresses=true \

-cp bin/platform/ext/core/lib/jgroups-4.0.16.Final.jar \

org.jgroups.tests.McastReceiverTest -mcast_addr XXX.XXX.XXX.XXX -port XXXXX

On Node #2 (replace with your jgroups jar, ip address and port):

java -Djava.net.preferIPv4Stack=true \

-Djava.net.preferIPv4Addresses=true \

-cp bin/platform/ext/core/libjgroups-4.0.16.Final.jar \

org.jgroups.tests.McastSenderTest -mcast_addr XXX.XXX.XXX.XXX -port XXXXX

If you want to bind to a specific network interface card (NIC), use

* * *

Quering the JMX statistics and the protocol stacks of all JGroups nodes (change the jar file name):

java -cp bin/platform/ext/core/lib/jgroups-4.0.16.Final.jar org.jgroups.tests.Probe -timeout 500 -q uery jmx -query props

you can limit the output by specifying the section. For example,

* * *

If the cluster nodes can’t find each other within the specified time frame, failure detection mark them as suspicious and eventually removes from the cluster. Very often it is because the interval for failure detection is set to the small value (meaning the interval is too short). I recommend to increase these timeouts.

<FD_SOCK />

<FD timeout="3000" max_tries="3" />

JGroups 5.0

Two weeks ago Bela Ban, author of JGroups, introduced JGroups 5.0. According to the release information, it got some changes referenced as major.

http://belaban.blogspot.com/2020/01/first-alpha-of-jgroups-50.html