Environment Data Migration – Part 2

I have been discussing the situation where merchants run multiple instances of SAP Commerce (hybris) and want their non-production environments to be “more representative” of the production environment in terms of data.

In the previous part of this article, I looked at why data migration is important and at a number of initiatives that have supported it over the years.

In this part, I look at the areas of data to migrate and the concerns to consider when migrating them.

How Should a Merchant Approach Environment Migration?

The approaches described in Part 1 of this article are, in reality, only a sticking plaster over the desired solution. I suspect there is no universally available solution across all the systems out there, and that many developers and sys-ops have created custom solutions over the years. Why is this? There are several important criteria to consider:

- In the on-premise and private cloud worlds, staging and development environments were, and still are, sized differently from production in terms of memory, CPU cores, number of servers and disk/database capacity. The overall amount of data migrated therefore has to be reduced, which invariably needs either a manual process or a purpose-developed one;

- Some form of intermediate data processing is typically required to ensure that no personally identifiable information (PII) from the production system is distributed, as that could have a ruinous business impact;

- Many clients and suppliers, such as EPAM, have policies that do not permit development or support teams any access to production data, so these complex data migrations would have to be undertaken by the client's business staff;

- Perhaps only a delta needs to be migrated rather than the full data set each time, which adds complexity to the data selection logic;

- Some data content might need configuration values to be changed manually, such as analytics tags and hyperlinks.

What are the Areas of Data to Consider for Migration?

Customer data

Migrating customer data is only useful for replicating volumes of customers, customer segments, example relationships and cross-sample metrics such as customer-to-order ratios, and possibly for testing reports. It is strongly advised that customer data is obfuscated before it is made available for loading into other systems. This should include obfuscation of names, addresses, phone numbers, emails etc. Customer passwords can all be made the same by setting a known encrypted form of an easy-to-identify password.

- Consider if all the customer data is needed or just a subset.

- Consider if it is best to create from scratch a fictitious set of customer data rather than touching the actual production customer data.
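If a fictitious data set is preferred, generating it can be quite simple. The following is a minimal sketch only: the column names (`uid`, `name`, `phone`, `town`), the name lists and the semicolon-delimited layout are all assumptions for illustration and would need to be aligned with the Customer attributes of your own import header.

```python
import csv
import io
import random

# Hypothetical column names; adjust to the attributes your import header expects.
FIELDS = ["uid", "name", "phone", "town"]

FIRST_NAMES = ["Alex", "Sam", "Jo", "Chris", "Pat"]
LAST_NAMES = ["Taylor", "Morgan", "Reed", "Lane", "Frost"]
TOWNS = ["Springfield", "Riverton", "Lakeside"]


def make_customers(count, seed=0):
    """Return `count` fictitious customer rows; the same seed gives the same rows."""
    rng = random.Random(seed)
    rows = []
    for i in range(1, count + 1):
        first = rng.choice(FIRST_NAMES)
        last = rng.choice(LAST_NAMES)
        rows.append({
            # example.com is reserved for documentation, so no real mailbox is used.
            "uid": f"test.customer{i:06d}@example.com",
            "name": f"{first} {last}",
            "phone": f"+00 0000 {i:06d}",
            "town": rng.choice(TOWNS),
        })
    return rows


def to_csv(rows):
    """Serialise rows as semicolon-delimited text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, delimiter=";", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Using a fixed seed keeps the generated set repeatable, which helps when the same customers need to exist in several non-production environments.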

Product data and Product media

Migrating product data is useful to ensure there are example products relevant to searching, menu selections, facet filtering, product listing and product detail pages (PLP/PDP) and the testing of various promotions. It also covers cases where specific web content components call out specific products, for example in carousels or related-product widgets, and where products are in bundles. Product data does not necessarily need any obfuscation. The media is likely to take the form of media folders, media formats, media items (whether local or as URLs) and media containers.

Categories and Classifications

These will be in their own hierarchies in the product catalog and cross-referenced from the products.

Product Pricing

This data would be associated with the products in the form of price rows, although care is needed here because price rows cannot easily be “updated” through ImpEx.

Web content and web content media

This is a complex area in terms of determining all the web content components that are in use. The script above goes some way towards this (for earlier releases of SAP Commerce / Hybris). Care is needed when web components explicitly call out products (e.g. carousels, images that link to category searches, widgets such as best-selling products or what’s new), as the export is likely to cross-reference those products and the re-import would then fail if those products do not exist. Consideration is also needed as to whether both the staging and the online catalog versions are required. Note also, that

Orders

For any orders to be migrated, a degree of obfuscation is necessary wherever addresses and any payment-related sensitive material are included. Order data is expected to be needed only for reporting and performance testing.

I have assumed above that the catalogs themselves are already the same in all environments and do not need to be migrated.

What are the Concerns in Data Migration?

Data Volume

Exporting large volumes of data (such as products and product media items) can be expected to generate files of significant size, especially the media. The concern is therefore whether a delta can be exported or whether the full product set has to be exported each time.
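One way to approximate a delta, without relying on anything database-specific, is to compare the current export with the previous one and keep only the rows that are new or changed. This is a sketch under stated assumptions: both files are semicolon-delimited with a header row and a unique `code` column, which is a simplification of what an actual export produces.

```python
import csv
import io


def read_rows(text, key):
    """Index semicolon-delimited rows (with a header row) by the `key` column."""
    reader = csv.DictReader(io.StringIO(text), delimiter=";")
    return {row[key]: row for row in reader}


def delta(previous_text, current_text, key="code"):
    """Return rows that are new or changed in the current export, in current order."""
    previous = read_rows(previous_text, key)
    current = read_rows(current_text, key)
    return [row for k, row in current.items() if previous.get(k) != row]
```

Note that this only detects additions and changes. Rows removed from production since the previous export would need separate handling.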

Personal data

As stated above, it is essential that customer data is obfuscated before it is made available for loading into non-production systems. This should include obfuscation of names, addresses, phone numbers, emails etc. Customer passwords can all be made the same by setting a known encrypted form of an easy-to-identify password.
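As an illustration of what such obfuscation might look like, the sketch below replaces personal columns with stable pseudonyms and sets every password to one shared value. The column names (`uid`, `name`, `phone`, `line1`, `password`), the file layout and the password placeholder are assumptions, not actual SAP Commerce export output.

```python
import csv
import hashlib
import io

# Hypothetical column names for the sensitive fields in a customer export.
SENSITIVE = ("name", "phone", "line1")
# Placeholder only: substitute the encrypted form of your agreed test password.
SHARED_PASSWORD = "<encoded-test-password>"


def pseudonym(value, salt):
    """Derive a stable token; keep the salt secret and out of non-production."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:12]


def obfuscate(text, salt):
    """Mask personal columns in semicolon-delimited text that has a header row."""
    reader = csv.DictReader(io.StringIO(text), delimiter=";")
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames,
                            delimiter=";", lineterminator="\n")
    writer.writeheader()
    for row in reader:
        token = pseudonym(row["uid"], salt)
        # example.com is reserved for documentation, so no real mailbox is used.
        row["uid"] = f"{token}@example.com"
        for column in SENSITIVE:
            if column in row:
                row[column] = f"{column}-{token}"
        if "password" in row:
            row["password"] = SHARED_PASSWORD
        writer.writerow(row)
    return out.getvalue()
```

Deriving the pseudonym from the original identifier means the same customer maps to the same token in every file, so relationships such as customer to order survive the obfuscation.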

Quality/Completeness

It is likely that the exported data will need some form of filtering and validation and, as noted above, obfuscation of personal data. In addition, if any of the data models behind the migrated data are amended, the data migration process should be reviewed in case it needs to change to reflect them.
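One validation worth having is a check that every product referenced by exported web content also exists in the exported product set, since a missing reference would fail the re-import. A minimal sketch follows, assuming semicolon-delimited files with a header row, a `code` column in the product file and a hypothetical `products` column in the component file that holds a comma-separated list of product codes.

```python
import csv
import io


def column_values(text, column):
    """Collect the values of one column from semicolon-delimited text with a header row."""
    reader = csv.DictReader(io.StringIO(text), delimiter=";")
    return [row[column] for row in reader]


def missing_products(component_text, product_text):
    """Return product codes referenced by components but absent from the product export."""
    known = set(column_values(product_text, "code"))
    missing = []
    for cell in column_values(component_text, "products"):
        # Assumes a comma-separated list of product codes in a single cell.
        for code in filter(None, (c.strip() for c in cell.split(","))):
            if code not in known and code not in missing:
                missing.append(code)
    return missing
```

An empty result suggests the two exports are consistent with each other. Anything returned should either be added to the product export or removed from the content before re-import.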

Frequency

Determine how often data migration needs to be performed. This should take into account how much the production data varies and whether any significant changes are planned to the SAP Commerce systems, such as upgrades, that might warrant out-of-the-ordinary testing or training.

Security

Any data migration involving personal data must be carefully planned and managed from a security perspective.

Impact on Performance

If a large data migration is scheduled from a production system, bear in mind that it will generate a lot of database access, consume additional memory and take up disk space while the exported data is awaiting download.

Automation

Where possible, consider a design that helps automate the data migration. For example, if data can be obfuscated automatically while it is being exported, then do that.

Housekeeping

Data sets that are generated for export should be purged afterwards, to avoid consuming resources unnecessarily.
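Purging can be automated with a small scheduled job. The sketch below removes export files older than a given number of days. The folder, the file patterns and the retention period are assumptions to be replaced with whatever your export process actually produces.

```python
import time
from pathlib import Path


def purge_exports(folder, max_age_days, now=None, patterns=("*.zip", "*.csv")):
    """Delete export files older than `max_age_days`; return the names removed."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    removed = []
    for pattern in patterns:
        for path in Path(folder).glob(pattern):
            # Only files matching the patterns are touched; anything else is left alone.
            if path.is_file() and path.stat().st_mtime < cutoff:
                path.unlink()
                removed.append(path.name)
    return sorted(removed)
```

Returning the names of the removed files makes it easy to log what was purged, which is useful evidence when personal data is involved.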

Approaches

Given the available methods, the areas of data to migrate and the concerns cited above, it becomes more evident why there is no universal approach to this and why so many individual approaches have been taken.

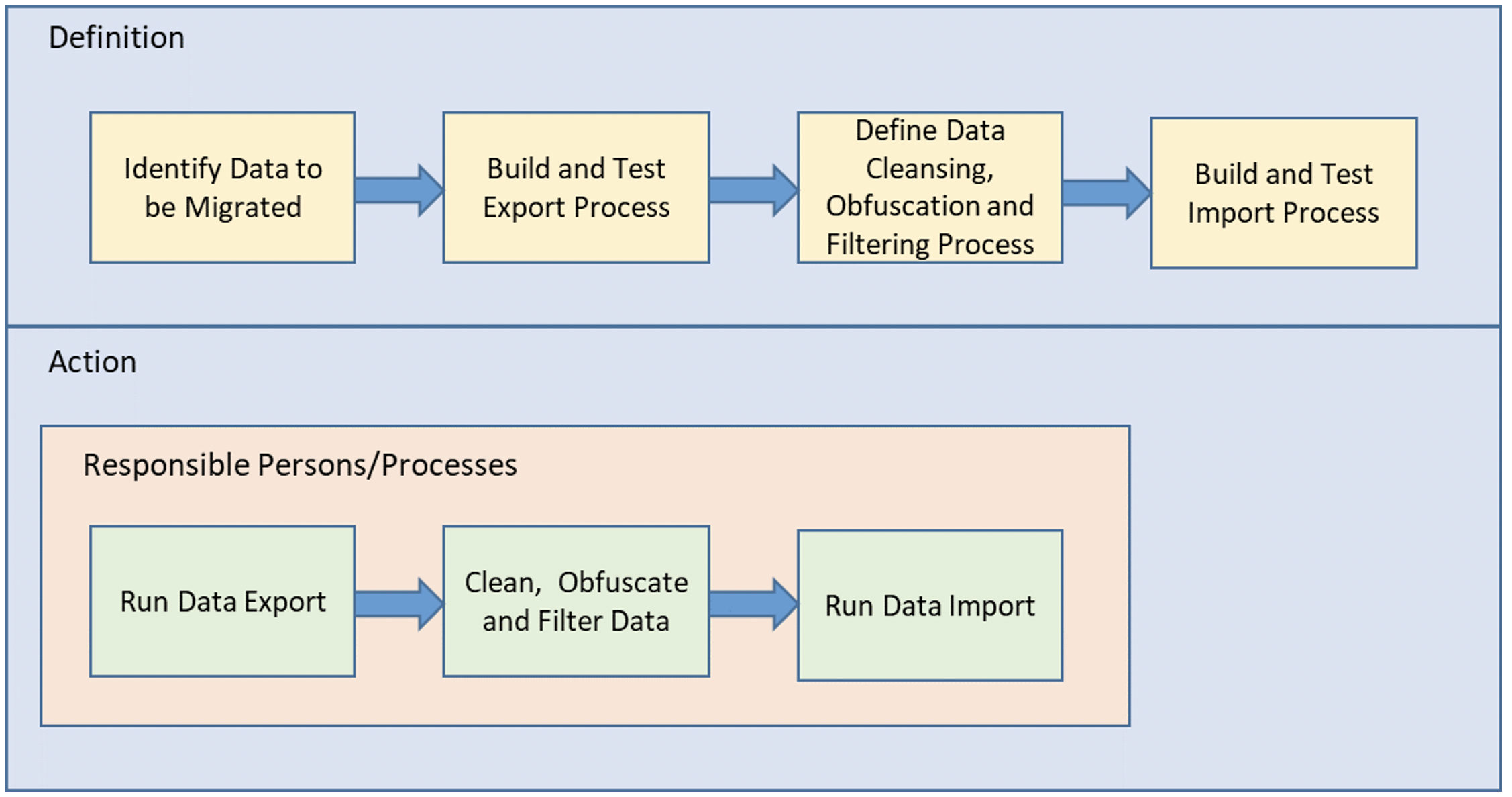

The process flows for the development of such a process and the operations of such a process will be based on something like below:

Fig. 1 – Logical Approach to Environment Migration of Data

Data Export Process Using ImpEx

The best-known approach to export is to use an ImpEx export. Taking the example below, three lines can be used to control the export of a specific data type:

- A line to define the filename that the export will be written to,

- A line defining the ImpEx header for the data type and the attributes to export,

- A line controlling the export, with the option to include a flexible search query to select specific data.

Looking at this in action, for all records of the specific type:

#% impex.setTargetFile( "Product.csv" );

INSERT_UPDATE Product;type(code,itemtype(code))[unique=true,allownull=true];value[unique=true,allownull=true]

#% impex.exportItems( "Product" , true );

Or with a flexible query:

#% impex.setTargetFile( "Product.csv" );

INSERT_UPDATE Product;type(code,itemtype(code))[unique=true,allownull=true];value[unique=true,allownull=true]

#% impex.exportItemsFlexibleSearch( "SELECT {type:PK} FROM {Product as type}, {catalogversion as CV}, {catalog as C} WHERE {type:catalogversion}={CV:PK} AND {CV:catalog}={C:PK} AND {C:id}='masterProductCatalog' AND {CV:version}='online'" );

I do not intend this article to be a tutorial on ImpEx, but the above is the basis for all the data types to be exported. Note that media files are named after their PK codes and are exported in a separate zip file. The challenge in the exercise is determining which data types to export.
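Once the list of data types has been decided, the three-line pattern lends itself to generation rather than hand-editing. The following is a sketch only: `export_block` and `export_script` are hypothetical helper names, and the header columns passed in are illustrative and must match your own data model.

```python
def export_block(item_type, header_columns, query=None):
    """Build the three-line ImpEx export block for one item type."""
    header = ";".join(header_columns)
    if query is None:
        # Mirrors the unfiltered form shown in the article.
        control = f'#% impex.exportItems( "{item_type}" , true );'
    else:
        # Mirrors the flexible search form shown in the article.
        control = f'#% impex.exportItemsFlexibleSearch( "{query}" );'
    return "\n".join([
        f'#% impex.setTargetFile( "{item_type}.csv" );',
        f"INSERT_UPDATE {item_type};{header}",
        control,
    ])


def export_script(specs):
    """Join the blocks for several types into one export script."""
    return "\n\n".join(export_block(*spec) for spec in specs)
```

For example, `export_script([("Product", ["code[unique=true]"]), ("Category", ["code[unique=true]"])])` produces two blocks, one per type, so the list of types becomes the single place to maintain.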

Obfuscation and Cleaning Process

The exported files can easily be edited in a text editor and obfuscated by copy/paste or manual editing.

If the process is to clean up personal data, then this should be undertaken by authorised personnel only.
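Whoever performs the clean-up, a final automated check for leftover personal data is a sensible safety net. The sketch below scans text for email addresses that are not on an allowed list. The pattern is deliberately loose, and the assumption that obfuscated records use the `example.com` domain is mine, not a convention of the platform.

```python
import re

# Deliberately loose pattern: it favours false positives over missed addresses.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Assumption: obfuscated records use this reserved documentation domain.
ALLOWED_DOMAINS = ("example.com",)


def residual_emails(text):
    """Return (line_number, address) pairs for addresses outside the allowed domains."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for address in EMAIL.findall(line):
            domain = address.rsplit("@", 1)[1].lower()
            if domain not in ALLOWED_DOMAINS:
                findings.append((number, address))
    return findings
```

A check like this only covers text files and only one kind of personal data, so it complements a manual review rather than replacing it.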

Note: I have seen examples where exported media items are PDF files that also contain personal data. Take care to check that such items are not distributed to unauthorised persons or systems.

Conclusions

I have used SAP Commerce (hybris) for over 12 years now, and nearly every project has needed some mechanism to migrate data from the production system to non-production systems. All of the approaches have been purpose-designed, mostly derived from the ImpEx export and re-import approach.

By far the most complex area to migrate is the hybris web content, as there are many data types involved and multiple, complex relationships exist between the pages, content slots and CMS components. However, this should be more manageable in headless architectures, as typical content management systems include version control and site migration capabilities. Remember that web content migration in SAP Commerce should also include other files, such as CSS stylesheets and JavaScript files, and that these files are not transferred via the ImpEx export/import approach.

Any approach should be factored in throughout the SDLC: discussed during any discovery phase, built and tested during development, and supported in operations with training provided. Ensure also that any approach to handling personal data is addressed securely.

The requirement for migration will probably always exist, and an efficient solution will benefit all the stakeholders involved: merchant, development and support teams alike.

Rob Bull

Lead Solutions Architect at EPAM Systems